So you want to get rid of your mainframe?

In the spring of 1995, I had an opportunity to witness a historic occasion: the removal of a firm’s mainframe IBM computer. I wrote a couple of columns for Infoworld back then and was recently reminded about the moment. Here is what I wrote:

The notion of turning off your mainframe computers is somewhere between romantic and impossible, depending upon whom you talk to. Our esteemed editor-in-chief gave an actual date (this month) when the last mainframe at Infoworld would be shut off. That is the romance part. I, meanwhile, am convinced that there will always be room for mainframes in corporate America, somewhere.

The company that I visited is Edison Electric Institute, the trade association for the nation’s several hundred investor-owned electric utilities. They are located in downtown Washington DC and had — until recently — a single IBM mainframe computer running their business.

At the core of any trade association are lists: “We are constantly putting on conferences, mailing things, and updating records of people in our members’ companies,” said Jon Arnold, the IS director at Edison. Edison publishes over 200 different handbooks, conducts training seminars, and even tests potential operators of nuclear plants.

Edison had originally purchased a 4381 about 12 years ago. “At the time, we were spending hundreds of thousands of dollars with an outsourcing company — only back then we called them remote job entry sites,” said Jeff Kirstein, one of Arnold’s managers. “We had written all sorts of applications in COBOL and everything was charged back to the end user.”

Edison decided to buy their own mainframe and began to write what would end up being a core of six different applications, most of them written in SAS: a list management routine that had over 70,000 names on over a thousand different lists, a meeting registration package to keep track of hotels, courses, and other details needed for the various conferences the trade association put on each year, a financial tracking package, a package to keep track of the several hundred publications and periodicals that Edison subscribed to, and a committee membership tracking system.

SAS was flexible, to be sure. “But it was also a hog, and as a statistical package it was poor at generating business reports, but once we got the hang of it, it worked fine for our purposes,” said Kirstein.

The mainframe-based applications “got the job done,” said Jim Coughlin, a programmer analyst at Edison. “We had set up our mainframe to make it easier for each user to maintain their own lists: every mainframe logon ID was tied to a particular virtual machine and memory space. However, the mainframe systems were fairly crude: each time a user opened a list it would take time to re-sort and there was minimal error trapping during data entry.” One plus for the mainframe was that “we didn’t have to spend a lot of time running the machine,” said Arnold. Using IBM’s VM operating system, Arnold had one system operator and an assistant to do routine maintenance and backups.

But the annual fixed costs were high, and when Edison moved four years ago into a new building in downtown DC they began to deploy LAN-based technologies. At the time of the move they began thinking about turning off their mainframe. However, they had to manage the transition slowly:

“First off, my predecessor signed a five-year lease with IBM shortly before I came on board,” said Arnold. That lease, along with the monthly software maintenance charges for the various applications and system software, ran to about a quarter of a million dollars a year. Breaking the lease early would have been costly, so Edison decided to start planning on turning the mainframe off when the lease expired in February, 1995.

Secondly, they needed to find another tool besides SAS that would run their applications on the PC. More on what they picked next week.

Third, they had to populate their desktops with PCs rather than terminals. They began to do this when they moved into their new building, first buying IBM PS/2 model 50s. Now Edison buys Dell Pentiums exclusively. All of the desktops are networked together with two NetWare 3.12 servers, and run a standard suite of office applications including WordPerfect GroupWise and word processing, Lotus 1-2-3, and Windows.

Fourth, they had to retrain their end-users. This was perhaps the most difficult part of the process, and is still ongoing.

I’ve seen lots of training efforts, but my hat is off to Edison’s IS crew: they have the right mix of pluck, enthusiasm, and end-user motivations to make things work.

The IS staff raffles off a used model 50 for $50 at a series of informal seminars (“You have to be present to win,” said Arnold). And “we also kept a list of people who didn’t get trained, and they don’t get access to the new applications on the LAN,” said Jeanny Shu Lu, another IS manager at Edison. Finally, the applications developers in IS held a series of weekly brown-bag lunches with their end-users, and solicited suggestions for changes. These would be implemented if there was a group consensus.

I turned off my first mainframe at 1:11 pm on March 2nd, 1995 in the shadow of the building where the original Declaration of Independence is kept. Somewhat fitting, but somewhat sad. I was at the offices of the Edison Electric Institute, which happens to be located across the street from the National Archives in downtown DC.

When I first spoke to Jon Arnold (the IS manager at Edison) last year about doing this, I was somewhat psyched: after all, I had been using mainframes for about 15 years and had never been in the position of actually being able to flip the big red switch (a little bigger than the ones on the back of your PCs, but not by much) off before. However, when the time came to do the deed on March 2nd, my feelings had changed somewhat: more of a mixture of bathos and regret.

IBM mainframes were perhaps responsible for my first job in trade computer publishing in 1986: back then I was deeply involved in DISOSS (now called OfficeVision and almost forgotten), 3270 gateways, and products from IBM such as Enhanced Connectivity Facility and IND$FILE file transfer.

The mainframe I was ending was a small one: an IBM 4381 model 13. It had, at the end of its lifetime, a whopping 16 megabytes of memory and 7.5 gigabytes of disk storage — DASD to you old-line IBMers. Since this may be the last time you see this acronym, it stands for Direct Access Storage Device. It was running VM, which stands for Virtual Machine, as its operating system. And since it cost over a quarter of a million dollars annually to run, it had reached the end of its cost-effective life. It was replaced by two NetWare file servers, one of which had more RAM and disk than the 4381 had. The network was token ring, reflecting the IBM heritage of Edison and the utility industry it represents. Edison took existing SAS and COBOL applications running on VM and rewrote them in Magic and Btrieve on the LAN.

Magic is an Israeli-based software development company that provides tools to build screens and assemble database applications that can run on a variety of back-end database servers. One of the reasons Edison began using it was they had a contractor who began to build applications using it. “However, they never finished the work and we had to take it over,” said Coughlin. “It is a wonderful rapid application development language, and easy to make changes.”

The combination of the two products is very solid, according to Edison’s developers and end-users. “We’ve had no data corruption issues, no performance problems at all,” said Kirstein. “We had tried other products, such as writing X-Base applications in Clipper, but that was a mess.”

The Magic-based applications took less time to write than their SAS equivalents. For example, rewriting the meeting registration package took two months, about half the time the equivalent SAS application required.

And the new LAN-based applications allowed Edison to improve service to their members, avoid duplicate mailings, and increase the quality of their databases. “We forced more cooperation among our end-users since now one person owns the name while another owns the address,” said Kirstein. “We’ve added lots of user-requested capabilities to our list management software, but we’ve also managed to keep it centralized,” said Arnold.

Getting rid of a mainframe, even a small one like this 4381, isn’t simple, of course. It took Edison several years to migrate towards this LAN-centric computing environment and to rewrite their applications on PC platforms. And then they still had to pay several thousand dollars to have someone cart the 4381 off their premises. (Their original lease, written five years ago, stipulated that Edison would be responsible for shipping charges.)

So in the end, the machine had a negative salvage value to Edison. They managed to make a few hundred bucks, though, on a pair of 3174/3274 controllers that someone wanted to purchase. Ironic, though, that the communications hardware (which was even older technology than the 4381 itself) would prove to be worth more and outlive the actual beast itself.

Turning it off was relatively easy: I typed in “SHUTDOWN” at the systems console (forgetting for a moment where the “ENTER” key is on the 3270-style keyboard), waited a few minutes, and then hit the off switch on several components, including a tape drive and CPU unit. Ironically, the cabinet of this mainframe was red: perhaps Edison knew they were going to replace the mainframe with a NetWare network long ago? The hardest part was remembering the command to turn off forever their 3270 gateway, which was a piece of software so old from Novell that it no longer was supported or manufactured (why upgrade something that you eventually will retire?).

What made the moment of shutdown a sad one was that I was standing amidst people who had seen the 4381 first come in the door at Edison, and who had spent a large portion of their careers in the care and feeding of the machine.

“It is going to be pretty quiet in our computer room,” said Kirstein.

After we shut down the mainframe, the Tricord running NetWare was still running. And it was a lot quieter.

Where are they now? Arnold worked for years as Managing Director, WW Utilities Industry, for Microsoft and sadly died far too soon.

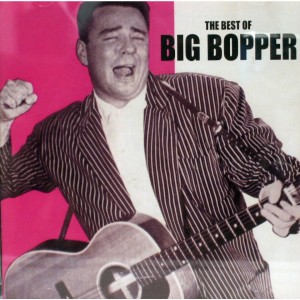

Many of us of a certain age remember the “day the music died” when Buddy Holly and the Big Bopper’s plane crashed. Or if not the actual day we get the reference that was most notably chronicled in the song “American Pie” by Don McLean. But there is another day that is harder to pin down, when digital music finally took over and we never looked back on CDs, cassettes, 8 track tapes and vinyl. I put that date somewhere around 1999-2000, depending on how old you were and how much analog music you had already collected by then.

1. Music is more mobile. Back then we had separate rooms of our homes where we could listen to music, and only in those rooms. The notion of carrying most of your music collection around in your pocket was about as absurd as Maxwell Smart’s shoe-phone. We had separate radio stations with different music formats too that helped with discovering new music, and would carry recordings to our friends’ homes to play on their expensive stereos. Stereos were so named because they had two speakers the size of major pieces of furniture.

3. Sharing is caring. At the beginning of this digital music transformation was Napster. It was the undoing of the music industry, making it easy for anyone to share digital copies of thousands of songs across the Internet. While they were the most infamous service, there were dozens of other products, some legit and some fairly shadowy, which I describe in this story that I wrote back in November 2001 that shows some of the interfaces of these forgotten programs.

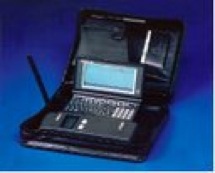

My first brush with wireless messaging was when Bill Frezza stopped by my office and gave me one of his first prototypes of what would eventually turn into the BlackBerry. It was called the Viking Express, and it weighed two pounds and was a clumsy collection of spare parts: a wireless modem, a small HP palmtop computer running DOS, and a nice leather portfolio to carry the whole thing around in.

The Apple Mac has played an important part of my professional journalism career for at least 20 of the years that I have been a writer. One Mac or another has been my main writing machine since 1990, and has been in daily use, traveling around the world several times and my more-or-less constant work companion. It is a tool not a religion, yet I have been quite fond of the various machines that I have used.

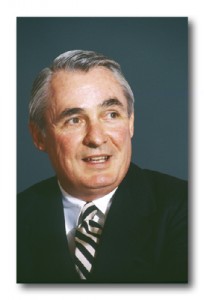

Another great tech manager has left our ranks this week, Ed Iacobucci. Ed lost a 16-month battle with pancreatic cancer. I last saw him two years ago when I was transiting Miami, and he was good enough to meet me at the airport on the weekend to brief me on his latest venture on desktop virtualization, Virtual Works. That is the kind of guy he was: coming out to the airport for a quick press meet on the weekend. There aren’t too many folks that would do that, and it shows the mutual respect we had for each other.