“Have you ever had the opportunity to work with someone who is the best in the world?” That question got at the heart of a presentation from Jim McKelvey last night at a rather unusual event that I attended at our newly renovated central library downtown. I’ll get to Jim in a moment, but first I want to tell you the context of the event.

Here in St. Louis, as in many areas of the world, we have a coding shortage. There are dozens of companies, some big and some just getting started, that can’t hire good programmers. It isn’t from lack of trying, or resources: they have the money, the open positions, and the need. In the past, the explanation has been that companies either can’t find programmers or don’t know where to look. But there is a third possibility: the coders exist, they just need some training to get started. That is where an effort called LaunchCode comes into play.

For the past several weeks, hundreds of folks have been taking CS50, the beginning computer science programming class that Harvard offers over the edX online platform. The class started with more than a thousand participants and is now down to about 300 or so hardy souls who spend 20 hours or more per week learning how to code. Each week they gather in our library to listen to the lectures and work together on the various programming problem sets.

David Malan, who went to Harvard himself and is a rockstar teacher, teaches the course. I watched a couple of his lectures and found them interesting and engaging, even when he covers some basic concepts that I have long known. If I had him teaching me programming back in the day, I might have stuck with it and become a coder myself.

The CS50.tv collection online is amazingly complete: there are scans of the handouts, quizzes, problem tests, additional readings, supplemental lectures and so forth. The courseware is solidly organized and designed, and very impressive, from my short time spent looking around.

But here is the problem: while the online class is fantastic, only one percent of the people who take the class complete it satisfactorily. That is almost a mirror image of the completion rate for those attending in-person at the Harvard campus, where 99% of the students finish. I was surprised at those numbers, because Malan goes quickly through his lectures. You have to stop and rewind them frequently to catch what he is doing.

This is where LaunchCode comes into play. The operation, which is an all-volunteer effort, is trying to short-circuit the coder hiring process by pairing the students who complete the course with experienced programmers at one of more than 100 target tech companies that are looking for talent. They think of what they are doing as going around the traditional HR process and building a solid local talent pool. It is a great idea. I spoke to a few students, many of whom come from technical backgrounds but don’t have current coding experience. They are finding the class challenging but doable.

LaunchCode is also supplementing the CS50 lectures and online courseware with meatspace assistance. They have space reserved downtown for the students to get together and help each other. Some students have actually moved to St. Louis so they could take the class here: that was pretty amazing! LaunchCode has created mailing lists and Reddit forums where students can share ideas. But that isn’t enough, and last night we learned that Malan is coming to town in a few weeks, bringing a dozen of his teaching assistants with him for a special evening hackathon for the class participants. Wow. Will that help get more students to finish the class? I hope so, because I want Malan & Co. to make a regular trip here to see the next class, and the next.

The problem with teaching programming is that you have to just do it to become good at it. No amount of academic study is going to help you understand how to parse algorithms, debug your code, figure out what pieces of the puzzle you need, and organize them in a way that makes for more efficient code. You just have to go “build something,” as McKelvey told us all last night.

Back to his question posed at the top of my post. Obviously, he thinks Malan is the best programming teacher in the world. He challenged everyone in the auditorium to think about what questions they would ask Malan when he comes into town, and how they can leverage their time with the master. He drew an analogy to the time he built his glassblowing studio and was able to spend time with Lino Tagliapietra, a master Venetian glassblower. Last night he once again told the story of how humbling an experience that was and how he was allowed to ask only a single question of the “maestro.” Wow.

McKelvey was very gracious with his time, and answered lots of questions from the LaunchCode students. Many of the questions last night were about how the students could position themselves to get a coding job once the class is over in a few weeks. McKelvey kept emphasizing that they need to just “rock the class” and not worry about whether they were going to be programming in PHP or Ruby. “That isn’t important,” he kept saying: just demonstrate to Malan that they can write the best possible code when he comes here in a few weeks.

I have heard McKelvey speak before, and last night he was in fine form. Will LaunchCode succeed at seeding lots of beginning coders? Only time will tell. But my hat is off to them for trying a very unconventional approach, and I hope it works.

Comments always welcome here:

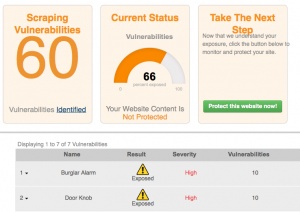

Because more malware enters via the browser than any other place across the typical network, enterprises are looking for alternatives to the standard browsers. In this white paper that I wrote for Authentic8, makers of the Silo browser (their console is shown here), I talk about some of the issues involved and benefits of using virtual browsers. These tools offer some kind of sandboxing protection to keep malware and infections from spreading across the endpoint computer. This means any web content can’t easily reach the actual endpoint device that is being used to surf the web, so even if it is infected it can be more readily contained.

I am reminded today of the Cold War, with next week being the 30th anniversary of the Chernobyl disaster. But leading up to this unfortunate event was a series of activities during the 1950s and 1960s in which we were in a race with the Soviets to produce nuclear weapons, launch manned space vehicles, and create other new technologies. We also were in competition to develop the beginnings of the underlying technology for what would become today’s Internet.

Ultimately the Soviet-style “command economy” proved inflexible and eventually imploded. Instead of being a utopian vision of the common man, it gave us quirky cars like the Lada and a space station Mir that looked like something built out of spare parts.

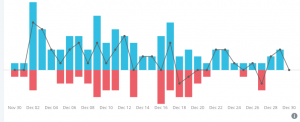

It is ironic that the same thing that got Chris Christie in trouble, delaying traffic into Manhattan, is being used by others to build a multimillion-dollar business. I am talking about network traffic for stock traders. Perhaps you have seen the stories about them based on
