Copying content from the Web can be both a good and a bad thing. There are services, such as ScraperWiki.org, that make it easy to scrape public data archives, and they are used by data scientists and journalists to track trends and uncover government abuses. Google and other search engines also use various kinds of scraping algorithms to index and categorize your site, and to ensure that your content is ranked appropriately.

But for the most part, scraping is bad news. Chances are good that someone has copied your Web content and is hosting it as their own elsewhere online. This happened with LinkedIn not too long ago, where someone picked up thousands of personal profiles to use for their own recruiting purposes. That is a scary thought, indeed.

And lest you think this is difficult to do, there are numerous automated scraping tools that make it easy for anyone to collect content from anywhere, including Mozenda and ScrapeBox. I won’t get into whether it is ethical to use these on a site whose content you don’t own. Some of these attack sites are very clever in how they go about their scraping, using massive numbers of ever-changing IP address blocks to obtain their content.

So what can you do to prevent the bad kind of scraping? There are several companies that try to protect your site from being scraped by a bad actor, including Distil Networks and CloudFlare’s ScrapeShield.

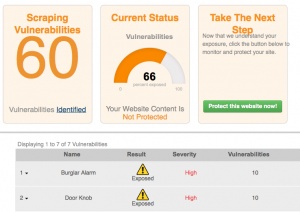

But today’s post is about another tool, ScrapeDefender, that goes even further than these two. You can watch a screencast video that I just produced here that shows its features.

ScrapeDefender is easy to get started with: you just plug in your site’s URL and it will take about a day to look at your site and see where you are vulnerable. When I tried it with my own domain, strom.com, I was surprised to see it list 150 different exploits. Some of them have pretty oddball names, such as dripping water or shotgun, and they show where anyone can come in and grab your content. The service provides a piece of JavaScript tracking code that you add to each of your site’s page headers. Once this is in place, you can monitor what is going on in near-real time and protect your site against these abusers.

For example, you can view how many pages a potentially abusive IP address has visited, any geolocation information, which risk metrics were tripped, what alarms were generated because of this activity, and other IP addresses that are owned by the same organization. All that information can help you figure out whether your site was suddenly very popular or was being targeted by one of your competitors or someone who wants to steal your content. The service is Web-based: you bring up your browser and can view these metrics and reports, along with suggestions on best security practices to defend your content.
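To make the idea of a per-IP risk metric a bit more concrete, here is a minimal Python sketch of the simplest possible check: counting how many pages each address requests inside a sliding time window. This is not how ScrapeDefender works internally (I don’t know that), and the function name, the thresholds and the sample traffic are all made up for illustration.

```python
from collections import defaultdict, deque


def flag_heavy_visitors(requests, max_pages=30, window_seconds=60):
    """Return the set of IPs that requested more than max_pages pages
    within any sliding window of window_seconds.

    requests: iterable of (timestamp_in_seconds, ip) tuples, sorted by time.
    The default thresholds are arbitrary assumptions, not recommendations.
    """
    recent = defaultdict(deque)  # ip -> timestamps still inside the window
    flagged = set()
    for ts, ip in requests:
        window = recent[ip]
        window.append(ts)
        # Drop timestamps that have fallen out of the window.
        while window and ts - window[0] > window_seconds:
            window.popleft()
        if len(window) > max_pages:
            flagged.add(ip)
    return flagged


# Hypothetical traffic: one address fetches 50 pages in 50 seconds,
# another reads 5 pages, one per minute.
sample = [(t, "203.0.113.7") for t in range(50)]
sample += [(t * 60, "198.51.100.23") for t in range(5)]
sample.sort()

print(flag_heavy_visitors(sample))  # prints {'203.0.113.7'}
```

A check this naive is easy to evade by spreading requests across many addresses or slowing them down, which is one reason a single counter is not enough on its own.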

The hard part about defending and hardening your site against potential scrapers is that it is difficult to distinguish between a legitimate visitor and an automated bot that is collecting your content. That is the secret sauce of ScrapeDefender: they have looked at thousands of websites to figure out when a bad actor is present, and have coded these various behaviors into their system.

You can try ScrapeDefender for free. The paid service starts at $79 per month to keep track of a single domain, with more extensive and more expensive plans available. It is well worth a look.