I recently wrote a sponsored blog post for VirtualZ Computing, a startup that makes innovative mainframe software. As I was writing the post, I was thinking about the various points in my professional life where I came face-to-CPU with IBM’s Big Iron, as it once was called. (For what passes for comic relief, check out this series of videos about selling mainframes.)

My last job working for a big IT shop came about in the summer of 1984, when I moved across the country to LA to work for an insurance company. The company was a huge customer of IBM mainframes and was just getting into buying PCs for its employees, including mainframe developers and ordinary employees (no one called them end users back then) who wanted to run their own spreadsheets and create their own documents. There were hundreds of people working on and around the mainframe, which was housed in its own inner sanctum, a raised-floor series of rooms. I wrote about this job here, and it was interesting because it was the last time I worked in IT before switching careers to tech journalism.

Back in 1984, if I wanted to write a program, I first had to create it by typing out a deck of punch cards. This was done at a special station that was the size of a piece of office furniture. Each card could contain a single line of code. If you made a mistake, you had to toss the card and start anew. When you had your deck, you would feed it into a specialized card reader that would transfer the program to the mainframe and create a “batch job” – meaning my program would then run sometime during the middle of the night. I would get my output the next morning, if I was lucky. If I made any typing errors on my cards, the printout would be a cryptic set of error messages, and I would have to fix the errors and try again the next night. Finding a meager printout was akin to getting a thin college rejection letter in the mail – the acceptances came in thick envelopes. Am I dating myself enough here?

Today’s developers are probably laughing at this situation. They have coding environments that immediately flag syntax errors, tools that dynamically stop embedded malware from being run, and all sorts of other fancy tricks. If they have to wait more than 10 milliseconds for this information, they complain about how slow their platform is. Code is put into production in a matter of moments, rather than the months we had to endure back in the day.

Even though I roamed around the three downtown office towers that housed our company’s workers, I don’t remember ever setting foot in our Palais d’mainframe. However, over the years I have been to my share of data centers across the world. One visit involved turning off a mainframe for Edison Electric Institute in Washington DC in 1993; I wrote about the experience and how Novell NetWare-based apps replaced many of its functions. Another involved moving a data center from a basement (which would periodically flood) into a purpose-built building next door, in 2007. That data center housed souped-up microprocessor-based servers, the beginnings of the massive CPU collections that are used in today’s z Series mainframes, BTW.

Mainframes had all sorts of IBM gear that required care and feeding, and lots of knowledge that I used to have at my fingertips: I knew my way around the proprietary protocols called Systems Network Architecture and the proprietary networking protocols called Token Ring, for example. And let’s not forget that they ran programs written in COBOL, and used all sorts of other hardware to connect things together with proprietary bus-and-tag cables. When I was making the transition to PC Week in the 1980s, IBM was making the (eventually failed) transition to peer-to-peer mainframe networking with a bunch of proprietary products. Are you seeing a trend here?

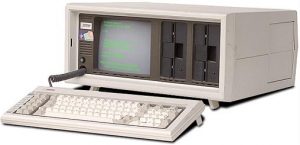

Speaking of the IBM PC, it was the first product from IBM that was built with parts made by others, rather than its own stuff. That was a good decision, and the PC was successful because you could add a graphics card (the first PCs just did text, and monochrome at that) or extra memory or a modem. Or an adapter card that connected to another cabling scheme (coax) that turned the PC into a mainframe terminal. Yes, this was before wireless networks became useful, and you can see why.

Now IBM mainframes (there are some 10,000 of them still in the wild) come with the ability to run Linux and operate across TCP/IP networks, and about a third of them are running Linux as their main OS. This is akin to having one foot in the world of distributed cloud computing, and one foot back in the dinosaur era. So let’s talk about my client VirtualZ and where they come into this picture.

They created software – mainframe software – that enabled distributed applications to access mainframe data sets, using OpenAPI protocols and database connectors. The data stays put on the mainframe but is available to applications that we know and love such as Salesforce and Tableau. It is a terrific idea, just like the original IBM PC in that it supports open systems. This makes the mainframe just another cloud-connected computer, and shows that the mainframe is still an exciting and powerful way to go.

Until VirtualZ came along, developers who wanted access to mainframe data had to go through all sorts of contortions to get it, much like what we had to do in the 1980s and 1990s for that matter. Companies like Snowflake and Fivetran made very successful businesses out of doing these “extract, transform and load” operations into what are now called data warehouses. VirtualZ eliminates these steps, and your data is available in real time, because it never leaves the cozy comfort of the mainframe, with all of its minions and backups and redundant hardware. You don’t have to build a separate warehouse in the cloud, because your mainframe is now cloud-accessible all the time.

I think VirtualZ’s software will usher in a new mainframe era, one that puts us even further from the punch card era. It also shows the power and persistence of the mainframe, and how IBM had the right computer for today’s enterprise data, just not the right context when it was invented. For Big Iron to succeed in today’s digital world, it needs a lot of help from little iron.