I spent some time with Shaun St. Hill to discuss my passion for nonprofits and what they can do to use multi-factor authentication and other better infosec methods to improve their cyber hygiene. You can listen to the 15-minute recording here:

Category Archives: security

In search of better browser privacy options

A new browser privacy study by Professor Doug Leith, the Computer Science department chair at Trinity College, is worth reading carefully. Leith instruments the Mac versions of six popular browsers (Chrome, Firefox, Safari, Edge, Yandex and Brave) to see what happens when they “phone home.” All six make non-obvious connections to various backend servers, with Brave connecting the least and Edge and Yandex (a Russian language browser) the most. How they connect and what information they transmit is worth understanding, particularly if you are paranoid about your privacy and want to know the details.

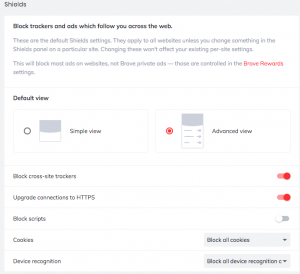

If you aren’t familiar with Brave, it is built on the same Chromium engine that Google uses for its browser, but it does have a more logical grouping of privacy settings that can be found under a “Shields” tab as you can see in this screenshot. It also comes with several extensions for an Ethereum wallet and support for Chromecast and Tor. This is why Brave is marketed as a privacy-enhanced browser.

Brave scored the best in Leith’s tests. It didn’t track originating IP addresses and didn’t share any details of its browsing history. The others tagged data with identifiers that could be linked to an end user’s computer, along with sharing browsing history with backend servers. Edge and Yandex also saved data that persisted across a fresh browser installation on the same computer. That isn’t nice, because this correlated data could be used to link different apps running on that computer to build an overall user profile.

One problem is the search bar autocomplete function. This is a big time saver for users, but it is also a big privacy invasion, depending on what data is transmitted back to the vendor’s own servers. Safari generated 32 requests to search servers, and these requests persist across browser restarts. Leith proposed adding a function to both Chrome and Firefox to disable this autocomplete function upon startup for those who have privacy concerns. He has also proposed to Apple that Safari’s default start page be reconfigured and that an option be added to avoid unnecessary network connections. He has not heard back from any of the vendors on his suggestions.

So if you are a privacy-concerned user, what are your options? First, you should probably audit your browser extensions and get rid of ones that you don’t use or that have security issues, as Brian Krebs wrote recently. Second, if you feel like switching browsers, you could experiment with Brave or Authentic8’s Silo browser or Dooble. I reviewed two of them many years ago; here is a more updated review on some other alternative browsers done by the folks at ProtonMail.

If you want to stick with your current browser, you could depend on your laptop vendor’s privacy additions, such as what HP provides. However, those periodically crash and don’t deliver the best experience. I am not picking on HP; it is just what I currently use, and perhaps other vendors may have more reliable privacy add-ons. You could also run a VPN all the time to protect your IP address, but you will still have issues with the leaked backend collections. And if you are using a mobile device, there is Jumbo, which helps you assemble a better privacy profile. What Jumbo illustrates, though, is that privacy shouldn’t be this hard. You shouldn’t have to track down numerous menus scattered across your desktop or mobile device.

Sadly, we still have a lot of room to improve our browser privacy.

RSA Blog: The Tried and True Past Cybersecurity Practices Still Relevant Today

Too often we focus on the new and latest infosec darling. But many times, the tried and true is still relevant.

I was thinking about this when a friend recently sent me a copy of Bruce Schneier’s book Beyond Fear, which was published in 2003. Schneier has been around the infosec community for decades: he has written more than a dozen books and has his own blog that publishes interesting links to security-related events, strategies and failures.

His 2003 book contains a surprisingly cogent and relevant series of suggestions that still resonate today. I spent some time re-reading it, and want to share with you what we can learn from the past and how many infosec tropes are still valid after more than 15 years.

At the core of Schneier’s book is a five-point assessment tool used to analyze and evaluate any security initiative – from bank robbers to international terrorism to protecting digital data. You need to answer these five questions:

- What assets are you trying to protect?

- What are the risks to those assets?

- How well will the proposed security solution mitigate these risks?

- What other problems will this solution create?

- What are the costs and trade-offs imposed?

You’ll notice that this set of questions bears a remarkable resemblance to the IDEA framework that RSA CTO Dr. Zulfikar Ramzan presented during a keynote he gave several years ago. IDEA stands for creating innovative, distinctive end-to-end systems with successful assumptions. Well, actually Ramzan had a lot more to say about his IDEA but you get the point: you have to zoom back a bit, get some perspective, and see how your security initiative fits into your existing infrastructure and whether or not it will help or hurt the overall integrity and security.

Part of the problem is, as Schneier says, that “security is a binary system, either it works or it doesn’t. But it doesn’t necessarily fail in its entirety or all at once.” Solving these hard failures is at the core of designing a better security solution.

We often hear that the biggest weakness of any security system is the user itself. But Schneier makes a related point: “More important than any security claims are the credentials of the people making those claims. No single person can comprehensively evaluate the effectiveness of a security countermeasure.” We tend to forget about this when proposing some new security tech, and it is worth the reminder because often these new measures are too complex. Schneier tells us “No security countermeasure is perfect, unlimited in its capabilities and completely impervious to attack. Security has to be an ongoing process.” That means you need to periodically audit and re-evaluate your solutions to ensure that they are as effective as you originally proposed.

This brings up another human-related issue. “Knowledge, experience and familiarity all matter. When a security event occurs, it is important that those who have to respond to the attack know what they have to do because they’ve done it again and again, not because they read it in a manual five years ago.” This highlights the importance of training, and disaster and penetration planning exercises. Today we call this resiliency and apply strategies broadly across the enterprise, as well as specifically to cybersecurity practices. Managing these trusted relationships, as I wrote about in an earlier RSA blog, can be difficult.

Often, we tend to forget what happens when security systems fail. As Schneier says early on: “Good security systems are designed in anticipation of possible failure.” He uses the example of road signs that have special break-away poles in case someone hits the sign, or where modern cars have crumple zones that will absorb impacts upon collision and protect passengers. He also presents the counterexample of the German Enigma coding machine: it was thought to be unbreakable, “so the Germans never believed the British were reading their encrypted messages.” We all know how that worked out.

The ideal security solution needs to have elements of prevention, detection and response. These three systems need to work together because they complement each other. “An ounce of prevention may be worth a pound of cure, but only if you are absolutely sure beforehand where that ounce of prevention should be applied.”

One of the things he points out is that “forensics and recovery are almost always in opposition. After a crime, you can either clean up the mess and get back to normal, or you can preserve the crime scene for collecting the evidence. You can’t do both.” This is a problem for computer attacks because system admins can destroy the evidence of the attack in their rush to bring everything back online. It is even more true today, especially as we have more of our systems online and Internet-accessible.

Finally, he mentions that “secrets are hard to keep and hard to generate, transfer and destroy safely.” He points out the king who builds a secret escape tunnel from his castle. There always will be someone who knows about the tunnel’s existence. If you are a CEO and not a king, you can’t rely on killing everyone who knows the secret to solve your security problems. RSA often talks about ways to manage digital risk, such as this report that came out last September. One thing is clear: there is no time like the present when you should be thinking about how you protect your corporate secrets and what happens when the personnel who are involved in this protection leave your company.

Steer clear of Plaid for your small business accounting

If you are looking for a small business accounting software service, don’t consider WaveApps, Sage or the site And.co. All of them use the banking connector Plaid.com and have a major shortcoming. Let me explain my journey.

When I first began my freelancing business in 1992 (can it be?), I used the best accounting program at that time: QuickBooks for DOS. It was simple, it was easy to set up, and it did the job. I stayed with QB when I went to Windows and then to Mac, upgrading every few years, either when my accountant told me that they couldn’t use my aging software or when Intuit told me that I had to upgrade.

I use my accounting software for three things:

- To keep track of my expenses and payments, entering information once or twice a month to stay on top of things.

- To produce invoices and to accept credit card payments from my clients

- To produce reports once a year for my accountant to produce my business tax filings

That isn’t a lot of requirements, to be sure. Naturally, over time some of them have changed: when I first began, my accountant directly read my QB file. Now she just wants a few year-end statements, which almost every accounting tool can produce. Also, enabling credit card payments isn’t the big deal that it once was: there are so many other solutions that don’t have to originate from the accounting software tool itself (such as Square, for example).

One thing that hasn’t changed is my goal: having to spend as little time as possible using the software, because this means that I have more time to spend actually writing and doing the work that I get paid to do.

But installing software on my desktop is so last century. Eventually, Intuit stopped making physical software and every QB version is now in the cloud. Their solutions start at $25/month, discounted for the first few months. Actually, that isn’t completely accurate: they also have a “self-employed” version for $15/month, but it has so few features that you can’t really use it effectively. For example, it can’t produce those year-end reports that I need for my accountant.

Several years ago, I found WaveApps. It was free, it had just enough features to make it useful for me (see above) and did I mention it was free? I started using it and was generally happy. One of the nice features was how it connected to my corporate checking account at Bank of America and imported all my transactions, which made it easier to prepare my books and track my payments.

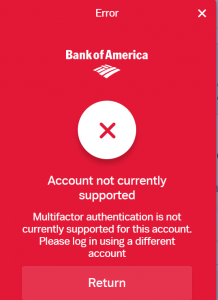

A few weeks ago, Wave decided to “upgrade” its banking connector to Plaid. And that broke my BofA connection. The problem is that I have set up my banking login to use SMS text multi-factor authentication (MFA). I wish BofA offered something better, but that is what they have (they call it “extra security”), and so I use it. Plaid doesn’t support my bank account’s “extra security” MFA setting.

This begins The 2020 Accounting Software Evaluation Project. It deserves the capital letters because it meant that I had to start looking around, reading software reviews, signing up for the software service providers, and checking them out. I very quickly found that Sage and And.co (I do hate their domain name) also use Plaid as a banking connector, so I wasn’t getting very far by switching to them. Meanwhile, here we are into February and I still haven’t decided on what to do with my accounting software.

I took time to email the PR person at Plaid, who initially told me that the BofA MFA issue was a bug and they were working on a fix. That was a lie, or perhaps a misunderstanding. Eventually, this is what I got from them: “Plaid supports the standard MFA for Bank of America and most of the other 11,000 institutions on the Plaid network, but we do not currently support BofA’s perpetual MFA setting.” This is also not true. BofA only offers a single MFA method: sending SMS texts to your phone. I wish they offered a smartphone authenticator app, but they don’t.

So my dilemma is this: should I eschew security for convenience? I can turn off the MFA and get my accounting data imported, and then will have to turn it back on. I could try to switch accounting providers to something else — I haven’t tried all of the small business providers, but I have a feeling that Plaid has them as customers too. I could find another bank that has better security and perhaps works with Plaid, but that would mean changing a lot of my bill paying data too.

No good choices, to be sure. I guess I will just stick with Wave for the time being, but I am not happy about it. Secure users shouldn’t use plaid.com.

A field guide to Iran’s hacking groups

Iran has been in the news a lot lately. And there have been some excellent analyses of the various hacking groups that are sponsored by the Iranian state government. Most of us know that Iran has hacked numerous businesses over the years, including numerous banks, the Bowman Dam in New York in 2013, the Las Vegas Sands hotel in 2014, various universities and government agencies and even UNICEF. When you review all the data, you begin to see the extent of its activities. It is hard to keep all the group names distinct, what with names like Static Kitten, Charming Kitten, Clever Kitten and Flying Kitten. (This summary from Security Boulevard is a good place to start and has links to all the various felines.) Check Point has found 35 different weekly victims, and their latest analysis shows that 17% of them are Americans. Half of the overall targets are government agencies and financial companies.

To get a more detailed analysis of the various groups, Cyberint Research has published this 30-page document that describes the tactics, techniques and procedures used by ten such groups, matching them to the MITRE ATT&CK threat and group IDs. The group IDs are useful because different security researchers use different descriptive names (the Kitten ones come from CrowdStrike, for example).

What comes out of reading this document is pretty depressing, because the scale of Iran’s efforts is enormous. They are a very determined adversary, and they have taken aim at just about everyone over the past decade. Part of the problem is that there are many private hackers who are taking credit for some of the attacks, such as the recent defacement of the Federal Depository Library Program, although “hacker culture in Iran is gradually being forced into submission by the regime through increasingly controlled infrastructure and internet laws, and recruitment to state-sponsored cyber warfare groups,” according to a report from Intsights.

And a recent news report in the Jerusalem Post says that Iranian hacking is getting increasingly sophisticated and is broadening its targets. The story cites two former Israeli government cyber agents who claim Iran is now using Chinese hacking tools in its attacks, which can be useful if Iran wants to confuse the attack origins. According to these sources, Israel gets more than 8M total cyber attacks daily.

To add insult to injury, other attackers are leveraging these threats by using them as a phishing lure, sending a message that pretends to be from Microsoft and asking you to login with your credentials. (A word to the wise: don’t.)

The US National Cyber Awareness System through CERT issued this alert last week. They recommend that you have your incident response plan in order and have the key roles delineated and rehearsed so you can stem any potential losses. Lotem Finkelstein, head of Check Point’s cyber intelligence group, agrees: “You should ensure that MFA is enabled and you brush up your incident response plans.” Other suggestions from CERT include limiting PowerShell usage and logging its activities, making sure everything is up to date on patches, and ensuring that your network monitoring is doing its job.

Digital Shadows, a security consultancy, also has plenty of other practical suggestions in this blog post for improving your infosec. They recommend keeping lines of communication open and helping your management understand the implications and risks involved. You should also have a plan for potential DDoS attacks and work through at least a tabletop exercise, if not a complete fire drill, to see where you are weakest.

Iran is a formidable foe. If they haven’t been on your radar before now, take a moment to review some of these documents and understand what you are up against.

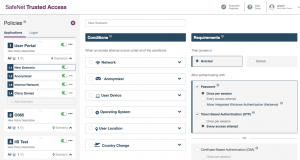

Review of Thales’ SafeNet Trusted Access

Thales SafeNet Trusted Access (STA) offers a compelling blend of security solutions that bridge the MFA, SSO and access management worlds in a single, well-integrated package. STA does this by offering policy-based access controls and SSO with very strong authentication features. These policies are flexible and powerful enough that you can address a broad range of access scenarios.

Because STA covers multiple security workflows, there are several places that it can fit into your overall data protection needs. Part of your own motivation for using this product will depend on the particular direction that you are coming from. What you need STA to do will depend on what you have already purchased and where your existing security tools are weakest.

If you presently use another SSO tool, or if you aren’t happy with your existing identity management product, you might examine whether they can support or integrate with STA and use it as your principal identity provider. This will give you greater automation scope and move towards better MFA coverage for your consolidated logins.

If delivering MFA is your primary focus for purchasing a new identity product, STA should be on your short list of vendors. If you are rolling out MFA protection as part of a larger effort to secure your users and logins, then things get more interesting and the case for using STA becomes more compelling. For example, it can handle a variety of application authentication situations and be granular enough to deploy these methods for particular user collections and circumstances. Many older IAM products bolted on their MFA methods with cumbersome or quirky integration methods or required you to purchase separate add-on products for these features. STA has had this flexibility built in from the get-go and has a well-integrated set of MFA solutions.

If you presently use another vendor’s authentication app or have a collection of hardware tokens that you are trying to migrate away from, you might want to examine whether STA’s MobilePass+ offers improvements to the user workflows that could increase MFA coverage across your application portfolio.

Thales SafeNet Trusted Access is available at this link. Pricing starts at $3.50/user/month, which includes access management, SSO, authentication tokens and services support. A premium subscription, which adds PKI MFA support, is also available.

You can read my full report here. And here is my screencast video that points out the major product features:

Medium One-Zero: How to Totally Secure Your Smartphone

The more we use our smartphones, the more we open ourselves up to the possibility that the data stored on them will be hacked. The bad guys are getting better and better at finding ways into our phones through a combination of subtle malware and exploits. I review some of the more recent news stories about cell phone security, which should be enough to worry even the least paranoid among us. Then I describe the loss of privacy and how hackers can gain access to our accounts through these exploits. Finally, I provide a few practical suggestions on how you can be more vigilant and improve your infosec posture. You can read the article on Medium’s OneZero site.

RSA blog: Trust has become a non-renewable resource: why you need a chief trust officer

Lately it seems like trust is in short supply with tech-oriented businesses. It certainly doesn’t help that there have been a recent series of major breaches among security tech vendors. And the discussions about various social networks accepting political advertising haven’t exactly helped matters either. We could be witnessing a crisis of confidence in our industry, and CISOs may be forced to join the front lines of this fight.

One way to get ahead of the issue might be to anoint a Chief Trust Officer. The genesis of the title is to recognize that the role of the CISO is evolving. Corporations need a manager who is focused less on talking about technical threats and more on engendering trust in the business’ systems. The CTrO, as it is abbreviated, should assure stakeholders that they have the right set of tools and systems in place.

This isn’t exactly a new idea: Tom Patterson and Bob West were appointed to that position at Unisys and CipherCloud respectively more than five years ago, and Bill Burns had held his position at Informatica for more than three years. Burns was originally their CISO and was given the job to increase transparency and improve overall security and communications. Still, the title hasn’t exactly caught on: contemporary searches on job boards such as Glassdoor and Indeed find few open positions advertised. Perhaps finding a CTrO is more of an internal promotion than hiring from outside the organization. It is interesting that all the instances cited above are from the tech universe. Does that say we in IT are quicker to recognize the problem, or just that we have given it lip service?

Tom Patterson echoes a phrase that was often used by Ronald Reagan: “trust but verify.” It is a good maxim for any CTrO to keep in mind.

I spoke to Drummond Reed, who has been for three years now an actual CTrO for the security startup Evernym. “We choose that title very consciously because many companies already have Chief Security Officers, Chief Identity Officers and Chief Privacy Officers.” But at the core of all three titles is “to build and support trust. For a company like ours, which is in the business of helping businesses and individuals achieve trust through self-sovereign identity and verifiable digital credentials, it made sense to consolidate them all into a Chief Trust Officer.”

Speaking to my comment about paying lip service, Reed makes an important point: the title can’t be just an empty promise, but needs to carry some actual authority, and must be at a level that can rise above just another technology manager. The CTrO needs to understand the nature of the business and legal rules and policies that a company will follow to achieve trust with its customers, partners, employees, and other stakeholders. It is more about “elevating the importance of identity, security, and privacy within the context of an enterprise whose business really depends on trust.”

Trust is something that RSA’s President Rohit Ghai speaks often about. Corporations should “enable trust; not eradicate threats. Enable digital wellness; not eradicate digital illness.” I think this is also a good thing for CTrOs to keep in mind as they go about their daily work lives. Ghai talks about trust as the inverse of risk: “we can enhance trust by delivering value and reducing risk,” and by that he means not just managing new digital risks, but all kinds of risks.

In addition to hiring a CTrO, perhaps it is time we also focus more on enabling and promoting trust. For that I have a suggestion: let’s start treating digital trust as a non-renewable resource. Just like the energy conservationists promote moving to more renewable energy sources, we have to do the same with promoting better trust-maintaining technologies. These include better authentication, better red team defensive strategies, and better network governance. You have seen me write about these topics in other columns over the past couple of years, but perhaps they are more compelling in this context.

How to prevent a data breach, lessons learned from the infosec vendors themselves

This fall there have been data breaches at the internal networks of several major security vendors. I had two initial thoughts when I first started hearing about these breaches: First, if the infosec vendors can’t keep their houses in order, how can ordinary individuals or non-tech companies stand a chance? And then I thought it would be useful to examine these breaches as powerful lessons to be avoided by the rest of us. You see, the actual mechanics of what happened during the average breach aren’t usually well documented. Even the most transparent businesses with their breach notifications don’t really get down into the weeds. I studied these breaches and have come away with some recommendations for your own infosec practices.

The breaches are:

- NordVPN, which experienced a breach in one of their servers in their Finland datacenter,

- Avast, which had an unauthorized access to an unused VPN account,

- Trend Micro, which had a malicious insider selling customer data and

- ZoneAlarm, which was penetrated through a third-party software forum.

You will notice a few common trends from these breaches. First, there is the delay in identifying the breach and then in notifying customers. It took five weeks before NordVPN was notified by its datacenter provider, and the company found out that the intrusion was part of an attack on the provider’s other VPN vendor customers. “The datacenter deleted the user accounts that the intruder had exploited rather than notify us.” It took Avast months to identify their breach. Initially, IT staffers dismissed the unauthorized access as a false positive and ignored the logged entry. Months later it was re-examined and determined to be malicious. It took two months for Trend to track down exactly what happened before the employee was identified and then terminated.

Finally, about 4,000 users on a support forum have been notified by ZoneAlarm about a data breach. Data compromised includes names, email addresses, hashed passwords, and birthdates. The issue was outdated forum software code that wasn’t patched to current versions. Their breach happened at least several weeks before being noticed, and emails were sent out to affected users within 24 hours of when they figured the situation out.

These delays are an issue for anyone. Remember, the EU, through GDPR, gives companies 72 hours to notify regulators. These regulators have issued some pretty big fines for those companies who don’t meet this deadline, such as British Airways.
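To make the 72-hour window concrete, here is a minimal Python sketch that computes the latest notification time from the moment a company becomes aware of a breach. The function names and the timestamps are hypothetical and chosen only for illustration; none of them come from the vendors’ actual incident timelines.

```python
from datetime import datetime, timedelta, timezone

# The 72-hour window for notifying regulators, as cited above.
NOTIFICATION_WINDOW = timedelta(hours=72)


def notification_deadline(became_aware_at: datetime) -> datetime:
    """Return the latest time at which regulators can be notified."""
    return became_aware_at + NOTIFICATION_WINDOW


def hours_remaining(became_aware_at: datetime, now: datetime) -> float:
    """Hours left before the deadline; a negative value means it has passed."""
    remaining = notification_deadline(became_aware_at) - now
    return remaining.total_seconds() / 3600


if __name__ == "__main__":
    # Hypothetical timestamps, for illustration only.
    aware = datetime(2019, 10, 1, 9, 0, tzinfo=timezone.utc)
    now = datetime(2019, 10, 2, 9, 0, tzinfo=timezone.utc)
    print(notification_deadline(aware).isoformat())
    print(hours_remaining(aware, now))
```

The clock in this sketch starts when the breach is identified, not when it happened, which is why the weeks or months of detection delay described above matter so much: by the time anyone starts counting, the intruders have already had plenty of time.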

Second is a question of relative transparency. Most of the vendors were very transparent about what happened and when. You’ll notice that for three out of the four situations I have linked to the actual vendor’s blog posts that describe the breach and what they have done about it. The sole exception is ZoneAlarm, which has not posted any details publicly. The company is owned by Check Point, and while they have been very forthcoming with emails to reporters, that is still not the same as posting something online for the world to see.

Third is the issue that insider threats are real. Employees will always be the weakest link in any security strategy. With Trend, customer data (including telephone numbers but no payment data) was divulged by a rogue employee who sold the data from 68,000 customers in a support database to a criminal third party. This can happen to anyone, but you should contemplate how to make a leak such as this more difficult.

Finally, recovery, remediation and repair aren’t easy, even for the tech vendors that know what they are doing (at least most of the time). Part of the problem is first figuring out what actual harm was done, what the intruders did and what gear has to be replaced. Avast’s blog post is the most instructive of the three and worth reading carefully. They have embarked on a major infrastructure replacement, as their CISO told me in a separate interview here. For example, they found that some of their TLS keys were obtained but not used. Avast then revoked and reissued various encryption certificates and pushed out updates of its various software products to ensure that they weren’t polluted or compromised by the attackers. Both Avast and NordVPN also launched massive internal audits to track what happened and to ensure that no other parts of their computing infrastructure were affected.

But part of the problem is that our computing infrastructures have become extremely complex. Even our own personal computer applications are impossible to navigate (just try setting up your Facebook privacy options in a single sitting). How many apps does the average person use these days? Can you honestly tell me that none of the cloud logins you haven’t used since 2010 has a breached password? Now expand that to your average small business that allows its employees to bring their personal phones to work and their company laptops home and you have a nightmare waiting to happen: all it takes is one of your kids clicking on some dodgy link on your laptop, or you downloading an app to your phone, and it is game over. And as a friend of mine who uses a Mac found out recently, a short session on an open Wi-Fi network can infect your computer. (Macs aren’t immune, despite popular folklore.)

So I will leave you with a few words of hope. Study these breaches and use them as lessons to improve your own infosec, both corporate and personal. Treat all third-party sources of technology as if they are your own and ask these vendors and suppliers the hard questions about their security posture. Make sure your business has a solid notification plan in place and test it regularly as part of your normal disaster recovery processes. Trust nothing at face value, and if your tech suppliers don’t measure up find new ones that will. And as you have heard me say before, tighten up all your own logins with smartphone-based authentication apps and password managers, and use a VPN when you are on a public network.

Further misadventures in fake news

The term fake news is used by many but misunderstood. It has gained notoriety as a term of derision from political figures about mainstream media outlets. But when you look closer, you can see there are many other forms that are much more subtle and far more dangerous. The public relations firm Ogilvy wrote about several different types of fake news (satire, misinformation, sloppy reporting and purposely deceptive).

But that really doesn’t help matters, especially in the modern era of state-sponsored fake news. We used to call this propaganda back when I was growing up. To better understand this modern context, I suggest you examine two new reports that present a more deliberate analysis and discussion:

- The first is by Renee DiResta and Shelby Grossman for Stanford University’s Internet Observatory project called Potemkin Pages and Personas, Assessing GRU Online Operations. It documents two methods of Russia’s intelligence agency commonly called the GRU, narrative laundering and hack-and-leaking false data. I’ll get into these methods in a moment. For those of you that don’t know the reference, Potemkin means a fake village that was built in the late 1700s to impress a Russian monarch who would pass by a region and be fooled into thinking there were actual people living there. It was a stage set with facades and actors dressed as inhabitants.

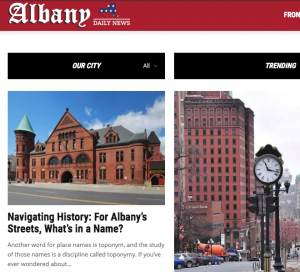

- The second report is titled Simulated media assets: local news from Vlad Shevtsov, a Russian researcher who has investigated several seemingly legit local news sites in Albany, New York (shown below) and Edmonton, Alberta. These sites constructed their news pages out of evergreen articles and other service pieces that have attracted millions of page views, according to analytics. Yet they have curious characteristics, such as being viewed almost completely from mobile sources outside their local geographic area.

Taken together, this shows a more subtle trend towards how “news” can be manipulated and shaped by government spies and criminals. Last month I wrote about Facebook and disinformation-based political campaigns. Since then Twitter announced they were ending all political advertising. But the focus on fake news in the political sphere is a distraction. What we should understand is that the entire notion of how news is being created and consumed is undergoing a major transition. It means we have to be a lot more skeptical of what news items are being shared in our social feeds and how we obtain facts. Move over Snopes.com, we need a completely new set of tools to vet the truth.

Let’s first look at the Shevtsov report on the criminal-based news sites, for that is really the only way to think about them. These are just digital Potemkin villages: they look like real local news sites, but are just containers to be used by bots to generate clicks and ad revenue. Buzzfeed’s Craig Silverman provides a larger context in his analysis here. These sites gather traffic quickly, stick around for a year or so, and then fade away, after generating millions of dollars in ad revenues. They take advantage of legitimate ad serving operations, including Google’s AdSense, and quirks in the organic search algorithms that feed them traffic.

This is a more insidious problem than seeing a couple of misleading articles in your social news feed for one reason: the operators of these sites aren’t trying to make some political statement. They just want to make money. They aren’t trying to fool real readers: indeed, these sites probably have few actual carbon life forms that are sitting at keyboards.

The second report from Stanford is also chilling. It documents the efforts of the GRU to misinform and mislead, using two methods.

- Narrative laundering. This makes something into a fact by repetition through legit-sounding news sources that are also constructs of the GRU operatives. This has gotten more sophisticated since another Russian effort led by the Internet Research Agency (IRA) was documented in the Mueller report. That entity (which was also state-sponsored) specialized in launching social media sock puppets and creating avatars and fake accounts. The methods used by the GRU involved creating Facebook pages that look like think tanks and other media outlets. These “provided a home for original content on conflicts and politics around the world and a primary affiliation for sock puppet personas.” In essence, what the GRU is doing is “laundering” their puppets through six affiliated media front pages. The researchers identified Inside Syria Media Center, Crna Gora News Agency, Nbenegroup.com, The Informer, World News Observer, and Victory for Peace as being run by the GRU, where their posts would be subsequently picked up by lazy or uncritical news sites.

What is interesting though is that the GRU wasn’t very thorough about creating these pages. Most of the original Facebook posts had no engagements whatsoever. “The GRU appears not to have done even the bare minimum to achieve peer-to-peer virality, with the exception of some Twitter networking, despite its sustained presence on Facebook. However, the campaigns were successful at placing stories from multiple fake personas throughout the alternative media ecosystem.” A good example of how the researchers figured all this out was how they tracked down who really was behind the Jelena Rakocevic/Jelena Rakcevic persona. “She” is really a fake operative that purports to be a journalist with bylines on various digital news sites. In real life, she is a biology professor in Montenegro with a listed phone number for a Mercedes dealership.

- Hack-and-leak capabilities. We are now sadly familiar with the various leak sites that have become popular across the interwebs. These benefitted from some narrative laundering as well. The GRU got Wikileaks and various mainstream US media to pick up on their stories, making their operations more effective. What is interesting about the GRU methods is that they differed from those attributed to the IRA: “They used a more modern form of memetic propaganda—concise messaging, visuals with high virality potential, and provocative, edgy humor—rather than the narrative propaganda (long-form persuasive essays and geopolitical analysis) that is most prevalent in the GRU material.”

So what are you gonna do to become more critical? Librarians have been on the front lines of vetting fake news for years. Lyena Chavez of Merrimack College has four easy “tells” that she often sees:

- The facts aren’t verifiable from the alleged sources quoted.

- The story isn’t published in other credible news sources, although we have seen how the GRU can launder the story and make it more credible.

- The author doesn’t have appropriate credentials or experience.

- The story has an emotional appeal, rather than logic.
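If you want to turn those four tells into a habit, here is a minimal Python sketch of a checklist. The class and field names are hypothetical and the answers still have to come from your own reading of the story; the code only keeps track of which tells apply.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class StoryCheck:
    """Answers to the four tells for one story (all names are illustrative)."""
    facts_verifiable: bool           # can the facts be traced to the quoted sources?
    in_other_credible_sources: bool  # has a credible outlet also published it?
    author_has_credentials: bool     # does the author have relevant experience?
    relies_on_emotion: bool          # does it appeal to emotion rather than logic?


def red_flags(check: StoryCheck) -> List[str]:
    """Return the tells that apply to this story."""
    flags = []
    if not check.facts_verifiable:
        flags.append("facts aren't verifiable from the quoted sources")
    if not check.in_other_credible_sources:
        flags.append("not published in other credible news sources")
    if not check.author_has_credentials:
        flags.append("author lacks appropriate credentials or experience")
    if check.relies_on_emotion:
        flags.append("emotional appeal rather than logic")
    return flags


if __name__ == "__main__":
    story = StoryCheck(
        facts_verifiable=False,
        in_other_credible_sources=False,
        author_has_credentials=True,
        relies_on_emotion=True,
    )
    for flag in red_flags(story):
        print("Red flag:", flag)
```

An empty list doesn’t prove a story is true. As noted above, a laundered story can pass the second test, so treat this as a prompt for more digging and not a verdict.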

One document that is useful (and probably a lot more work than you signed up for) is this collection from her colleague at Merrimack, Professor Melissa Zimdars. She has tips and various open source methods and sites that can help you in your own news vetting. If you want more, take a look at an entire curriculum that the Stony Brook J-school has assembled.

Finally, here are some tools from Buzzfeed reporter Jane Lytvynenko, who has collected them to vet her own stories.

- Reverse image search: Tineye.com

- Image metadata reader: regex.info/exif.cgi

- Image forensics: https://fotoforensics.com/

- Video verification browser plug-in: InVid

- Twitter analytics: foller.me

- Facebook search Github project: github.io/fb-search

- Website stats: https://spyonweb.com/

- Content monitoring: BuzzSumo and Crowdtangle

- Reverse AdSense search from DNSlytics

- Whois data (to verify domain ownership)
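For the last item, you don’t need a special tool: WHOIS is a plain-text protocol over TCP port 43. Here is a minimal Python sketch, not a polished client. The function names are my own, the choice of whois.iana.org as a starting server is an assumption (it generally answers with a referral to the registry that holds the record), and response formats vary from one registry to the next, so the parser only does a loose “key: value” split.

```python
import socket
from typing import Dict, List


def whois_query(domain: str, server: str = "whois.iana.org",
                timeout: float = 10.0) -> str:
    """Send one WHOIS query: connect to port 43, send the name, read until close."""
    with socket.create_connection((server, 43), timeout=timeout) as conn:
        conn.sendall(domain.encode("ascii") + b"\r\n")
        chunks = []
        while True:
            data = conn.recv(4096)
            if not data:
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")


def parse_fields(response: str) -> Dict[str, List[str]]:
    """Loosely split 'key: value' lines, skipping comments and blank lines."""
    fields: Dict[str, List[str]] = {}
    for line in response.splitlines():
        line = line.strip()
        if not line or line.startswith(("%", "#")) or ":" not in line:
            continue
        key, _, value = line.partition(":")
        value = value.strip()
        if value:
            fields.setdefault(key.strip().lower(), []).append(value)
    return fields


if __name__ == "__main__":
    # Needs network access; field names differ between registries.
    record = parse_fields(whois_query("example.com"))
    for key in ("refer", "created", "changed"):
        print(key, "->", record.get(key))
```

If the answer includes a referral, repeat the query against that server to get the registration record itself. Many records are now privacy-redacted, so the creation date and registrar are often more telling than the owner’s name.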