Like many of you, I love watching TED Talks. The conference, which covers technology, entertainment and design, was founded by Ricky Wurman in 1984 and has spawned a cottage industry of recorded videos from some of the greatest speakers around the world. I was fortunate to attend one of the TED events in its early days, when it was still an annual event, and got to meet Wurman when he was producing his Access city guides, an interesting mix of travelogue and design.

If you are interested in watching more TED videos, here is my own very idiosyncratic guide to some of them that have more to do with cybersecurity and general IT operations, along with some of the lessons that I learned from the various speakers. If you do get a chance to attend a local event, I would also encourage you to do so: you will meet some interesting people, both in the audience and on stage.

The TED Talks present a unique perspective on the past, and many of them resonate with current events and best practices in the cybersecurity world. So many times security professionals think they have found something new when it turns out to be something that has been around for many years. One of the benefits of watching the TED talks is that they paint a picture of the past, and sometimes the past is still very relevant to today’s situations.

- In this 2015 talk in London, Rodrigo Bijou reviews how governments need hackers to help fight the next series of cyberwars and fight terrorists.

One of the more current trends is phishers’ use of malware-laced images. A recent news article mentioned the technique and labeled it “HeatStroke.” This is one method the bad guys use to hide their code from easy detection by defenders and threat hunters. It turns out this technique isn’t so new and has been seen for years by security researchers. Bijou’s talk mentioned that years ago malware-injected images were part of ad-based clickjacking attacks. HeatStroke is just a new take on an old problem.

Bijou’s talk also references the Arab Spring that was happening around that time. One of the consequences of public protests, particularly in countries with totalitarian governments, is that the government can restrict communications by blocking overall Internet access. This is being done more frequently, and NetBlocks tracks such outages in countries all over the world, including Papua, Algeria, Ethiopia and Yemen. Bijou shows a now-famous photo of the Google public DNS address (8.8.8.8) that had been spray painted on a wall, in the hope that people would know what it means and use it to avoid the net blockade.

Since 2015, numerous public DNS services have been established, many of them free or low cost. Corporate IT managers should evaluate their DNS supplier for both performance and security: many of these services filter out bad URLs and phishing lures, for example. Consider switching after using a testing regimen similar to the one used in this blog post to find the technology that works best for you.
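As a rough illustration of what a resolver comparison could look like, here is a minimal sketch that times raw DNS lookups against several public resolvers. It is not the regimen from the blog post mentioned above. The function names are hypothetical, and any resolver addresses you pass in beyond the 8.8.8.8 address mentioned earlier are your own choice. It measures latency only and says nothing about how well a service filters malicious domains.

```python
import secrets
import socket
import statistics
import struct
import time


def build_query(hostname: str, query_id: int) -> bytes:
    """Build a minimal DNS query packet asking for the A record of hostname."""
    # Header: ID, flags (recursion desired), 1 question, no other records.
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    # QNAME: each label prefixed by its length, terminated by a zero byte.
    labels = hostname.rstrip(".").split(".")
    qname = b"".join(bytes([len(label)]) + label.encode("ascii") for label in labels)
    qname += b"\x00"
    # QTYPE=1 (A record), QCLASS=1 (Internet).
    return header + qname + struct.pack("!HH", 1, 1)


def time_resolver(resolver_ip: str, hostname: str, timeout: float = 2.0):
    """Return the round-trip time in milliseconds, or None on failure."""
    query_id = secrets.randbelow(65536)
    packet = build_query(hostname, query_id)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        start = time.perf_counter()
        try:
            sock.sendto(packet, (resolver_ip, 53))
            reply, _ = sock.recvfrom(512)
        except OSError:  # covers timeouts and unreachable networks
            return None
        elapsed = time.perf_counter() - start
    # Ignore replies that do not echo our query ID.
    if len(reply) < 2 or struct.unpack("!H", reply[:2])[0] != query_id:
        return None
    return elapsed * 1000.0


def compare_resolvers(resolvers: dict, hostnames: list) -> dict:
    """Return the median latency in ms per resolver (None if every lookup failed)."""
    results = {}
    for name, ip in resolvers.items():
        samples = []
        for hostname in hostnames:
            elapsed_ms = time_resolver(ip, hostname)
            if elapsed_ms is not None:
                samples.append(elapsed_ms)
        results[name] = statistics.median(samples) if samples else None
    return results
```

To try it, call something like `compare_resolvers({"Google": "8.8.8.8"}, ["example.com"])` with the resolvers and hostnames you care about. Repeat the run at different times of day, since caching and network conditions will skew any single measurement.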

- In this 2014 talk in Rio de Janeiro, Andy Yen describes how encryption works. Yen was one of the founders of ProtonMail, one of the leading encrypted email providers.

Email encryption is another technology that has been around a long time. This is one of the better talks, one that I have watched multiple times, and it has been viewed 1.7M times. We still have a love/hate relationship with email encryption: many companies still don’t use it to protect their communications, in spite of numerous improvements to products over the years. Encryption technologies continue to improve: in addition to ProtonMail, there are small business encryption solutions such as the Helm server that make it easier to deploy.

- Bruce Schneier gave his talk in 2004 at Penn State about the difference between the perception and reality of security.

Part of the staying power of his message is that we humans still process threats pretty much the same way we’ve done since we were living in caves: we tend to downplay common risks (such as finding food or driving to the store) and fear more spectacular ones (such as a plane crash or being eaten by a tiger). Schneier has been talking about “security theater” for many years now, such as the process by which we get screened at the airport. Part of understanding your own corporate theatrical enactment is evaluating how we tend to trade off security against money, time and convenience.

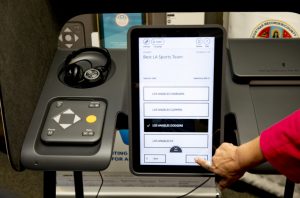

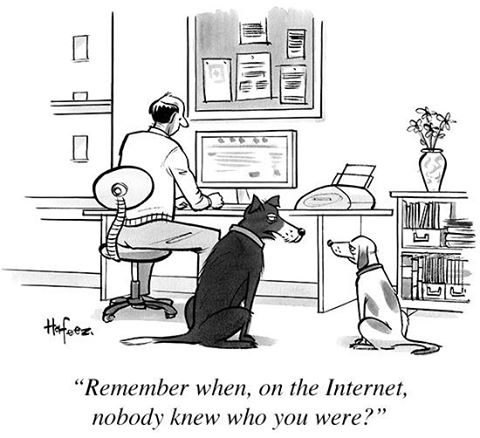

- Juan Enriquez’s talk in Long Beach in 2013 was about the rise of social networks and the hyperconnected world that we now live in.

He spoke about the effect of social media posts, calling them “digital tattoos.” The issue, then and now, is that all the information we provide about ourselves is easily assembled, often just by tapping into facial databases, and without our even knowing that our picture has been taken by someone nearby with a cell phone camera. “Warhol got it wrong,” he said, “now you are only anonymous for 15 minutes.” He feels that we are all threatened with immortality because of the digital tattoos that follow us around the Internet. It is a good warning about how we need to consider the privacy implications of our posts. Again, this isn’t anything new, but it does bear repeating, and it is a good prompt if your company still doesn’t have any formal policies and provisions in place for social media.

- This 2014 talk by Lorrie Faith Cranor at Carnegie Mellon University (CMU) is all about passwords.

Watching several TED talks makes it clear that passwords are still the bane of our existence, even with various technologies to improve how we use them and how to harden them against attacks. But you might be surprised to find out that once upon a time, college students only had to type a single digit for their passwords. This was at CMU, a leading computer science school and the location of one of the computer emergency response teams. The CMU policy was in effect up until 2009, when the school changed the minimum requirements to something a lot more complex. Researchers found that 80% of the CMU students reused passwords, and when asked to make them more complex merely added an “!” or an “@” symbol to them. Cranor also found that the password “strength meters” that are provided by websites to help you create stronger ones don’t really measure complexity accurately, with the meters being too soft on users as a whole.

A classic password meme is the XKCD cartoon that suggests stringing together four random common words to make more complex passwords. The problem, though, is that these passwords are error-prone and take a long time to type in. A better choice, suggested by her research, is to use a collection of letters that can be pronounced. This is also much harder to crack. The lesson learned: passwords are still the weak entry point into our networks, and corporations that have deployed password managers or single sign-on tools have a leg up on protecting their data and their users’ logins.
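To make the idea of a pronounceable password concrete, here is a minimal sketch that strings together random consonant-vowel syllables. This is an illustrative assumption about what “a collection of letters that can be pronounced” might look like, not the scheme used in Cranor’s research. The letter sets and function names are hypothetical choices.

```python
import math
import secrets

# Hypothetical letter sets, chosen to avoid awkward or ambiguous sounds.
CONSONANTS = "bcdfghjklmnprstvz"
VOWELS = "aeiou"


def pronounceable_password(syllables: int = 5) -> str:
    """Build a password from random consonant-vowel pairs, e.g. 'ba', 'ko'."""
    if syllables < 1:
        raise ValueError("syllables must be at least 1")
    # secrets.choice draws from the operating system's secure random source.
    return "".join(
        secrets.choice(CONSONANTS) + secrets.choice(VOWELS)
        for _ in range(syllables)
    )


def entropy_bits(syllables: int) -> float:
    """Estimate entropy, assuming every syllable is chosen uniformly at random."""
    return syllables * math.log2(len(CONSONANTS) * len(VOWELS))


if __name__ == "__main__":
    print(pronounceable_password(6), round(entropy_bits(6), 1), "bits")
```

The entropy estimate only holds when the syllables are machine-generated. A password that a person invents to sound pronounceable will usually be far more predictable.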

- Another frequently viewed talk was given in Long Beach in 2011 by Ralph Langner, a German security consultant. He tells the now familiar story of how Stuxnet came to be created and how it was deployed against the Iranian nuclear plant at Natanz back in 2010.

What makes this relevant today is the effort that the Stuxnet creators (supposedly a combination of US and Israeli intelligence agencies) put into designing the malware to work in a very specific set of circumstances. In the years since Stuxnet’s creation, we’ve seen less capable malware authors also design custom code for specific purposes, target individual corporations, and leverage multiple zero-day attacks. It is worth reviewing the history of Stuxnet to refresh your knowledge of its origins. The story of how Symantec dissected Stuxnet, which I wrote about in 2011 for ReadWrite, is also worth reading.

- Avi Rubin’s 2011 talk in DC reviews how IoT devices can be hacked. He is a professor of computer science.

Back in 2011, some members of the general public still thought you could catch a cold from a computer virus. Rubin mentions that IoT devices were under attack as far back as 2006, something worth considering now that these attacks have become quite common (such as the Mirai attacks, which began in 2016). Since then, we have seen connected cars, smart home devices, and other networked devices become compromised. One lesson learned from watching Rubin’s talk is that attackers may not always follow your anticipated threat model and may compromise your endpoints with new and clever methods. Rubin urges defenders to think outside the box to anticipate the next threat.

- Del Harvey gave a talk in Vancouver in 2014. She handles security for Twitter and her talk is all about the huge scale brought about by the Internet and the sorts of problems she has to face daily.

She spoke about how many Tweets her company has to screen and examine for potential abuse, spam, or other malicious circumstances. Part of her problem is that she doesn’t have a lot of context to evaluate what a user is saying in their Tweets. Another part is scale: even if she makes just one mistake in a million Tweets, at Twitter’s volume that is still something that could happen 500 times a day. This is also a challenge for security defenders, who have to process a great deal of daily network traffic to find that one bad piece of malware buried in their log files. Harvey says it helps to visualize an impending catastrophe, and this contains a clue to how we have to approach the scale problem ourselves: through the use of better automated visualization tools to track down potential bad actors.
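The arithmetic behind the “500 times a day” figure is worth making explicit, because the same calculation applies to alert volumes in a security operations center. The sketch below assumes a daily volume of 500 million Tweets, which is the figure implied by the one-in-a-million example. The log volume and error rate in the second call are purely hypothetical.

```python
def expected_daily_events(items_per_day: int, one_in: int) -> float:
    """Expected number of times a 'one in N' event occurs per day."""
    if one_in <= 0:
        raise ValueError("one_in must be positive")
    return items_per_day / one_in


if __name__ == "__main__":
    # Implied by the talk's example: 500 million Tweets, one-in-a-million odds.
    print(expected_daily_events(500_000_000, 1_000_000))

    # Hypothetical SOC example: 2 billion log events per day and a detection
    # rule that misfires once in every 10 million events.
    print(expected_daily_events(2_000_000_000, 10_000_000))
```

Even a rule that is wrong only once in ten million events would, under those assumed numbers, still generate a steady stream of false alarms that someone has to triage.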

- This 2014 Berlin session by Carey Kolaja is about her experiences working for PayPal.

She was responsible for establishing new markets for the payments vendor that could help the world move money with fewer fees and less effort. Part of her challenge, though, was establishing the right level of trust so that payments would be processed properly and bad actors would be quickly identified. She tells the story of a US soldier in Iraq who was trying to send a gift to his family back in New York. The transaction was flagged by PayPal’s systems because of the convoluted route that the payment took. While this was a legitimate transaction, it shows that even back then we had to deal with a global reach and have some form of human evaluation behind all the technology to ensure that these oddball payments go through. The lesson for today is to examine authentication events that happen across the world and to put in place risk-based security scoring tools to flag similarly complex transactions. “Today trust is established in seconds,” she says, which also means that trust can be broken just as quickly.
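A risk-based scoring tool can be as simple as adding up weighted signals and routing high scores to a person instead of blocking them outright. The sketch below is a toy model under stated assumptions: the signals, weights, thresholds and field names are all hypothetical and do not describe PayPal’s systems or any particular product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AuthEvent:
    """A hypothetical login or payment event to be scored."""
    user: str
    origin_country: str
    known_countries: frozenset
    new_device: bool
    countries_in_route: int  # how many countries the transaction passes through


def risk_score(event: AuthEvent) -> int:
    """Sum illustrative risk weights; a higher score is more suspicious."""
    score = 0
    if event.origin_country not in event.known_countries:
        score += 40  # unfamiliar location for this user
    if event.new_device:
        score += 30  # device not seen before
    # Each extra country in the route adds 10 points, capped at 30.
    score += min(max(event.countries_in_route - 1, 0), 3) * 10
    return min(score, 100)


def decide(score: int) -> str:
    """Map a score to an action; high scores go to a human, not a hard block."""
    if score >= 60:
        return "human_review"
    if score >= 30:
        return "step_up_authentication"
    return "allow"


if __name__ == "__main__":
    # Loosely modeled on the soldier's gift: familiar device, unfamiliar
    # country, convoluted route.
    gift = AuthEvent("soldier", "IQ", frozenset({"US"}), False, 3)
    print(risk_score(gift), decide(risk_score(gift)))
```

Sending the highest scores to human review, instead of rejecting them automatically, reflects the point of the story: an unusual transaction can still be a legitimate one.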

- Our final talk is by Guy Goldstein and was given in 2010 in Paris. He talks about how hard it is to get attribution right after a cyber-attack.

Even back then it was difficult to pin down why you were targeted and who was behind the attack, let alone when you were first penetrated by an adversary. “Attribution is hard to get right,” he says, “and the consequences of getting it wrong are severe.” Wise words, and why you need to have red teams to boost your defensive capabilities to anticipate where an attack might come from.

As you can see, there is a lot to be gleaned from various TED talks, even ones that have been given at conferences many years ago. There are still security issues to be solved and many of them are still quite relevant to today’s environment. Happy viewing!

Lessons for leaders: learning from TED Talks

- Public DNS providers have proliferated and are worth a new look to protect your network from outages in conflict-prone hotspots around the world.

- Consider privacy implications of your staff’s social media posts and assemble appropriate guidelines for how they consume social media.

- Improve your password portfolio by using a password manager, a single sign-on tool or some other mechanism that makes passwords stronger and less onerous for your users to create.

- Think outside the box and visualize where your next threats will appear on your network.

- Examine whether risk-based authentication security tools can help provide more trustworthy transactions to thwart phishers.

- Build red teams to help harden your defenses.