I have had the pleasure of knowing Joey Skaggs for several decades and of observing his media hoaxing antics first-hand as he developed and deployed his many pranks. Skaggs is a professional hoaxer: he deliberately crafts elaborate stunts to fool reporters and get himself covered on TV and in newspapers, only to reveal afterward that the reporters have been had. He sometimes spends years constructing these set pieces, fine-tuning them and involving a cast of supporting characters to bring each hoax to life.

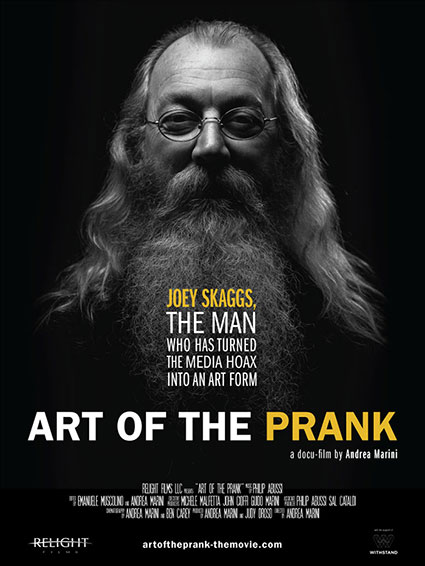

His latest stunt is a documentary about the making of another documentary, and it is being shown at film festivals around the world. I caught up with him this past weekend here in St. Louis, when our local film festival screened the movie, called The Art of the Prank. Ostensibly, this is a movie about Skaggs and one of his pranks. More about the movie in a moment.

I have covered Skaggs’ exploits a few times. In 1994, he created a story about a fake bust of a sex-based virtual reality venture called Sexonix, and I wrote a piece for Wired about how he was able to whip up passions with it. In the winter of 1998, I wrote about another of his hoaxes, which involved a rogue project at an environmental organization based in Queensland, Australia. The project had supposedly created and spread a genetically altered virus: when humans came into contact with it, they began to crave junk food. To add credibility to the story, the virus was said to have infected Hong Kong chickens, among other animals. Skaggs created a phony website for the hoax, complete with documentation and copies of emails and photos. Now remember, this was 1998: back then newspapers were still thriving, and the Web was just getting going as a source for journalists.

As part of this hoax, Skaggs also staged a fake demonstration outside the United Nations headquarters campus in New York City. The AP and the NY Post, along with European and Australian newspapers, duly covered the protest, and thus laid the groundwork for the hoax.

Over the years he has pulled off dozens of other hoaxes. He set up a computerized jurisprudence system called the Solomon Project that found OJ guilty, a bordello for dogs, a portable confessional booth mounted on a bicycle that he rode around one of the Democratic conventions, a miracle drug made from roaches, a company that bought unwanted dogs to use them as food, and more. Each of his setups seems genuine, which is why the media fall for them and report them as real. Only after the press clips come in does he reveal that he is the wizard behind the curtain and come clean that it was all phony.

Skaggs is a genius at mixing just the right amount of believable yet unverifiable information with specific details and actual events, such as the UN demonstration, to get reporters to drop their guard and run the story. Once one reporter falls for a hoax, Skaggs can build on that and get others to follow along. His hoaxes illustrate how little reporters actually investigate; in most cases they ignore the clues that he liberally sprinkles around. This is why the hoaxes work, and why even the same media outlets (he has been on CNN a number of times) keep falling for them.

In the movie, you see Skaggs preparing one of his hoaxes. I won’t give more details, in the hope that you will eventually get to see the film; I don’t want to spoil it for you. He carefully recruits actors to play specific roles, appoints his scientific “expert,” and gets the media, along with his documentary filmmaker, to follow along. It is one of his more brilliant set pieces.

Skaggs shows us that it pays to be skeptical and to spend some time verifying authenticity. Ironically, given today’s online climate and how hard the public has to work to verify basic facts, this has gotten a lot more difficult. Most of us take what we read on faith, especially if we have seen it somewhere online, such as on Wikipedia or in a Google search. As I wrote about the “peeps” hoax in 1998, “a website can change from moment to moment, and pinning down the truth may be a very difficult proposition. An unauthorized employee could post a page by mistake. One man’s truth is another’s falsehood, depending on your point of view. Also, how can you be sure that someone’s website is truly authentic? Maybe during the night a group of imposters has diverted all traffic from the real site to their own, or put up their own pages on the authentic site, unbeknown to the site’s webmaster?”

Today we have Snopes.com and fact-checking efforts by the major news organizations, but they still aren’t enough. All it takes is one gullible person with a huge Twitter following (I am sure you can think of a few examples), and a hoax is born.

In the movie, trusted information is scarce and hard to find, and you see how Skaggs builds his house of cards. It is well worth watching this master of media manipulation at work, and the film is a lesson for us all to be more careful, especially when we see something online, read about it in the newspapers, or see it on TV.

When the Internet was first created, its domain names were limited to Roman alphabet characters. This is the character set used by many of the world’s languages, but not all of them. As the Internet expanded across the globe, it connected countries where other writing systems were in use, such as Arabic or Chinese.

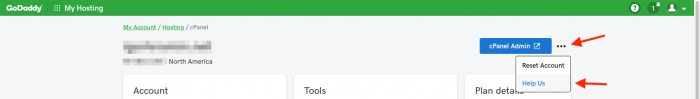

If you use GoDaddy hosting, you should go to your cPanel hosting portal, click on the small three dots at the top of the page (as shown above), click “help us” and ensure you have opted out.

Driven and imaginative leadership.

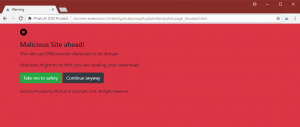

Part of this timing element is also how you deal with bugs and what happens when they occur. Yes, all software has bugs. But do you tell your users what a particular bug means? Sometimes you do; other times you put up some random error message that just annoys them.