In today’s changing times, tech companies must renew their focus on customers, and use their data effectively to create a holistic, 360-degree view of those customers. With this view in place, they can both improve the customer experience and better inform product development in order to attract new customers and retain existing customers. Facing fragmented data, slow and fragile data pipelines, growing demands and increasing costs, legacy data warehouse solutions are no longer sufficient. Enter next gen Cloud Data Platforms. With integrated data and seamless sharing, tech companies can now serve real-time analytics, scale up operations, and enhance the customer experience. This will take you to the slide deck for an IDG webinar that I did for Snowflake.

Category Archives: Big Data

Where Moneyball meets addiction counseling

A startup here in St. Louis is trying to marry the analytics of the web with the practice of addiction counseling and psychotherapy. In doing so, they are trying to bring the methods of Moneyball to improve therapeutic outcomes. It is an interesting idea, to be sure.

The firm is called Takoda, and it is the work of several people: David Patterson Silver Wolf, an academic researcher; Ken Zheng, their business manager; Josh Fischer, their co-founder and CTO; and Jake Webb, their web developer. I spoke to Fischer, who works full time for Bayer and supports Takoda on his own time as they bootstrap the venture. “It is hard to put all the various pieces together in a single company, which is probably why no one else has tried to do this before,” he told me recently.

The idea is to measure therapists based on patient performance during treatment, just like Moneyball measured runs delivered by each baseball player as their performance measurement. But unlike baseball, there is no single metric that everyone has created, certainly not as obvious as RBIs or homers.

We are at a unique time in the healthcare industrial complex today. Everyone has multiple electronic health records that are stored in vast digital coffins; so named because this is where data usually goes to die. Even if we see mostly doctors in a single practice group, chances are our electronic medical records are stored in various data silos all over the place, without the ability to link them together in any meaningful fashion.

On top of this, the vast majority of therapists have their own paper-based data coffins: file cabinets full of treatment notes that are rarely consulted again. Takoda is trying to open these repositories, without breaching any patient data privacy or HIPAA regulations.

Part of the problem is that when someone seeks treatment, they don’t necessarily learn how to get better or move beyond their addiction issues while they are in their therapist’s office. They have to do this on their own time, interacting with their families and friends, in their own communities and environment.

Another part of the problem is in how we select a therapist to see for the first time. Often, we get a personal referral, or else we hear about a particular office practice. When we walk in the door, we are usually assigned a therapist based on who is “up” – meaning the next person who has the lightest caseload or who is free at that particular moment when a patient walks in the door. This is how many retail sales operations work. The sole design criterion was to evenly distribute leads and potential customers. That is a bad idea and I will get to why in a moment.

Finally, the therapy industry uses two modalities that tend to make success difficult. One is that “good enough” is acceptable, rather than pursuing true excellence or curing a patient’s problem. When we seek medical care for something physically wrong with us, we can find the best surgeon, the best cardiologist, the best whatever. We look at their education, their experience, and so forth. Patients don’t have any way to do this when they seek counseling. The other issue is that therapists aren’t necessarily rewarded for excellence, and often practices let a lot of mediocre treatment slide. Neither is optimal, to be sure.

So along comes Takoda, who is trying to change how care is delivered, how success is measured, and whether we can match the right therapists to the patients to have the best treatment outcomes. That is a tall order, to be sure.

Takoda put together its analytics software and began building its product about a year ago. First they thought they could create something that is an add-on to the electronic health systems already in use, but quickly realized that wasn’t going to be possible. They decided to work with a local clinic here. The clinic agreed to be a proving ground for the technology and see if their methods work. They picked this clinic for geographic convenience (since the principals of the firm are also here in St. Louis) and because they already see numerous patients who are motivated to try to resolve their addiction issues. Also, the clinic accepts insurance payments. (Many therapists don’t deal with insurers at all.) They wanted insurers involved because many of them are moving in the direction of paying for therapy only if the provider can measure and show patient progress. While many insurers will pay for treatment, regardless of result, that is evolving. Finally, the company recognized that opioid abuse has slammed the therapy world, making treatment more difficult and challenging existing practices, so the industry is ripe for a change. Takoda recognizes that this is a niche market, but they had to start somewhere. “So we are going to reinvent this industry from the ground up,” said Fischer.

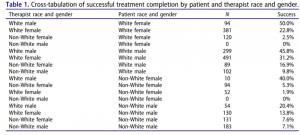

So what does their system do? First off, it uses research to better match patients with therapists, rather than leave this to chance or the “ups” system that has been used for decades. Research has shown that matching gender and race between the two can help or hurt treatment outcomes, using very rough success measures.

Second, it builds in some pretty clever stuff, such as using your smartphone to create geofences around potentially risky locations for each individual patient, and providing a warning signal to encourage the patient to steer clear of these locations.
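
To make the geofence idea concrete, here is a minimal sketch in Python. It assumes circular fences and a great-circle (haversine) distance check; I don't know how Takoda actually implements this, and the place names, coordinates and radii below are made up for illustration.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def fences_entered(position, fences):
    """Return the labels of every fence whose radius contains the position."""
    lat, lon = position
    return [f["label"] for f in fences
            if haversine_m(lat, lon, f["lat"], f["lon"]) <= f["radius_m"]]


# Hypothetical risky locations for one patient (invented coordinates).
risky_places = [
    {"label": "old hangout", "lat": 38.6270, "lon": -90.1994, "radius_m": 200},
    {"label": "liquor store", "lat": 38.6400, "lon": -90.2500, "radius_m": 150},
]

# A phone a few meters from the first location would trigger a warning.
alerts = fences_entered((38.6271, -90.1995), risky_places)
```

A real app would get the position from the phone's location services and decide how to word the warning; the distance check is the only part sketched here.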

Finally, their system will “allow practice offices to see how their therapists are performing and look carefully at the demographics,” said Fischer. “We have to change the dynamic of how therapy care is being done and how therapists are rated, to better inform patients.”

It is too early to tell if Takoda will succeed or not, but if they do, the potential benefits are clear. Just like in Moneyball, where a poorly-performing team won more games, they hope to see a transformation in the therapy world with a lot more patient “wins” too.

A new way to do big data with entity resolution

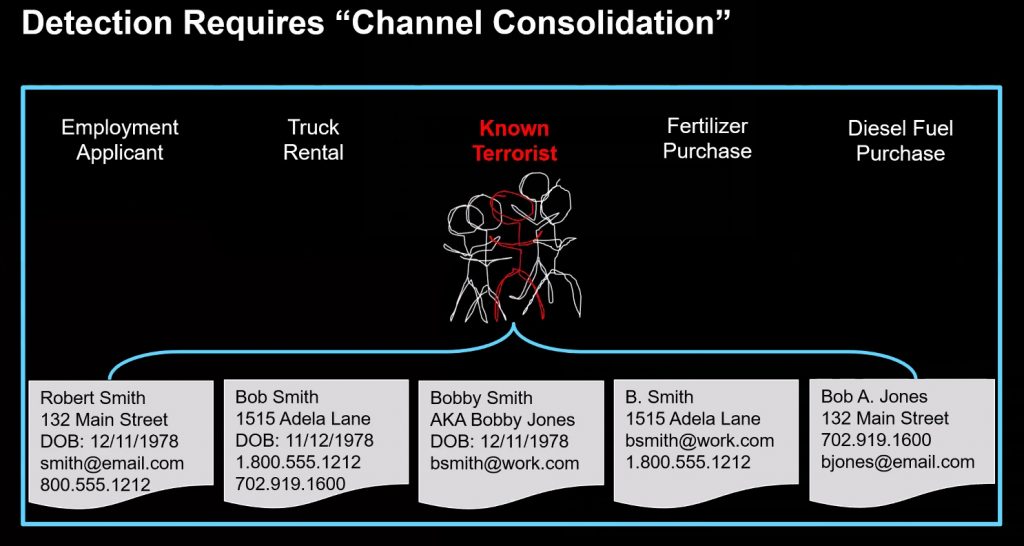

I have this hope that most of you reading this post aren’t criminals, or terrorists. So this might be interesting to you, if you want to know how they think and carry out their business. Their number one technique is called channel separation, the ability to use multiple identities to prevent them from being caught.

Let’s say you want to rob a bank, or blow something up. You use one identity to rent the getaway car. Another to open an account at the bank. And other identities to hire your thugs or whatnot. You get the idea. But in the process of creating all these identities, you aren’t that clever: you leave some bread crumbs or clues that connect them together, as is shown in the diagram below.

This is the idea behind a startup that has just come out of stealth called Senzing. It is the brainchild of Jeff Jonas. The market category for these types of tools is called entity resolution. Jonas told me, “Anytime you can catch criminals is kind of fun. Their primary tradecraft holds true for anyone, from bank robbers up to organized crime groups. No one uses the same name, address, phone when they are on a known list.” But they leave traces that can be correlated together.
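
To show what "traces that can be correlated together" might look like in practice, here is a toy sketch in Python. It is not Senzing's algorithm, which I haven't seen; it simply assumes that two records belong to the same entity if they share a phone, address or email, and merges them with a union-find structure. The record names and field values are invented.

```python
from collections import defaultdict


def resolve_entities(records, keys=("phone", "address", "email")):
    """Group record ids that share at least one identifying attribute value."""
    parent = {r["id"]: r["id"] for r in records}

    def find(x):
        # Follow parent links to the group's root, shortening the path as we go.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    def union(a, b):
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[rb] = ra

    first_seen = {}  # (attribute, normalized value) -> first record id carrying it
    for r in records:
        for k in keys:
            v = r.get(k)
            if not v:
                continue
            key = (k, v.strip().lower())
            if key in first_seen:
                union(first_seen[key], r["id"])
            else:
                first_seen[key] = r["id"]

    groups = defaultdict(list)
    for r in records:
        groups[find(r["id"])].append(r["id"])
    return sorted(sorted(g) for g in groups.values())


# Three identities used for one job, plus an unrelated record (all invented).
records = [
    {"id": "car_rental", "name": "A. Smith", "phone": "555-0101", "address": "12 Elm St"},
    {"id": "bank_account", "name": "Bob Jones", "phone": "555-0199", "address": "12 elm st"},
    {"id": "hired_help", "name": "C. Doe", "phone": "555-0199"},
    {"id": "bystander", "name": "Dana Roe", "phone": "555-0000"},
]

entities = resolve_entities(records)
```

The names never match, but the shared address and phone number tie the first three records into one entity. Real entity resolution also has to cope with typos, nicknames and partial matches, which this sketch ignores.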

Entity resolution is big business. There are more than 50 firms that sell some kind of service based on this, but they offer more of a custom consulting tool that requires a great deal of care and feeding and specialized knowledge. Many companies end up with million-dollar engagements by the time they are done. Jonas is trying to change all that and make it much cheaper to do it. You can run his software on any Mac or Windows desktop, rather than have to put a lot of firepower behind the complex models that many of these consulting firms use.

Who could benefit from his product? Lots of companies. For example, a supply chain risk management vendor can use it to scrape data from the web and determine who is making trouble for a global brand. Or environmentalists looking to find frequent corporate polluters. A finservices firm that is trying to find the relationship between employees and suspected insider threats or fraudulent activities. Or child labor lawyers trying to track down frequent miscreants. You get the idea. You know the data is out there in some form, but it isn’t readily or easily parsed. “We had one firm that was investigating Chinese firms that had poor reputations. They got our software and two days later were getting useful results, and a month later could create some actionable reports.” The ideal client? “Someone who has a firm that may be well respected, but no one actually calls” with an engagement, he told me.

Jonas started developing his tool when he was working at IBM several years ago. I interviewed him for ReadWrite and found him fascinating. An early version of his software played an important role in figuring out that the young card sharks behind the movie 21 were taking advantage of card counting in several Vegas casinos; it was able to match up their winnings all over town and get the team banned. Another example comes from Colombian universities that saved $80M after finding 250,000 fake students being enrolled.

IBM gets a revenue share from Senzing’s sales, which makes sense. The free downloads are limited in terms of how much data you can parse (10,000 records), and they also sell monthly subscriptions that start at up to $500 for the simplest cases. It will be interesting to see how widely his tool will be used: my guess is that there will be lots of interesting stories to come.

FIR B2B Podcast #89: Fake Followers and Real Influence

The New York Times last week published the results of a fascinating research project entitled The Follower Factory, that describes how firms charge to add followers, retweets, likes and other social interactions to social media profiles. While we aren’t surprised at the report, it highlights why B2B marketers shouldn’t shortcut the process of understanding the substance of an influencer’s following when making decisions about whom to engage. The Times report identifies numerous celebrities from entertainment, business, politics, sports and other areas who have inflated their follower numbers for as little as one cent per follower. In most cases, the fake followers are empty accounts without any influence or copies of legitimate accounts with subtle tweaks that mask their illegitimacy.

The topic isn’t a new one for either of us. Paul wrote a book on the topic more than ten years ago. Real social media influencers get that way through an organic growth in their popularity, because they have something to say and because people respond to them over time. There is no quick fix for providing value.

Twitter is a popular subject for analysis because it’s so transparent: Anyone can investigate follower quality and root out fake accounts or bots by clicking on the number of followers in an influencer’s profile. Other academic researchers have begun to use Twitter for their own social science research, and a new book by UCLA professor

Paul and David review some of their time-tested techniques to growing your social media following organically, and note the ongoing value of blogs as a tool for legitimate influencers to build their followings.

You can listen to our 16 min. podcast here:

Researching the Twitter data feed

A new book by UCLA professor is available online free for a limited time, and I recommend you download a copy now. While written mainly for academic social scientists and other researchers, it has a great utility in other situations.

Zachary has been working with analyzing Twitter data streams for several years, and basically taught himself how to program enough code in Python and R to be dangerous. The book assumes a novice programmer, and provides the code samples you need to get started with your own analysis.

Why Twitter? Mainly because it is so transparent. Anyone can figure out who follows whom, and easily drill down to immediately see who these followers are and how often they actually use Twitter themselves. Most Twitter users by default have open accounts, and want people to engage them in public. Contrast that with Facebook, where the situation is the exact opposite and the data is thus much harder to access.

To make matters easier, Twitter data comes packaged in three different APIs: streaming, search and REST. The streaming API provides data in near-real-time and is the best way to get data on what is currently trending in different parts of the world. The downside is that you could be picking a particularly dull moment in time when nothing much is happening. The streaming API is limited to just one percent of all tweets: you can filter and focus on a particular collection, such as all tweets from one country, but still you only get one percent. That works out to about five million tweets daily.
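
As a quick sanity check on those numbers, here is the arithmetic in Python. The only inputs are the two figures quoted above; the implied total is my own back-of-the-envelope estimate, not a number from the book.

```python
SAMPLE_PERCENT = 1                    # streaming API share of all tweets, per the text
SAMPLED_TWEETS_PER_DAY = 5_000_000    # approximate daily figure quoted above


def implied_total(sampled, percent):
    """Scale a sampled count up to the full population it was drawn from."""
    return sampled * 100 // percent


# If one percent is about five million tweets, the full stream is about 100x that.
total_per_day = implied_total(SAMPLED_TWEETS_PER_DAY, SAMPLE_PERCENT)
```

In other words, the one percent sample implies roughly half a billion tweets a day across the whole service.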

Many researchers run multiple queries so they can collect more data, and several have published interesting data sets that are available to the public. And there is this map that shows patterns of communication across the globe over an entire day.

The REST API has limits on how often you can collect and how far back in time you can go, but isn’t limited to the real-time feed.

Interesting things happen when you go deep into the data. When Zachary first started with his Twitter analysis, he found, for example, a large body of basketball-related tweets from Cameroon, and upon further analysis linked them to a popular basketball player (Joel Embiid) who was from that country and had a lot of hometown fans across the ocean. He also found that lots of tweets from the Philippines in Tagalog were being miscataloged as an unknown language. When countries censor Twitter, that shows up in the real-time feed too. Now that he is an experienced Twitter researcher, he focuses his study on smaller Twitterati: studying the celebrities or those with massive Twitter audiences isn’t really very useful. The smaller collections are more focused, and trends in them are easier to spot.

So take a look at Zachary’s book and see what insights you can gain into your particular markets and customers. It won’t cost you much money and could pay off in terms of valuable information.

When anonymous web data isn’t anymore

One of my favorite NY Times technology stories (other than, ahem, my own articles) is one that ran more than ten years ago. It was about a supposedly anonymous AOL user who was picked from a huge database of search queries by researchers. They were able to correlate her searches and tracked down Thelma, a 62-year-old widow living in Georgia. The database was originally posted online by AOL as an academic research tool, but after the Times story broke it was removed. The data “underscore how much people unintentionally reveal about themselves when they use search engines,” said the Times story.

In the intervening years since that story, tracking technology has gotten better and Internet privacy has all but effectively disappeared. At the DEFCON trade show a few weeks ago in Vegas, researchers presented a paper on how easy it can be to track down folks based on their digital breadcrumbs. The researchers set up a phony marketing consulting firm and requested anonymous clickstream data to analyze. They were able to actually tie real users to the data through a series of well-known tricks, described in this report in Naked Security. They found that if they could correlate personal information across ten different domains, they could figure out who was the common user visiting those sites, as shown in this diagram published in the article.
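
The correlation trick is easier to see with a toy example. The Python sketch below assumes the clickstream maps a pseudonymous id to the set of domains that id visited, and that you separately know a handful of domains a real person has visited (say, from links they shared publicly). The ids and domains are invented, and this is a simplification of what the researchers described, not their code.

```python
def candidates_for(known_domains, clickstream):
    """Return the pseudonymous ids whose history contains every known domain."""
    remaining = None
    for domain in known_domains:
        visitors = {uid for uid, visited in clickstream.items() if domain in visited}
        # Each additional known domain can only shrink the candidate pool.
        remaining = visitors if remaining is None else remaining & visitors
    return remaining or set()


# Hypothetical "anonymous" clickstream: pseudonymous id -> domains visited.
clickstream = {
    "user-7f3a": {"news.example", "forum.example", "shop.example", "blog.example"},
    "user-19c2": {"news.example", "shop.example"},
    "user-b804": {"news.example", "forum.example"},
}

one_clue = candidates_for(["news.example"], clickstream)
two_clues = candidates_for(["news.example", "forum.example"], clickstream)
three_clues = candidates_for(["news.example", "forum.example", "blog.example"], clickstream)
```

With one known domain all three ids are candidates; with three, only one is left. That is why a modest number of correlated domains can be enough to single out a person.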

The culprits are browser plug-ins and embedded scripts on web pages, which I have written about before here. “Five percent of the data in the clickstream they purchased was generated by just ten different popular web plugins,” according to the DEFCON researchers.

So is this just some artifact of gung-ho security researchers, or does this have any real-world implications? Sadly, it is very much a reality. Last week Disney was served legal papers about secretly collecting kids’ usage data from its mobile apps. The suit says that the apps (which don’t ask parents’ permission for the kids to use them, which is illegal) can track the kids across multiple games. All in the interest of serving up targeted ads. The full list of 43 apps that have this tracking data can be found here, including the one shown at right.

So what can you do? First, review your plug-ins, delete the ones that you really don’t need. In my article linked above, I try out Privacy Badger and have continued to use it. It can be entertaining or terrifying, depending on your POV. You could regularly delete your cookies and always run private browsing sessions, although you do give up some usability for doing so.

Privacy just isn’t what it used to be. And it is a lot of hard work to become more private these days, for sure.

Everyone is now a software company (again)

Several years ago I wrote, “everyone is in the software business. All of the interesting business operations are happening inside your company’s software.” Since then, this trend has intensified. Today I want to share with you three companies that should come under the software label. And while you may not think of these three as software vendors, all three run themselves like a typical software company.

The three are Tesla, Express Scripts, and the Washington Post. It is just mere happenstance that they also make cars, manage prescription benefits and publish a newspaper. Software lies at the heart of each company, as much as a Google or a Microsoft.

In my blog post from 2014, I talked about how the cloud, big data, creating online storefronts and improving the online customer experience are driving more companies to act like software vendors. That is still true today. But now there are several other things to look for that make Tesla et al. into software vendors:

- Continuous updates. One of the distinguishing features of the Tesla car line is that they update themselves while they are parked in your garage. Most car companies can’t update their fleet as easily, or even ever. You have to bring them in for servicing, to make any changes to how they operate. Tesla’s dashboard is mostly contained inside a beautiful and huge touch LED screen: the days of dedicated dials are so over. These continuous updates are also the case for The Washington Post website, so they can stay competitive and current. The Post publishes more total articles than the NYTimes, even though the Times has double the reporting staff of the DC-based paper. That shows how seriously they take their digital mission too.

- These companies are driven by web analytics and traffic and engagement metrics. Just like Google or some other SaaS-based vendor, The Washington Post post-Bezos is obsessed with stats. Which articles are being read more? Can they get quicker load times, especially on mobile devices? Will readers pay more for this better performance? The Post will try out different news pegs for each piece to see how it performs, just like a SaaS vendor does A/B testing of its pages.

- Digital products are the drivers of innovation. “There are no sacred cows [here, we] push experimentation,” said one of the Post digital editors. “It is basically, how fast do you move? Innovation thrives in companies where design is respected.” The same is true for Express Scripts. “We have over 10 petabytes of useful data from which we can gain insights and for which we can develop solutions,” said their former CIO in an article from several years ago.

- Scaling up the operations is key. Tesla is making a very small number of cars at present. They are designing their factories to scale up, to where they can move into a bigger market. Like a typical SaaS vendor, they want to build in scale from the beginning. They built their own ERP system that shortens the feedback loop from customers to engineers and manages their entire operations, so they can make quick changes when something isn’t working. You don’t think of car companies being so nimble. The same is true for Express Scripts. They are in the business of managing your prescriptions, and understanding how people get their meds has become more of a big data problem. They can quickly figure out if a patient is following their prescription and predict the potential pill waste if they aren’t. The company has developed a collection of products that tie in an online customer portal to their call center and mobile apps.
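
The A/B testing mentioned in the second bullet comes down to comparing two click-through rates and asking whether the gap is bigger than chance would explain. Here is a minimal sketch in Python using a two-proportion z-test. The headline counts are invented, and I have no idea which statistical method the Post or any particular SaaS vendor actually uses.

```python
import math


def two_proportion_z(clicks_a, views_a, clicks_b, views_b):
    """Return (z, two-sided p-value) for the difference between two click rates."""
    p_a = clicks_a / views_a
    p_b = clicks_b / views_b
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    z = (p_b - p_a) / se
    # Standard normal CDF via the error function, doubled for a two-sided test.
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return z, p_value


# Hypothetical test: headline A got 100 clicks in 1,000 views, headline B got 150.
z, p_value = two_proportion_z(100, 1000, 150, 1000)
better_headline = "B" if z > 0 and p_value < 0.05 else "no clear winner"
```

With these made-up numbers, headline B wins comfortably. With smaller samples or a narrower gap, the honest answer is often "no clear winner yet", which is why these tests need a lot of traffic.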

I am sure you can come up with other companies that make normal stuff like cars and newspapers that you can apply some of these metrics to. The lessons learned from the software industry are slowly seeping into other businesses, particularly those businesses that want to fail fast and adapt more quickly as their markets and customers change.

SecurityIntelligence blog: Tracking Online Fraud: Check Your Mileage Against Endpoint Data

A recent Simility blog post detailed how it is tracking online fraud. With the help of a SaaS-based machine learning tool, the company and its beta customers have seen a 50 to 300 percent reduction in fraudulent online transactions. This last January, they looked at 100 different behaviors across 500,000 endpoints scattered around the world. They found more than 10,000 of those devices were compromised, and then looked for patterns of similar behavior. They found seven commonalities, and some of them are surprising.

You can read my blog post on IBM’s SecurityIntelligence.com here.

IBM SecurityIntelligence blog: Can You Still Protect Your Most Sensitive Data?

An article in The Washington Post called “A Shift Away From Big Data” chronicled several corporations that are actually deleting their most sensitive data files rather than saving them. This is counterintuitive to today’s collect-it-all data-heavy landscape.

However, enterprises are looking to own their encryption keys and protect their metadata privacy. Plus, there is a growing concern that American-based companies are more vulnerable to government requests than offshore businesses.

You can read more on IBM’s SecurityIntelligence.com blog here.

The blockchain world gets more interesting by the day

I was at a conference last week where everyone was doing some interesting things with blockchain technology. This is the not-so-secret sauce behind Bitcoin: a transaction log that is verifiable and can be synchronized across distributed servers and still handle multiple trust relationships, where chargebacks can’t happen and where the crypto is strong enough to have banks and other financial institutions spending millions of dollars supporting dozens of startups.
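
The "verifiable transaction log" part is simpler than it sounds. Here is a stripped-down sketch in Python of a hash-chained ledger, where each entry records the hash of the one before it. It leaves out everything that makes a real blockchain hard (distributed consensus, digital signatures, mining), and the function names and shipment data are my own invention for illustration.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def block_hash(index, prev_hash, payload):
    """SHA-256 over a canonical JSON encoding of the block's contents."""
    body = json.dumps({"index": index, "prev": prev_hash, "payload": payload},
                      sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append(chain, payload):
    """Add a new entry that commits to the hash of the previous one."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    index = len(chain)
    chain.append({"index": index, "prev": prev_hash, "payload": payload,
                  "hash": block_hash(index, prev_hash, payload)})


def is_valid(chain):
    """Recompute every hash and check each link back to the start."""
    prev_hash = GENESIS_HASH
    for i, block in enumerate(chain):
        if block["index"] != i or block["prev"] != prev_hash:
            return False
        if block["hash"] != block_hash(i, prev_hash, block["payload"]):
            return False
        prev_hash = block["hash"]
    return True


ledger = []
append(ledger, {"from": "factory", "to": "port", "item": "crate-17"})
append(ledger, {"from": "port", "to": "warehouse", "item": "crate-17"})
valid_before = is_valid(ledger)

# Quietly rewriting history breaks the chain of hashes.
ledger[0]["payload"]["to"] = "somewhere else"
valid_after = is_valid(ledger)
```

Because every entry depends on the one before it, changing an old record invalidates everything after it. Copies of the log held on other servers can each run the same check, which is where the "synchronized across distributed servers" part comes in.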

I have written before about blockchain tech for IBM’s SecurityIntelligence blog here, but what got me interested about the conference was how practical blockchain implementations have been and will be. This is especially true in changes to the world of supply chains, where goods move across the globe under a variety of incomplete and error-prone tracking circumstances.

Indeed, at the conference I saw lots of blockchain apps that related to supply chains and had almost nothing to do with cryptocurrencies. This is an industry that is ripe for change. As one analyst has written, many supply chains have data quality issues and automation has failed to deliver significant productivity gains. That could change with these new apps.

For example, there is a company called Everledger.io. The idea is to attach a unique digital signature to each and every diamond that is traded on the various international exchanges. This signature can be immediately verified with the actual item itself – like the way a checksum can be used to verify if a digital file has been altered – to ensure that the diamond hasn’t been tampered with or substituted. So far they have been able to track close to a million diamonds in this fashion. According to insurers, about seven percent of the world’s diamonds are fraudulent in one way or another. Last fall, data from the Gemological Institute of America, the main diamond industry certification body, was altered by hackers.
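
The checksum analogy can be sketched in a few lines of Python. This assumes a stone is described by a small set of measured attributes that get hashed into a fingerprint; the attribute names and values are invented, and I don't know which measurements or hashing scheme Everledger actually uses.

```python
import hashlib
import json


def fingerprint(attributes):
    """SHA-256 over a canonical encoding of an item's measured attributes."""
    canonical = json.dumps(attributes, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def matches_record(measured, recorded_fingerprint):
    """True if fresh measurements reproduce the fingerprint on the ledger."""
    return fingerprint(measured) == recorded_fingerprint


# Hypothetical certificate data recorded when the stone was first registered.
registered = {"carat": "1.02", "cut": "round", "color": "F", "clarity": "VS1",
              "inscription": "EXAMPLE-0001"}
on_ledger = fingerprint(registered)

# Later, at the point of sale, the stone is measured again.
same_stone = matches_record(dict(registered), on_ledger)
swapped_stone = matches_record({**registered, "carat": "0.98"}, on_ledger)
```

Any change to the measured attributes produces a completely different fingerprint, so a substituted stone fails the check. In practice physical measurements are noisy, so a real system would need some tolerance that an exact hash like this one does not provide.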

We are still in early days, but you can see there are lots of other situations, such as detecting when counterfeit goods enter a supply chain, that are ripe for blockchain applications. Sending prescription drugs around the world is another high-value application for which several teams are building blockchain apps.

One FedEx manager was on a panel where they spoke about how they need new technology for managing their supply chain. “The immutability of the transaction is important for us: are you who you say you are, and are you shipping what you say you are shipping?” They spend a lot on insurance and it would be nice if they could leverage blockchain tech to prove that a package actually did make it to the final destination, with something other than an illegible signature.

While they can track a package from when it leaves your door through their shipment network, that only works if they have control over the shipment from end-to-end. That isn’t always the case, especially internationally, where it can be more cost-effective if they can hand off a package to another shipper. The panel also brought up an interesting question, as to what constitutes a delivery address, with one of them holding up his phone, saying how he wants to be able to deliver something right to where he is at the moment. That has a lot of appeal to me, as I recall how many hours I have spent trying to find a package delivery person when I stepped out of my office for a moment.

Also speaking was a representative of Chattanooga-based Dynamo, a new accelerator for supply chain ventures. They are funding several blockchain-related startups. “It isn’t just about saving money with these kinds of businesses, but about finding opportunities to expand commerce.”

The conference started off with a speech from Brian Behlendorf, who is now in charge of the Hyperledger project that is part of the Linux Foundation. He has been around the tech industry for a long time, putting up Wired magazine’s early website and developing numerous open source projects. The idea behind Hyperledger is to have an open source project that can be used in a number of blockchain circumstances. Think of what the Apache programmers did for web servers decades ago: Hyperledger will attempt the same thing, with a set of protocols and standard infrastructure to build blockchain apps on top of.

Before the conference took place, a pre-conference hackathon was held and more than a dozen teams and 50 people participated to win the top prize of $20k. The winners included college students, which should give you an idea of how quickly blockchain is evolving. Unlike many hackathons where the winners get to pose with an oversize check, in this case the winning teams’ prize money was preloaded in bitcoin on a special cryptokey, which was quite fitting. The first place finishers wrote an app to eliminate ID fraud, using blockchain to encrypt and validate who you actually are.

Blockchain isn’t just all about the supply chain: the banks are getting involved too. A private effort from R3 has more than 40 financial services supporters to try to create standards for distributed ledgers. Barclays has more than 45 Bitcoin-related projects. Deloitte has a group based in Toronto doing cryptocurrency and blockchain consulting. A Berlin neighborhood has dozens of retailers who accept bitcoins. Finally, there are other currencies that are gaining traction, including Ethereum and Dash.org, that attempt to improve upon the original bitcoin specifications and are further fueling blockchain interest.

It looks like there will be lots of blockchain-related news in the coming months.