Jack Posobiec, Mike Benz, Justine Sacco, Samara Duplessis. If you have never heard of any of these people, this post might be illuminating about how online conspiracies are created and thrive. It is based on a new book, “Invisible Rulers: The People Who Turn Lies into Reality,” by Renee DiResta, a computer science researcher whom I have followed over many years. DiResta has been involved in debunking various memes, such as Pizzagate, “stolen” elections, anti-vaxxers, Wayfair selling kids inside its filing cabinets and numerous other cabals. It is now quite possible to mass-produce unreality.

Her book describes how a toxic mixture of influencers, algorithms and crowd responses is used to construct various intricate and believable online conspiracies. She calls the result of this unholy trinity a bespoke reality, a self-reinforcing mechanism that has been constructed over the years and causes a lot of pain and suffering for unsuspecting people. “Platforms have imbued crowds with new qualities. They are no longer fleeting and local but persistent and global,” she writes. She herself has been the target of a few internet mobs, getting sued, doxxed, misquoted and more. Earlier this summer, she lost her job at the Stanford Internet Observatory, a research outfit she ran with Alex Stamos, who left last year. That link describes what SIO will become without their leadership, and it is debatable whether the operation still really exists.

Clearly, “it is not a good time to be in the content moderation industry,” said 404 Media’s Jason Koebler. Trust and safety moderation teams are all but disbanded, and big consulting contracts to comb through the millions of toxic posts on various social networks aren’t being renewed. Facebook announced earlier this year that it was shutting down CrowdTangle, its major research tool, to be replaced by something that may or may not actually be useful. We all know what happened over at Twitter when it was bought by a billionaire man-boy, including the repricing of access to its APIs. What used to be free back in the Before Times now costs $42,000 a month. And new research from CheckMyAds indicates that advertisers are returning there, only this time their ads are being shoehorned into comments, including the comments on posts that violate the platform’s own content rules about hate speech.

@checkmyads

Elon Musk’s X placed ads for dozens of brands in the replies below posts that violate the X Rules against hateful content. Here’s what we found when we looked at a sampling of posts.


It seems all social media have adopted a model of toxic influencer-as-a-service. “What matters is keeping fans engaged, aggrieved and subscribed,” says DiResta. She talks about how the influencer is not just telling the story, but becomes part of the story itself. Influencers can adopt one of several roles or personas: the Entertainer, the Explainer, the Bestie, the Idol and the Guru. There are also Generals, who keep the mob in a lather; Reflexive Contrarians, a particular type of explainer who tells you why everything you know is wrong; Propagandists; and the Perpetually Aggrieved. This last type has a solid understanding of how platform algorithms amplify its content and how to avoid moderation efforts, and cries “censorship” when it runs afoul of them.

No matter what type of influencer one is, the real measure of success comes when they amass a large enough audience that they become like Enron, “too big to cancel.” At that point, truth and interest all become relative, and almost irrelevant. This is what she calls the Fantasy Industrial Complex, a cinematic universe that is no different from the comics.

But the cinematic universe has to have its villains to succeed. If you create an online service that focuses on a particular self-selected audience (say Parler as an example), you lose the ability to fight the others, and your perpetual complaints don’t land. “There is no opportunity to spin up an aggrievement fest over being wrongfully moderated,” she writes. By design, you can’t own your enemies. So sad.

The title of this post, “big if true,” refers to what influencers say in their rush to publish some content. “Experts may wait to be sure of something,” says DiResta. “But not influencers. And if this turns out to be false? Oh, well, they were just sharing their opinion and just asking questions.” Trolling is fun, and quite profitable, it turns out. And it almost doesn’t matter if the statements actually advance a cause or prove anything. “The point is the fight. Winning insights, in fact, negatively impacts the influencer because resolution would reduce the potential for future monetizable content,” she writes.

This has several implications. We are no longer in the arena of freedom of speech: instead, we debate the freedom of reach. It isn’t about hosting content on a particular platform, but how it is promoted and packaged. We aren’t talking about the marketplace of ideas, but the way those ideas are manipulated.

DiResta’s book should be required reading for all PR people and marketers. The last portion of her book has some very concrete suggestions on how to turn down the toxicity, and try to return to a bespoke world that actually has some basis in truth. If you don’t want to read it, I suggest watching the middle third or so of her interview with Quentin Hardy. And maybe re-evaluate your social media presence. “If we want virtual town squares” in our online world, she says, “we have to act like the people on them are our actual neighbors.”

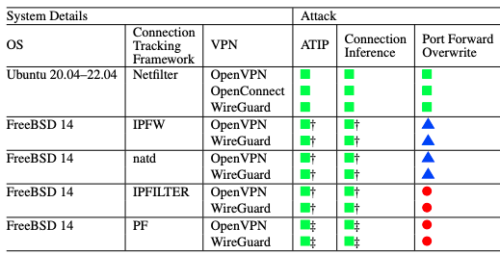
