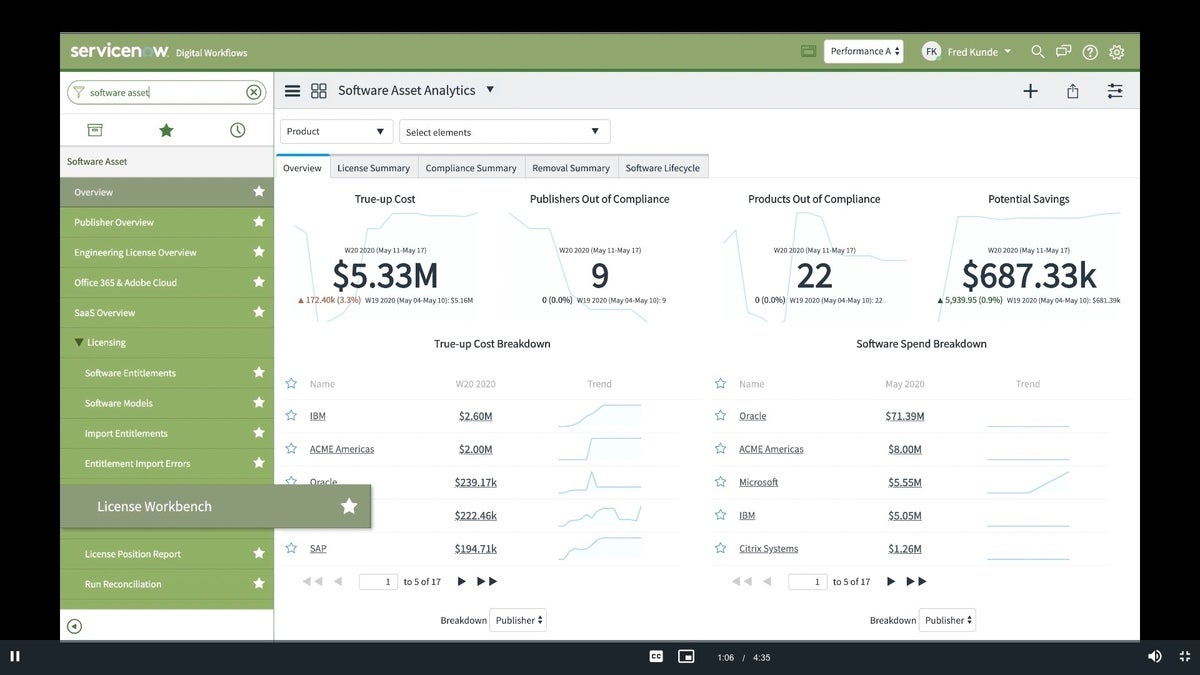

The vulnerabilities of the Apache Log4j logging package, and the attacks they’ve drawn, have made one thing very clear: if you haven’t yet implemented a software inventory across your enterprise, now is the time to start evaluating and implementing such tools. These aren’t new: I recall testing one of the earlier products, Landesk, which is now a part of Ivanti, back in the early 1990s. In this post for Infoworld, I go into detail about Ivanti and four other leading tools from Atlassian, ServiceNow (shown above), ManageEngine and Spiceworks: why these tools are needed in modern software development organizations, how you should go about evaluating them, what their notable features are, and what they will cost.

Category Archives: App Dev tools

Infoworld: How Roblox fixed a three-day worldwide infrastructure outage

Last October the gaming company Roblox’s online network went down, an outage that lasted three days. The site is used by 50M gamers daily. Figuring out and fixing the root causes of this disruption took a massive effort by engineers at both Roblox and its main tech supplier, HashiCorp. The company eventually posted an amazing analysis in a blog post at the end of January. Roblox got bitten by a strange coincidence of several events. The processes they went through to diagnose and ultimately fix things are instructive to readers who are doing similar projects, especially if you are running any large-scale IaC installations or are a heavy user of containers and microservices across your infrastructure.

There are a few things to be learned from the Roblox outage that I discuss in my latest story for Infoworld.

Infoworld: What app developers need to do now to fight Log4j exploits

Earlier this month, security researchers uncovered a series of major vulnerabilities in the Log4j Java software that is used in tens of thousands of web applications. The code is widely used across consumer and enterprise systems, in everything from Minecraft, Steam, and iCloud to Fortinet and Red Hat systems. One analyst estimates that millions of endpoints could be at risk.

At least four major vulnerabilities have been found in Log4j. What is clear is that as an application developer, you have a lot of work to do to find, fix, and prevent Log4j issues in the near term, and a few things to worry about in the longer term.
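To make the "find" step concrete, here is a minimal sketch in Python that walks a directory tree and flags `log4j-core` JAR files whose file names suggest an older 2.x release. The helper names (`parse_version`, `find_outdated_log4j`) and the 2.17.1 cutoff are my own assumptions for illustration; check the Apache Log4j security page for the release that is currently recommended. A file-name scan like this will also miss copies that are renamed or bundled inside other archives, so treat it as a first pass rather than a full inventory.

```python
import re
from pathlib import Path

# Assumption: 2.17.1 is used as the illustrative "minimum safe" release.
MIN_SAFE = (2, 17, 1)

# Matches names such as log4j-core-2.14.1.jar or log4j-core-2.0-beta9.jar.
JAR_PATTERN = re.compile(r"log4j-core-(\d+)\.(\d+)(?:\.(\d+))?.*\.jar$")


def parse_version(jar_name):
    """Return (major, minor, patch) parsed from a log4j-core JAR name, or None."""
    match = JAR_PATTERN.match(jar_name)
    if match is None:
        return None
    major, minor, patch = match.groups()
    return (int(major), int(minor), int(patch or 0))


def find_outdated_log4j(root):
    """List (path, version) for log4j-core 2.x JARs under root older than MIN_SAFE."""
    findings = []
    for jar in Path(root).rglob("log4j-core-*.jar"):
        version = parse_version(jar.name)
        if version is not None and version[0] == 2 and version < MIN_SAFE:
            findings.append((str(jar), version))
    return sorted(findings)


if __name__ == "__main__":
    # Scan the current directory; pass another path to scan elsewhere.
    for path, version in find_outdated_log4j("."):
        print(path, ".".join(str(part) for part in version))
```

Running the script from the top of an application or server directory prints one line per suspect JAR. A software inventory tool does the same job across a whole fleet, but a small script can help while you are still evaluating products.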

You can read my analysis and suggested strategies in Infoworld here.

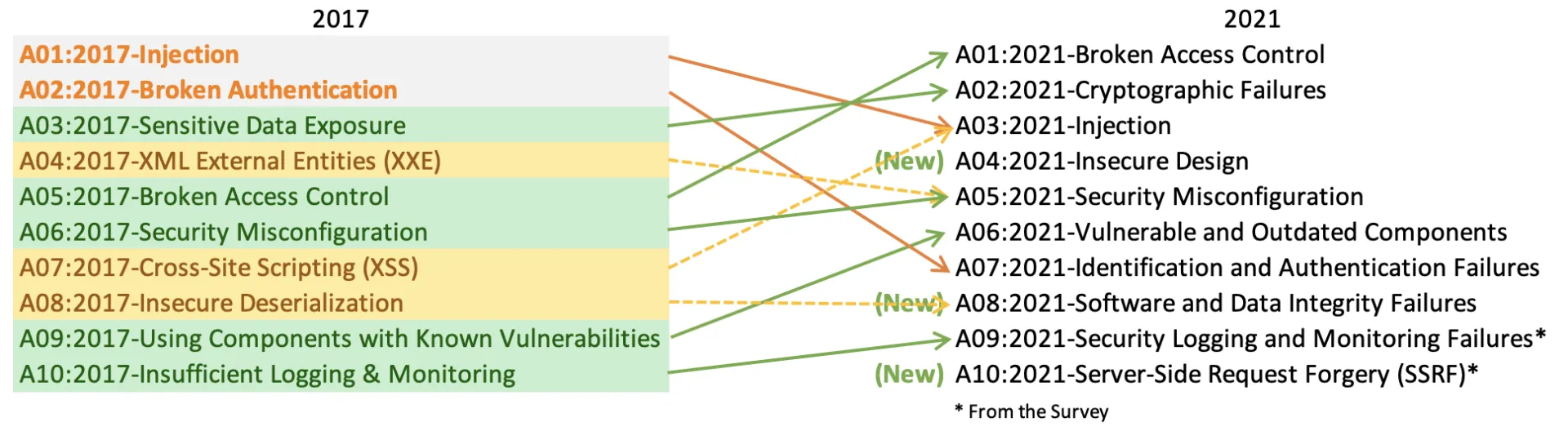

Avast blog: Here are OWASP’s top 10 vulnerabilities in 2021

Last week was the 20th anniversary of the Open Web Application Security Project (OWASP), and in honor of that date, the organization issued its long-awaited update to its top 10 exploits. The new list has been in draft form for months. The list has been updated several times since 2003, most recently in 2017. In my blog post for Avast, I probe into its development, how it differs from the older lists, and what the key takeaways are for infosec managers and corporate app developers.

The 2021 Top 10 list has sparked some controversy. Security consultant Daniel Miessler complains that the list mixes unequal elements, and calls out the insecure design item as a problem. “While everyone can agree it’s important, it’s not a thing in itself. It’s instead a set of behaviors that we use to prevent issues.” He thinks the methodology is backwards: “OWASP should start with the purpose of the project and the output you want it to produce for a defined audience, and then look at the data needed.”

Why we need girls’ STEM programs

Like many of you, I have watched the horrors unfold in Afghanistan this week. There has been some excellent reporting, particularly by Al Jazeera on their English channel, but very little said about one massive and positive change that the past 20 years has seen: hundreds of thousands of boys and girls there have received an education that was previously out of reach. I am particularly glad to see that many students have also gotten interested in STEM fields.

I was reminded of something that happened to me nine summers ago, when I was one of the judges in the annual Microsoft Imagine Cup collegiate software contest, held that year in Sydney. By chance, I ended up judging three teams that were all female students from Ecuador, Qatar and Oman. Just so you understand the process: each country holds its own competition, and that team goes on to the finals. That means that the women bested dozens if not hundreds of other teams in their respective countries.

My post from 2012 shows the Omani team (above) and how carefully they branded themselves with red headscarves (their app dealt with blood distribution, hence the color and the logos on their shirts). The Qatari team had a somewhat different style: one woman wore sweats and sneakers, one wore a full-on burka covering everything but a screen for her eyes, and the other two had modest coverings in between those points. It was my first time seeing anyone give a talk in a burka, and it was memorable. All four of them were from the same university, which was also an important point. While none of my teams were finalists, it didn’t really matter. They all were part of the 375 students who made it to Sydney, and they all got a lot out of the experience, as did I.

The reason I was thinking about the issues for women’s STEM education was this piece that I found in the NY Times about the FIRST robotics competition and the Afghan girls team. The story was written two years ago, and pre-dates what is happening now.

The girls were able to make it out of Kabul on Tuesday to Oman, where they will continue their STEM education. But there are certainly many thousands of girls who aren’t so fortunate, and we’ll see what happens in the coming weeks and months. I think many of us are literally holding our breaths and hoping for the best.

One of the reasons for the FIRST girls team’s success was great mentorship by Roya Mahboob, an Afghan expat tech entrepreneur and the team’s founder. (You might not know that Roya is a woman’s name and is Persian meaning visionary.) She isn’t the only one who got behind these girls. If you read some of their own stories, you can see that they had the support of an older generation of women who had gotten STEM education, the “tech aunties brigade” as I would call them, who were important role models. It shows that this progress happens slowly, family by family, as the old world order and obstacles are broken down bit by bit. Think about that for a moment: these girls already had older family members who were established in their careers. In Afghanistan, there isn’t a glass ceiling, but a glass floor to just gain entry.

While there is a lot to be said about whether America and the other NATO allies should have been in Afghanistan to begin with, I think you could make an argument that our focus on education was a net positive for the country and its future. From various government sources cited in this report, “literacy among 15- to 24-year-olds increased by 28 percentage points among males and 19 points among females, primarily driven by increases in rural areas.” This is over the period from 2005 to 2017. And while I couldn’t find any STEM-specific stats, you can see that education has had a big impact. I don’t know if the mistakes of our “endless war” can be absolved by this one small but shining result, but I am glad to see more all-girls STEM teams take their message around the world, and to motivate others to try to start their own STEM careers.

Linode blog: Guides to improving app security

I have written a series of blog posts to help developers improve their security posture.

Thanks to Covid challenges, there is a more complicated business environment and a larger set of risks. Supply chains are more stressed, component transportation is more complex, and new software is needed to manage these changes. Businesses have more complex compliance requirements, which also ups the risk ante, especially if they run afoul of regulations or experience a data breach. Attackers are more clever at penetrating corporate networks with stealthier methods that often go undetected for weeks or months.

Cybersecurity continues to be a challenge as adversaries come up with new and innovative ways to penetrate computer networks and steal data. One of the more popular attack methods is ransomware. There are tools to defend yourself against potential attack and techniques to strengthen your computer security posture. In this post, I describe how these attacks happen, what you can do to defend yourself and how to prevent future attacks.

The days when software developers wrote their application code in isolation from any security implications are over. Applications are exploited every minute of the day, thanks to the internet that connects them to any hacker around the planet. Application security doesn’t have to be overwhelming: there are dozens if not hundreds of tools to help you improve your security posture, prevent exploits, and reduce configuration errors that let bad actors gain unauthorized access to your network. In this post, I review the different kinds of appsec tools and best practices to improve your security posture.

Security starts with having a well-protected network. This means keeping intruders out, continuously scanning for potential breaches, and flagging attempted compromises. Sadly, there is no single product that will protect everything, but the good news is that over the years a number of specialized tools have been developed to help you protect your enterprise network. Your burden is to ensure that there are no gaps in between these various tools, and that you have covered all the important bases to keep your network secure and protect yourself against potential harm from cyber criminals. New security threats happen daily as attackers target your business and make use of inexpensive services designed to uncover weaknesses across your network or in the many online services that you use to run your business. In this post, I review the different types of tools, point out the typical vendors who supply them, and explain why they are useful to protect your network.

As developers release their code more quickly, security threats have become more complex, more difficult to find, and more potent in their potential damage to your networks, your data, and your corporate reputation. Balancing these two megatrends isn’t easy. While developers are making an effort to improve the security of their code earlier in the software life cycle, what one blogger on Twilio has called “shifting left,” there is still plenty of room for improvement. In this guide, I describe some of the motivations needed to better protect your code.

Many developers are moving “left” towards the earliest possible moment in the application development life cycle to ensure the most secure code. This guide discusses ways to approach coding your app more critically. It also outlines some of the more common security weaknesses and coding errors that could lead to subsequent problems. In this post, I look at how SQL injection and cross-site scripting attacks happen and what you can do to prevent each of them.
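As a rough illustration of the two defenses, here is a minimal Python sketch using only the standard library. The `users` table and the function names `find_user` and `render_greeting` are hypothetical, and real applications would normally lean on their web framework’s query layer and template escaping rather than hand-rolled helpers like these.

```python
import html
import sqlite3


def find_user(conn, username):
    """Look up a user with a parameterized query.

    The "?" placeholder makes the driver bind the value as data,
    so quote characters in the input cannot change the SQL statement.
    """
    cursor = conn.execute(
        "SELECT id, username FROM users WHERE username = ?",
        (username,),
    )
    return cursor.fetchone()


def render_greeting(display_name):
    """Escape user-supplied text before placing it into HTML markup."""
    return "<p>Hello, " + html.escape(display_name) + "!</p>"


if __name__ == "__main__":
    # Hypothetical in-memory table used only for this demonstration.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
    conn.execute("INSERT INTO users (username) VALUES (?)", ("alice",))
    print(find_user(conn, "alice"))
    print(find_user(conn, "' OR '1'='1"))
    print(render_greeting("<script>alert(1)</script>"))
```

With the placeholder in place, a classic injection string is simply compared against the `username` column as literal text, and a script tag in a display name is rendered as harmless text instead of being run by the browser.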

Application security products come in two umbrella groups, testing and shielding, and you need more than one. Testing products run various automated and manual tests on your code to identify security weaknesses. Application shielding products are used to harden your apps to make attacks more difficult to implement. These products go beyond the testing process and are used to be more proactive in your protection and flag bad spots as you write the code within your development environment. This guide delves into the differences between the tools and reviews and recommends a series of application security testing products.

CSOonline: Top 7 security mistakes when migrating to cloud-based apps

With the pandemic, many businesses have moved to more cloud-based applications out of necessity because more of us are working remotely. In a survey by Menlo Security of 200 IT managers, 40% of respondents said they are facing increasing threats from cloud applications and internet of things (IoT) attacks because of this trend. There are good and bad ways to make this migration to the cloud and many of the pitfalls aren’t exactly new. In my analysis for CSOonline, I discuss seven different infosec mistakes when migrating to cloud apps.

Avast blog: Covid tracking apps update

After the Covid-19 outbreak, several groups got going on developing various smartphone tracking apps, as I wrote about last April. Since that post appeared, we have followed up with this news update on their flaws. Given the interest in using so-called “vaccine passports” to account for vaccinations, it is time to review where we have come with the tracking apps. In my latest blog for Avast, I review the progress on these apps, some of the privacy issues that remain, and what the bad guys have been doing to try to leverage Covid-themed cyber attacks.

Book and courseware review: Learning appsec from Tanya Janca

If you are looking to boost your career in application security, there is no better place to start than by reading a copy of Tanya Janca’s new book Alice and Bob Learn Application Security. The book forms the basis of her excellent online courseware on the same subject, which I will cover in a moment.

Janca has been doing security education and consulting for years and is the founder of We Hack Purple, an online learning academy, community and weekly podcast that revolves around teaching everyone to create secure software. She lives in Victoria BC, one of my favorite places on the planet, and is one of my go-to resources to explain stuff that I don’t understand. She is a natural-born educator, with a deep well of resources that comes not just from being a practitioner, but from being someone who just oozes tips and tools to help you secure your stuff.

Take these two examples from her book:

- First is a series of security tools. To try to keep her book focused, she doesn’t make these recommendations there but has plenty of online places such as this link where she makes suggestions.

- Second is this tweet stream about favorite topics by others (many of which did make it into her book)

The book is both a crash course for newbies and a refresher for those who have been doing the job for a few years. I learned quite a few things, and I have been writing about appsec for more than a decade. The audience is primarily application developers, but the book can be a useful organizing tool for IT managers who are looking to improve their infosec posture, especially these days when just about every business has been penetrated with malware, had various data leaks, and could become a target of the latest Internet-based threat. Everyone needs to review their application portfolio carefully for any potential vulnerabilities since many of us are working from home on insecure networks and laptops.

Her rough organizing framework for the book has to do with the classic system development lifecycle that has been used for decades. Even as the nature of software coding has changed to more agile and containerized sprints, this concept is still worth using, if security is thought of as early in the cycle as possible. My one quibble with the book is that this framework is fine but there are many developers who don’t want to deal with this — at their own peril, sadly. For the vast majority of folks, though, this is a great place to start.

Alice and Bob, that dynamic duo of infosec who are often foils for good and bad practices, are used as teaching examples that reek of events drawn from Janca’s previous employers and consulting gigs.

For example, you’ll learn the differences between pepper and salt: not the condiments but their security implications. “No person or application should ever be able to speak directly to your database,” she writes. The only exceptions are your apps or your database admins. What about applications that make use of variables placed in a URL string? Not a good idea, she says, because a user could see someone else’s account, or the practice could leave your app open to a potential injection attack. “Never hard code anything, ever” is another suggestion because by doing so you can’t trust the application’s output, and the values that are present in your code could compromise sensitive data and secrets.
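For readers who haven’t met the terms: a salt is a random value generated per password and stored next to the hash, while a pepper is a secret shared across all passwords and kept outside the database. The Python sketch below is one common way to combine them, not necessarily the approach the book describes. The function names, the `APP_PEPPER` environment variable, and the iteration count are all assumptions for illustration. Reading the pepper from the environment also follows the advice against hard-coding secrets.

```python
import hashlib
import hmac
import os
import secrets

# Assumption: an illustrative work factor; tune it for your own hardware.
ITERATIONS = 200_000


def load_pepper():
    """Read the pepper from the environment instead of hard-coding it.

    APP_PEPPER is a hypothetical variable name used for this sketch.
    """
    value = os.environ.get("APP_PEPPER")
    if not value:
        raise RuntimeError("APP_PEPPER is not set")
    return value.encode("utf-8")


def hash_password(password, pepper, salt=None):
    """Return (salt, digest) for a password.

    The salt is random per password and is stored alongside the digest.
    The pepper is mixed in first with HMAC and is never stored in the database.
    """
    if salt is None:
        salt = secrets.token_bytes(16)
    peppered = hmac.new(pepper, password.encode("utf-8"), hashlib.sha256).digest()
    digest = hashlib.pbkdf2_hmac("sha256", peppered, salt, ITERATIONS)
    return salt, digest


def verify_password(password, pepper, salt, expected_digest):
    """Recompute the digest and compare it in constant time."""
    _, digest = hash_password(password, pepper, salt)
    return hmac.compare_digest(digest, expected_digest)
```

At sign-up you would call `hash_password` and store the returned salt and digest, and at login you would call `verify_password` with the stored values. Because the pepper lives outside the database, a stolen table of salts and digests is harder to attack on its own.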

“When data is sensitive, you need to find out how long your app is required to store it and create a plan for disposing of it at the end of its life.” Another great suggestion for testing the security of your design is to look for places where there is implied trust, and then remove that trust and see what breaks in your app.

Never write your own security code if you can make use of code that is part of your app dev framework. And spend time on improving your “soft skills” as a developer, meaning learning how to communicate with your less-technical colleagues. “This is especially true, when you feel that the sky is falling and you aren’t getting any management buy-in for your ideas.”

One topic that she returns to frequently is what she calls technical debt. This is a sadly all-too-common situation, whereby programmers make quick and dirty development decisions. It reflects the implied costs of reworking the code in your program due to taking shortcuts, shortcuts that eventually will catch up with you and have major security implications. She talks about how to be on the lookout for this style of thinking and how to avoid it.

Let’s move on to talk about the online classes.

The classes will cost $999 (with an option to interact directly with her for 30 minutes for an additional $300) but are certainly worth it. They cover three distinct areas, all of which are needed if your code is going to stand up against hackers and other adversaries.

The first course is for beginners, and covers the numerous areas of appsec that you will need to understand if you are going to be building secure apps from scratch, or trying to fix someone else’s mess. Even though I have been testing and writing about infosec for decades, I still managed to learn something from this class.

If you are not a beginner, and if you are just aiming to learn more for yourself, then you should probably just focus on the third class. The second class goes into more detail about how to create a culture at your organization where appsec is part of everyone’s job. If you aren’t going to be managing a development team, you might want to return to this class later on.

There are certainly many sources of online education, but surprisingly, few offer the range and depth that Janca has put together. Google and Microsoft have free classes to show you how to make use of their clouds, but they aren’t as comprehensive nor as useful, especially for beginners who may not even know how to frame the right questions, or even assemble their goals for what they want to learn about appsec. And both OWASP and SANS, which normally are my go-to places to learn something technical, are also deficient on the practice of appsec, although they both have developed many open-source tools and cheat sheets and other supporting things that are used in developing secure apps. Thus Janca’s courseware fills an important missing niche.

The textbook for all three classes is her excellent Alice and Bob book mentioned above. Yes, you could probably learn some of the things by just reading the book without taking the classes, but you would have to work a lot harder, especially if you are more of an auditory learner. Watching and listening to Janca explain her way through numerous different tools that you’ll need to build your apps securely is worth the price of the courses: you are in the presence of a master teacher who knows her stuff.

One thing missing from the trio of classes is any product-specific discussion. (She covers this separately.) I realize why she did this, but think that eventually you will be frustrated and just wish you could have a little more context of how a piece of defensive or detection software actually works, because that is how I, as an experiential learner, figure these things out.

All in all, I highly recommend the sequence, with the above caveats. We all need to move in the direction of making all of our apps more secure, and Janca’s courseware should be required for anyone and everyone.

Apple’s App Store: monopoly or digital mall?

Another salvo in the legal battle between Apple and its developers was fired last month. The EU Commission is following up on a complaint from Spotify that says Apple’s practices are anti-competitive and are designed to block the popular music streaming service. Apple has two policies: one that prevents app creators from linking payments from within the app other than subscriptions, and another that limits users from making payments other than in-app purchases. These two policies result in developers having to pay Apple commissions on these payment streams, which amount to nearly a third for the first year and 15% in subsequent years.

This follows the US Supreme Court ruling that iPhone customers could sue Apple for allegedly operating the App Store as a monopoly that overcharges people for software. So far no action has happened as a result of this case and legal experts say it will probably take several years to wind its way through the courts. There was another lawsuit filed in US District Court in San Jose by two app developers that also accuse Apple of being a monopolist.

Andy Yen of ProtonMail posted this blog entry last month, saying “We have come to believe Apple has created a dangerous new normal allowing it to abuse its monopoly power through punitive fees and censorship that stifles technological progress, creative freedom, and human rights. Even worse, it has created a precedent that encourages other tech monopolies to engage in the same abuses.” He states further that “It is hard to stay competitive if you are forced to pay your competitor 30% of all of your earnings.”

Of course, Apple disputes all of these charges, saying that it is just a digital mall where the tenants (the developers) are just paying rent. Nevertheless, it is the only mall when it comes to providing iOS apps. Apple claims it needs some compensation to screen out malware and badly coded apps and claims that the vast majority of apps in its store are free with no payments collected from developers. “We only collect a commission from developers when a digital good or service is delivered through an app.” The company explained its practices in this post in May, and cited a number of instances where third-party app developers compete with its own apps such as iCloud storage, the Camera, Maps and Mail apps.

Tim Cook thinks nobody “reasonable is going to come to the conclusion that Apple’s a monopoly. Our share is much more modest. We don’t have a dominant position in any market.” I disagree. From where I sit this seems very similar to what Microsoft went through back in the 1990s. You might remember that the US courts ruled that Microsoft held a monopoly with Windows and had engaged in anti-competitive practices.

There are some differences between Microsoft then and Apple now: Apple doesn’t have a dominant share in mobile OS outside of the US (Google’s Android has 75% of the market), whereas Microsoft had 90% of the PC OS market. But still, the Apple App Store represents a high barrier for app developers to enter, and consumers do suffer as a result.