A few weeks back, I wrote about a friend of mine, whom I called Jane, who had suffered a phishing attack that led to her losing more than $30,000 in a pig butchering scheme. She called me last week and stopped by to show me that, thanks to her homeowners’ insurance policy, she was reimbursed for $25,000 of her losses. This was because of an endorsement that included personal cyber insurance. This is the first time that I have ever heard of such coverage, so naturally I wanted to take a deeper dive.

Probably the best starting point is this 2023 Nerdwallet blog, which also helpfully links to the various insurers. It shows you the numerous perils that could be covered by any policy and makes the point that this insurance can’t cover things that happened before the policy was in force. Another good source is this 2023 blog in Forbes. If you scroll down past the come-on links, you will see the perils listed and some other insurers mentioned.

This complexity is both good and bad for consumers who are trying to figure out whether to purchase any cyber insurance. It is good because the insurers recognize that cyber is not a single category, like insuring a fur coat or some other physical item. If your washing machine springs a leak and you have coverage for water damage (something that happened to me a few years ago), it is nice to be insured and be reimbursed. Whether you get the level of reimbursement that will enable you to rip out your floors, replace them with something of approximate value, and cover your expenses for moving your stuff and living in a hotel for a couple of weeks is up to the insurer. And whether your claim will eventually trigger your insurer to drop you and place you on a block list for the next five years is another story. But you can still purchase coverage, and the coverage is, for the most part, well defined.

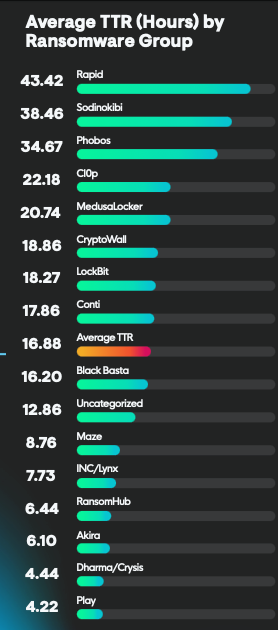

But cyber insurance is not well defined, because all these various categories of perils can spill over into one another. If your computer is infected with malware and the attackers ultimately get access to your bank accounts, how do you prove that causality to the satisfaction of the insurer? What happens if you are faced with a demand to pay a ransom to get access to your data? Or if you think you are sending funds to help a family member or co-worker in distress, but the recipient turns out to be a criminal? Many of the problems happen at that hairy intersection between technology and human error.

Before you go any further down this path, I want to take a moment and describe an entirely different approach. What if the financial vendors took a more proactive role in stopping cyber fraud? It is happening, albeit slowly and only in certain specific situations.

One such example is Coinbase, which wrote about what it is doing in a February blog here. The post presents a series of situations where social engineering played a role in a particular fraudulent scheme, and it is blunt in its advice: “Coinbase will never make an unsolicited phone call to a customer. Anyone who calls you indicating that they are from Coinbase and wants you to move assets is a scammer. Hang up the phone!” There are other recommendations that span the technical spectrum, such as using better authentication factors and rotating API keys. As you may or may not know, Coinbase is deeply involved in crypto transactions, so this is a natural fit.

Contrast this with Bank of America, just to choose a bank at random. If you know where to look, you can review five red flags used by scammers, including being contacted by someone unexpectedly, being pressured to act immediately, being asked to pay in an unusual way, or being asked for personal information. Unfortunately, they only allow you to specify two hardware security keys, which seems to go against best security practices.

And this is why we are in the current state of affairs with scammers. Incomplete, imperfect solutions have enabled the scammers to build multi-million dollar scam factories that prey on us all the time. Just this past weekend, both my wife and I got text reminders that the balance on our EZ Pass accounts was low. There were only two problems: neither of us uses EZ Pass or even lives near anyplace we could use it, and both texts originated from a French phone number. Sacre bleu! This is an attack that has been around for some time but recently resurfaced.

If you have decided to purchase this type of insurance for yourself or your family, there are two basic paths. The first is to see if you can add a cyber “endorsement” to your existing homeowners or renters policy. If this is possible, decide how much coverage you need. Many insurers have these programs, and here it pays to read the fine print and understand when coverage will kick in and when it won’t.

If your insurer doesn’t have this capability, the second path is to go with one of two specialist cyber policies. Nerdwallet summarizes these offerings from NFP (which calls its product Digital Shield) and Blink, a division of Chubb. USAA (my current home insurer) works with Blink, for example, and offered me an add-on policy for $19/month. Blink doesn’t cover fraud by malicious family members, cyberbullying by employers, a widespread cyber-attack, or some other situations. From my reading of NFP’s Digital Shield webpage, it seems like these situations are covered by its policies. However, I couldn’t get anyone from NFP to return my calls.

The bottom line? While my friend was able to benefit from her cyber policy, you might not. Visesh Gosrani, a UK-based cyber insurance expert, told me, “The limits these policies come with are normally going to be disappointing. The reason these policies are being bundled is that in the future homeowners are expected to realize that cyber insurance is important and more open to increasing their coverage if they have already had the policy. The short-term risk is that they end up being disappointed by the policy that they had for free or very little cost.”