I have split my years between the suburbs and urban areas. That doesn’t count the two times I lived in the LA area, which I don’t quite know how to categorize. I have learned that I like living in what urbanist researchers call a “15-minute neighborhood,” meaning that you can walk or bike to many of the things you need for daily life within that time. That works out to a walk of about a mile or so, or a bike ride of perhaps three miles. Somewhat tongue-in-cheek, I also describe my St. Louis neighborhood as walk-to-Whole-Foods and walk-to-hospital.

Why is this important? Several reasons. First, I don’t like being in a car. During my last stint living in LA, I had a 35-mile commute that could take anywhere from 40 minutes to several hours, depending on traffic and accidents. At my wife’s suggestion, I turned it into a 27-mile drive and rode my bike for the last (or first) leg. That lengthened the commute, but it got me riding every day. Now my commute goes from one bedroom (the one I sleep in) to another (the one I work in). Some weeks go by without my using the car at all.

Second, I like being able to walk to many city services beyond Whole Foods and the doctors. When the weather is nice, I bike in Forest Park, which is about half a mile away and a real joy for reasons that go well beyond its network of roads and paths.

This research paper, published last summer and titled “A universal framework for inclusive 15-minute cities,” proposes ways to quantify proximity across cities and takes a deep dive into specifics. It comes with an interactive map of the world’s urban areas that I could spend hours exploring. Cities here in the States are mostly red, while those in Europe and a few other places are mostly blue. The colors indicate not political leanings but how close most of a city’s neighborhoods come to the 15-minute ideal. Here is a screencap of the Long Island neighborhood where I lived for many years. The area shown includes both my home and office locations and is, for the most part, a typical suburban slice.

The cells (which in this view represent the area walkable from a center point) are mostly red here. Many commuters who worked in the city would take issue with these scores: this part of Long Island has one of the fastest travel times into Manhattan, and in my case, I could walk to the train in 15 minutes or so.

The paper raises an important point: to be useful and equitable, cities have to be inclusive and spread services across their footprints. Most don’t come close to this ideal. For the 15-minute figure to apply, density has to be high enough that people don’t need to drive. As the authors write, “the very notion of the 15-minute city can not be a one-size-fits-all solution and is not a viable option in areas with a too-low density and a pronounced sprawl.”

Ray Delahanty makes this point in his latest video, which focuses on Hoboken, New Jersey. (You should subscribe to his channel, where he covers other urban transportation planning issues. His videos have a nice mix of entertaining travelogue and acerbic wit.)

Maybe what we need aren’t just more 15-minute neighborhoods, but better distribution of city services.

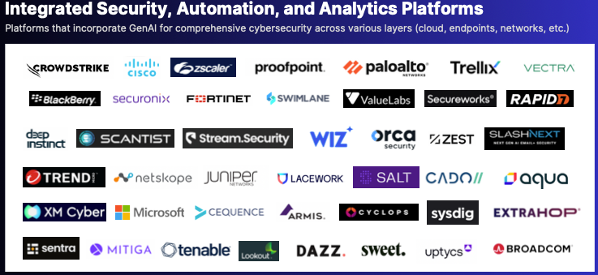

The class of products called SOAR, for Security Orchestration, Automation and Response, has undergone a major transformation in the past few years. Features tied to each of the four words in its name, once exclusive to SOAR, have bled into other tools. For example, response capabilities can now be found in endpoint detection and response tools. Orchestration is now a joint effort with