In our story last month, we covered various crowdsourced community methods, looking at the combination of Kaggle contests and Greenplum analytics. That collaboration is not the only example of communities leveraging their own people and data. Some of the most illustrative examples are, quite literally, the specialized maps that people are building for themselves.

A map is a powerful data visualization tool: at a glance, you can see trends, spot clusters of activity, and track events. Edward Tufte, the data visualization expert, explains how one doctor’s mapping of a cholera outbreak in 1850s London traced the cause of the epidemic, a bacterium transmitted through infected water, to a particular street corner water pump. The good doctor didn’t use Hadoop; he used shoe leather to figure out where the people who were getting sick got their water. What is interesting is that this happened long before the actual bacterium was discovered in the 1880s.

Enough of the history lesson. Let’s see how crowdmapping and big data science are bringing new ways to visualize data in a more meaningful context.

As one example, last month I was visiting our nation’s capital and noticed racks of bicycles on many streets, ready to be rented for a few dollars a day. These bikesharing programs are becoming popular in many cities, and New York is set to roll out its own sometime soon. The DC program has been in place for about a year, with more than 1600 bikes spread across the city in 175 different locations. Now the operation, called Capital Bikeshare, wants to expand across the Potomac River into the Arlington, Virginia suburbs. So they decided to crowdsource where to put the new locations, and set up a site to collect the suggestions. On the map you can see the locations that the community has suggested and those that county planners have recommended, along with, of course, the locations of the existing stations. You can also leave comments on others’ suggested locations. It is a great idea, and one that wouldn’t have been possible just a few years ago, when these mapping tools were expensive or finicky to code up.

Some other successful crowdmaps can be found in unusual locations. While we traditionally think that mapping requires a lot of computing power and modern data collection methods, crowdmapping is also happening in many places in the third world where there is little continuous electricity, let alone Internet access.

For example, outside of Nairobi, Kenya, a neighborhood called Kibera was a blank spot on most online maps until a few years ago. Then a group of residents decided to map their own community using online open source mapping tools. It has grown into a complete interactive community project, and important locations such as running water and clinics are now shown on the map quite accurately.

Another third-world crowdmap is just as essential as the Kibera project. This effort, called Women Under Siege, has been documenting sexual violence attacks in Syria. The site’s creators state on their home page, “We are relying on you to help us discover whether rape and sexual assault are widespread–such evidence can be used to aid the international community in grasping the urgency of what is happening in Syria, and can provide the base for potential future prosecutions. Our goal is to make these atrocities visible, and to gather evidence so that one day justice may be served.” You can filter the reports by the type of attack or by neighborhood, and also add your own report to the map.

One of the first community mapping efforts was started by Adrian Holovaty in 2007 in Chicago, mapping city crime reports to the local police precincts. Since then the Everyblock.com site has been purchased by MSNBC and expanded to 18 other cities around the US, including Seattle, DC, and Miami. “Our goal is to help you be a better neighbor, by giving you frequently updated neighborhood news, plus tools to have meaningful conversations with neighbors,” the site’s About page states. You can set up a custom page for your particular neighborhood and get email alerts when crime reports and other hyperlocal news items are posted to the site. The site now pulls together a variety of information besides crime reports, including building permits, restaurant inspections, and local Flickr photos. This shows the power of the map interface: it makes this kind of information come alive and become meaningful to those who live near these events.
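For readers curious about what "mapping crime reports to precincts" involves, here is a minimal Python sketch of one common approach, a ray-casting point-in-polygon test. It is not how Everyblock does it; the precinct outlines, the report records, and the function names below are all made up for illustration.

```python
from collections import Counter


def point_in_polygon(lon, lat, polygon):
    """Ray-casting test: True if (lon, lat) falls inside the polygon.

    polygon is a list of (lon, lat) vertices in order; the last vertex
    is implicitly connected back to the first.
    """
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does a horizontal ray heading east from the point cross this edge?
        if (y1 > lat) != (y2 > lat):
            x_cross = x1 + (lat - y1) * (x2 - x1) / (y2 - y1)
            if lon < x_cross:
                inside = not inside
    return inside


def assign_precinct(lon, lat, precincts):
    """Return the name of the first precinct containing the point, or None."""
    for name, polygon in precincts.items():
        if point_in_polygon(lon, lat, polygon):
            return name
    return None


def count_by_precinct(reports, precincts):
    """Tally reports per precinct (None collects reports outside every precinct)."""
    return Counter(
        assign_precinct(r["lon"], r["lat"], precincts) for r in reports
    )


# Hypothetical precinct outlines and reports, not real city data.
PRECINCTS = {
    "North": [(0.0, 1.0), (2.0, 1.0), (2.0, 2.0), (0.0, 2.0)],
    "South": [(0.0, 0.0), (2.0, 0.0), (2.0, 1.0), (0.0, 1.0)],
}
REPORTS = [
    {"type": "theft", "lon": 0.5, "lat": 1.5},
    {"type": "vandalism", "lon": 1.5, "lat": 0.25},
    {"type": "theft", "lon": 1.0, "lat": 0.75},
    {"type": "theft", "lon": 5.0, "lat": 5.0},
]

if __name__ == "__main__":
    for precinct, total in count_by_precinct(REPORTS, PRECINCTS).items():
        print(precinct, total)
```

A production site would more likely lean on a geospatial library or database for this step, but the idea is the same: each report becomes a point, each precinct a shape, and the map is the result of matching one to the other.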

Another effort is called SeeClickFix, which offers smartphone apps that let citizens who see a problem report it to their local government and provide detailed information. It was most recently used by various communities that were hard hit by Superstorm Sandy in October. One example is a collection of issues reported in the Middletown, Conn., area.

Google put together its own Sandy Crisis Map, which displays open gas stations and other data points to help storm victims find shelter or resources.

Communities are what you define them to be: they are not always made up of people living near each other, but sometimes of people who share common interests. Our next map is from California’s Napa Valley, home to 900 or so wineries within a few miles of one another. David Smith put together a map that shows each winery, when it is open, whether appointments are required for tastings, and other information. Once he got the project started, Barry Rowlingson added to it, using R to help with the statistics. What makes this fascinating is that these are just a couple of guys using open source APIs to build their maps and make it easier to navigate around Napa’s wineries.

Here is another great idea for mapping very perishable data. Several cities have implemented real-time transit maps that show you how long you have to wait for your next bus or streetcar. Dozens of transit systems are part of NextBus’s website, which mostly focuses on US-based locations. But there are plenty of others, including maps for Toronto and Helsinki. You can mouse over the icons on the map to get more details about a particular vehicle. The best thing about these sorts of sites is that they are very simple to use and encourage people to take transit, since riders can see quite readily when the next bus or tram will arrive at their stop.
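The arithmetic behind a "next bus in N minutes" display is simple, as this small Python sketch suggests. It assumes nothing about how NextBus or any transit agency actually publishes its data; the function name and the sample arrival times are invented for illustration.

```python
from datetime import datetime


def minutes_until_next(predicted_arrivals, now):
    """Return whole minutes until the soonest arrival at or after `now`.

    predicted_arrivals is a list of datetime objects for one stop.
    Returns None when no upcoming arrival is known.
    """
    upcoming = [t for t in predicted_arrivals if t >= now]
    if not upcoming:
        return None
    wait = min(upcoming) - now
    return int(wait.total_seconds() // 60)


if __name__ == "__main__":
    # Hypothetical predictions for a single stop, not a real feed.
    now = datetime(2012, 12, 1, 8, 0)
    arrivals = [
        datetime(2012, 12, 1, 7, 55),  # already departed, so it is ignored
        datetime(2012, 12, 1, 8, 7),
        datetime(2012, 12, 1, 8, 19),
    ]
    print(minutes_until_next(arrivals, now))
```

What makes the data perishable is the `now` argument: the same list of predictions gives a different answer every minute, which is why these maps have to refresh constantly.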

If I have stimulated your mapping appetite, know that there are lots of other crowdmap sites, including Crowdmap.com, ushahidi.com and Openstreetmap.org, along with efforts from Google. They are all worthy projects, and combine a variety of geo-locating tools with wiki-style commenting features and interfaces to attach programs to extend their utility.

If you want to learn more, Google’s Mapmaker blog offers a Web-based tutorial that shows you the simple steps involved in creating your own crowd map and how to find the data to begin your explorations. There is a similar tutorial for CrowdMap. Good luck with finding your own map to some interesting data relationships.

One of the biggest problems for ecommerce has always been what happens when customers want to mix your online and brick and mortar storefronts. What if a customer buys an item online but wants to return it to a physical store? Or wants an item that they see online but isn’t in stock in their nearest store?

One of the biggest problems for ecommerce has always been what happens when customers want to mix your online and brick and mortar storefronts. What if a customer buys an item online but wants to return it to a physical store? Or wants an item that they see online but isn’t in stock in their nearest store?

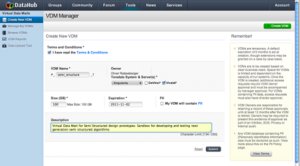

When building Big Data apps, you need to conduct a test run with someone else’s data before you put the software into production. Why? Because using an unfamiliar dataset can help illuminate any flaws in your code, perhaps making it easier to test and perfect your underlying algorithms. To that end, there are a number of public data sources freely available for use. Some of them are infamous, such as the

When building Big Data apps, you need to conduct a test run with someone else’s data before you put the software into production. Why? Because using an unfamiliar dataset can help illuminate any flaws in your code, perhaps making it easier to test and perfect your underlying algorithms. To that end, there are a number of public data sources freely available for use. Some of them are infamous, such as the