Every year, hundreds of college students compete in the National Collegiate Cyber Defense Competition (CCDC). Teams from around the country begin with regional competitions, and the regional winners go on to compete for bragging rights and cash prizes at the national event, held in Orlando at the end of April. A friend of mine from the Seattle area, Stephen Kangas, was one of this year’s volunteers, all of whom are IT security professionals. I spoke to him this week about some of his experiences.

The event simulates the defense of a corporate network and pits two basic sides against each other: the defenders, or blue teams, made up of college students, and the attackers, or red teams. There are also other groups, such as the judges and the “orange team,” which I will get to in a moment. A team of judges with body cams is assigned to each blue team to record the state of play, and these recordings are used to tally the final point totals. Points are also awarded based on the number of services that remain online and unhacked, as well as for systems that were hacked and then recovered. Both sides have to file incident reports, which are also evaluated as part of the scores.

Stephen has participated in the competition for several years as a mentor and coach for a local high school team that competes in the high school division. This year he was on one of the red teams attacking one of the college blue teams. He holds the Certified Ethical Hacker credential and is working toward an MS in Cybersecurity. For decades he has held various IT roles, both on the vendor side and as a consultant, including a focus on information security. “I wanted to expand my knowledge in this area. Because most of my experience has been on the defensive side, I wanted to get better, and for that you have to know about the strategy, tools, and tactics used by the offensive black hats out there.”

The event takes place over a weekend, and the red team attackers take points away from the defenders by penetrating their corresponding blue team’s network and “pwning” its endpoints, servers, and other devices. “I was surprised at how easy it was to penetrate our target’s network initially. People have no idea how vulnerable they are as individuals, and it is becoming easier every day. We need to be preparing and helping people to develop the knowledge and skills to protect us.” His red team included three others with complementary specializations, such as email, web, and SQL server penetration, and expertise in different operating systems. Each of the 30 red team volunteers brings their own laptop, but they all use the same set of hacking tools (including Kali Linux, Cobalt Strike, and Empire, among others), and the teams communicate via various Slack channels during the event.

The event has an overall red team manager who takes notes and shares tips with the different red teams. Each blue team runs an identical VM copy of the scenario, with the same vulnerabilities and misconfigurations. This year it was a fake prison network. “We all start from the same place. We don’t know the network topology, which mimics the real-world situation where networks are poorly documented (if at all).” Just as in the real world, blue teams were given occasional injects (tasks handed out mid-competition), such as deleting a terminated employee’s account or updating a prisoner’s release date; the red teams were likewise given occasional injects, such as finding and pwning the SQL server and changing the release date to the current day.

In addition to the red and blue teams, there is a group called the orange team that adds a bit of realism to the competition. These aren’t technical folks; they are more akin to drama students or improv actors who call the help desk with problems (“I can’t get my email!”) and follow scripted suggestions designed to put more stress on the blue teams as they defend their networks. The judges award or deduct blue team points depending on how the teams handle these help desk calls.

Adding further realism, members of each red team call the help desk during the event, pretending to be employees and trying to social engineer the staff into giving up information. “My team broke in and pwned their domain controllers. We held them for ransom after locking them out of their domain controller, which we returned in exchange for keys and IP addresses to some other systems. Another team called and, as ransom, asked for the help desk guy to sing a pop song. They had to sing well enough to get their passwords back.” His team also discovered several Linux file shares containing employee and payroll PII.

His college’s team came in second, so they are not going on to the nationals (the University of Washington won first place). Still, all of the college students learned a lot about how to defend better, which they can use when competing next year and ultimately in their jobs after graduation. Likewise, the professionals on the red teams learned new tools and techniques from each other that will benefit them in their work. It was an interesting experience, and Stephen intends to volunteer for the Pacific Rim regional CCDC again next year.

Earlier this month, RSA president Rohit Ghai opened the RSA Conference in San Francisco with some stirring words about understanding the trust landscape. The talk is both encouraging and depressing: encouraging for what it offers, and depressing for how far we have yet to go to fully realize this vision.

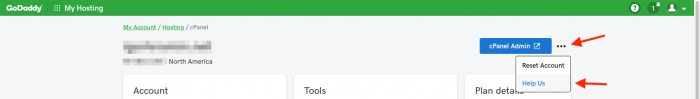

If you use GoDaddy hosting, go to your cPanel hosting portal, click the three small dots at the top of the page (as shown above), click “help us,” and make sure you have opted out.