An innocent hike opens this mystery by Allison Brennan, in which a woman is unexpectedly killed somewhere in the southern desert near Patagonia, AZ. Soon an entire FBI field team is investigating her apparent murder, and before long we learn to respect this motley undercover group, which includes agents posing as bartenders, factory workers, and others brought in to solve the case. What I liked about this novel was its very realistic treatment (or so I imagined) of how the team works together, including a love affair between team members that isn’t entirely kosher. There are lots of bodies as the book progresses, and lots of bruised egos too, some deservedly so. While Patagonia is a real town, the rest of the book is the author’s invention, and she pulls it off quite nicely. Highly recommended.

Book review: The Jigsaw Man

Detective Inspector Anjelica Henley has a problem. A new series of copycat murders mimics the work of a killer she previously put behind bars. She is also in love with one of her bosses at her police unit, to the concern of her husband. After fending off an attack by the original killer, she returns to duty to deal with the copycat. The bodies start to pile up and her husband wants her to quit the force. “I want the job and I want my family. It just seems like I can’t have both at the moment,” she says at one point. Their marital conflict drives some of the more interesting plot points as Henley zeroes in on the killer.

It is a classic situation, but artfully told with some great characters and plot points. Even though I am not very familiar with the London locales, the story kept me engaged until the end. For thriller fans, I would highly recommend this book by Nadine Matheson.

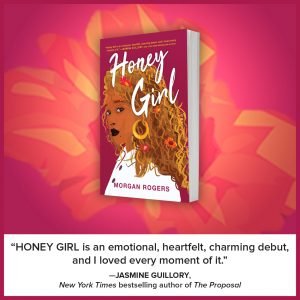

Book review: Honey Girl by Morgan Rogers

The characters in this novel are smitten with love and don’t know how to process their feelings, thanks to a number of missteps throughout their childhoods. The cast is mostly black or brown lesbians, which adds a welcome dimension for those of us who would like to read novels about such characters. I found myself immediately warming to the opening premise: two women vacationing in Vegas get drunk and then married despite having just met. And while the situation could easily have degenerated into a bad “Hangover” spin-off, the book remains true to its characters and brings us deeply into their world. The couple is an interesting pair: a recently minted astronomy PhD and a radio talk show host who reminded me of Alison Steele from my youthful days listening to WNEW-FM. The book will challenge you to think about love and loss and conflict and reconciliation, and I highly recommend it. You can buy Honey Girl here.

Book review: Tom Clancy’s Net Force Attack Protocol

This is the latest in a series of books written by others, in this case by Jerome Preisler. I had high hopes for this book, which is part of a series about a new cybersecurity-enhanced SEAL Team type of military commando unit. Instead, it shows how good an author Clancy was, and how Preisler is just a pale imitation. Like the “Rocky” movie sequels, the book picks up where previous books end, so you really can’t get full value from it if you read it as a standalone volume. And it just ends at some random plot point, without really resolving many of the characters’ situations. Like Clancy, it is filled with jargon, weaponry, mil-speak, and plenty of explosions and gunplay. Unlike Clancy, none of this really makes much sense, is essential to moving the plot along, or is even mildly interesting. As someone who works in cybersecurity, I thought its treatment of the IT issues was juvenile and superficial and didn’t draw me into the narrative or characters. Plus, actual advanced cybersec defenders are less dependent on those macho things that shoot bullets and more on using their brains and computer skills. If you are hungry for more Clancy, pick up one of his old classics like “Red October.” Or if you want to read a series that has much better character and plot development and shows how an actual cybersec team works, check out this series. In either case, you should give this Protocol a pass.

Book review: Mirror Man

At first glance, the plot line of this book seems tired: part clash of the clones, part “Total Recall” memory conflicts, part retread sci-fi mystery. But as you get drawn into the book, which concerns a pharma marketing exec who volunteers for an illegal cloning experiment, replacing himself with a clone for a year, you find out it is quite original and intriguing. The clone, and the original who is locked away, are both carefully observed, although not as carefully as the scientists think. By the time you reach the end, you’ll have thoroughly enjoyed this book, and the combination of sci-fi and mystery is a nice balance. Highly recommended, and I might have to rewatch the original Total Recall just to savor some of those memories, wholesale.

Here is an excerpt from the new Jane Gilmartin novel.

Book review: The End of Trust

Last week the Electronic Frontier Foundation published an interesting book called The End of Trust. It was published in conjunction with the writing quarterly McSweeney’s, to which I have long been a subscriber and whose more usual short-fiction collections I enjoy. This issue is its first entirely non-fiction effort, and it is worthy of your time.

There are more than a dozen interviews and essays from major players in the security, privacy, surveillance and digital rights communities. The book tackles several basic issues. First is the fact that privacy is a team sport, as Cory Doctorow opines, meaning we have to work together to ensure it. Second, there are numerous essays about the role of the state in a society that has accepted surveillance, and the equity issues surrounding these efforts. Third, several pieces examine the outcomes and implications of outsourcing digital trust. Finally, various authors explore the difference between privacy and anonymity and what this means for our future.

While you might be drawn to articles from notable security pundits, such as an interview where Edward Snowden explains the basics behind blockchain and one where Bruce Schneier discusses the gap between what is right and what is moral, I found myself reading other, less famous authors who had a lot to say on these topics.

Let’s start off by saying there should be no “I” in privacy, and we have to look beyond ourselves to truly understand its evolution in the digital age. The best article in the book is an interview with Julia Angwin, who wrote an interesting book several years ago called Dragnet Nation. She says “the word formerly known as privacy is not about individual harm, it is about collective harm. Google and Facebook are usually thought of as monopolies in terms of their advertising revenue, but I tend to think about them in terms of acquiring training data for their algorithms. That’s the thing that makes them impossible to compete with.” In the same article, Trevor Paglen says, “we usually think about Facebook and Google as essentially advertising platforms. That’s not the long-term trajectory of them, and I think about them as extracting-money-out-of-your-life platforms.”

Role of the state

Many authors spoke about the role that law enforcement and other state actors have in our new always-surveilled society. Author Sara Wachter-Boettcher said, “I don’t just feel seen anymore. I feel surveilled.” Thenmozhi Soundararajan writes that “mass surveillance is an equity issue and it cuts across the landscape of race, class and gender.” This is supported by Alvaro Bedoya, the director of a Georgetown Law School think tank. He took issue with the statement that everyone is being watched, because some are watched an awful lot more than others. With new technologies, it is becoming harder to hide in a crowd, and thus we have to be more careful about crafting new laws that allow the state access to this data, because we could lose our anonymity in those crowds. “For certain communities (such as LGBTQ), privacy is what lets its members survive. Privacy is what lets them do what is right when what’s right is illegal. Digital tracking of people’s associations requires the same sort of careful First Amendment analysis that collecting NAACP membership lists in Alabama in the 1960s did. Privacy can be a shield for the vulnerable and is what lets those first ‘dangerous’ conversations happen.”

Scattered throughout the book are descriptions of various law enforcement tools, such as drones, facial recognition systems, license plate readers and cell-site simulators. While I knew about most of these technologies, seeing them collected together in this fashion makes them seem all the more insidious.

Outsourcing our digital trust

Angwin disagrees with the title and assumed premise of the book, saying the point is more about the outsourcing of trust than its complete end. That outsourcing has led us to prefer trusting data over human interactions. As one example, consider the website Predictim, which scans a potential babysitter or dog walker to determine if they are trustworthy and reliable using various facial recognition and AI algorithms. Back in the pre-digital era, we asked for personal references and interviewed our neighbors and colleagues for this information. Now we have the Internet to vet an applicant.

When eBay was just getting started, it had to build its own trust proxy so that buyers would feel comfortable with their purchases. It came up with early reputation algorithms, which today have evolved into the star ratings that Uber and Lyft use for their drivers and passengers. Some of the writers in this book mention how blockchain-based systems could become the latest incarnation of outsourced trust.

Privacy vs. anonymity

The artist Trevor Paglen says, “we are more interested not so much in privacy as a concept but more about anonymity, especially the political aspects.” In her essay, McGill ethics professor Gabriella Coleman says, “Anonymity tends to nullify accountability, and thus responsibility. Transparency and anonymity rarely follow a binary moral formula, with the former being good and the latter being bad.”

Some authors explore the concept of privacy nihilism, or disconnecting completely from one’s social networks. This was explored by Ethan Zuckerman, who wrote in his essay: “When we think about a data breach, companies tend to think about their data like a precious asset like oil, so breaches are more like oil spills or toxic waste. Even when companies work to protect our data and use it ethically, trusting a platform gives that institution control over your speech. The companies we trust most can quickly become near-monopolies whom we are then forced to trust because they have eliminated their most effective competitors. Facebook may not deserve our trust, but to respond with privacy nihilism is to exit the playing field and cede the game to those who exploit mistrust.” I agree, and while I am not happy about what Facebook has done, I am also sticking with them for the time being too.

This notion of the relative morality of our digital tools is also taken up in a recent NY Times op-ed by NYU philosopher Matthew Liao entitled Do you have a moral duty to leave Facebook? He says that the social media company has come close to crossing a “red line,” but for now he is staying with them.

The book has a section for practical IT-related suggestions to improve your trust and privacy footprint, many of which will be familiar to my readers (MFA, encryption, and so forth). But another article by Douglas Rushkoff goes deeper. He talks about the rise of fake news in our social media feeds and says that it doesn’t matter what side of an issue people are on for them to be infected by the fake news item. This is because the item is designed to provoke a response and replicate. A good example of this is one individual recently mentioned in this WaPost piece who has created his own fake news business out of a parody website here.

Rushkoff recommends three strategies for fighting back: attacking bad memes with good ones, insulating people from dangerous memes via digital filters and the equivalent of AV software, and better education about the underlying issues. None of these are simple.

This morning the news was about how LinkedIn harvested 18M email addresses to target ads recruiting people to join its social network. What is chilling about this is that all of these email addresses belonged to non-members and were collected, of course, without their permission.

You can go to the EFF link above, where you can download a PDF copy, or go to McSweeney’s and buy the hardcover book. Definitely worth reading.

Book review: You’ll see this message when it is too late

A new book from Professor Josephine Wolff at the Rochester Institute of Technology called You’ll see this message when it is too late is worth reading. While there are plenty of other infosec books on the market, to my knowledge this is the first systematic analysis of different data breaches over the past decade.

She reviews a total of nine major data breaches of the recent past and classifies them into three different categories based on the hackers’ motivations: those that happened for financial gain (TJ Maxx, the South Carolina Department of Revenue and various ransomware attacks), for cyberespionage (DigiNotar and US OPM), and for online humiliation (Sony and Ashley Madison). She takes us behind the scenes of how the breaches were discovered, what mistakes were made and what could have been done to mitigate the situation.

A lot has already been written on these breaches, but what sets Wolff’s book apart is that she isn’t trying to assign blame but to dive into their root causes and link together the various IT and corporate policy failures that led to the actual breach.

There is also a lot of discussion about how management is often wrong about these root causes or the path towards mitigation after the breach is discovered. For example, then-South Carolina governor Nikki Haley insisted that if only the IRS had told the state to encrypt its tax data, that data would have been safe. Wolff then describes what the FBI had to do to fight the Zeus botnet, whose authors registered thousands of domain names in advance of each campaign, generating new ones for each attack. The FBI ended up working with security researchers to figure out the botnet’s algorithms and shut down the domains before they could be used by the attackers. This was back in 2012, when such partnerships between government and private enterprise were rare. This kind of collaboration also happened in 2014 when Sony was hacked.
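For readers curious why working out the botnet’s domain-generation algorithm mattered so much, here is a minimal Python sketch. It is not the Zeus algorithm, which this review does not describe; the seed string, the SHA-256 hashing scheme, the `.example` suffix and the function names `candidate_domains` and `upcoming_blocklist` are all assumptions made up for illustration. The point is only that when domain names are derived deterministically from a shared secret and the date, anyone who recovers the recipe can compute tomorrow’s names today.

```python
import hashlib
from datetime import date, timedelta


def candidate_domains(seed: str, day: date, count: int = 5,
                      tld: str = ".example") -> list[str]:
    """Derive a deterministic list of pseudo-random domain names for one day.

    Hypothetical scheme: hash the seed, the date and a counter, then map
    the first 12 hex digits of the digest onto the letters a-p.
    """
    domains = []
    for i in range(count):
        material = f"{seed}|{day.isoformat()}|{i}".encode()
        digest = hashlib.sha256(material).hexdigest()
        label = "".join(chr(ord("a") + int(c, 16)) for c in digest[:12])
        domains.append(label + tld)
    return domains


def upcoming_blocklist(seed: str, start: date, days: int) -> set[str]:
    """Precompute every name the scheme would produce over the next `days` days."""
    names = set()
    for offset in range(days):
        names.update(candidate_domains(seed, start + timedelta(days=offset)))
    return names


if __name__ == "__main__":
    # A defender who has recovered the seed can list future domains in advance.
    for name in sorted(upcoming_blocklist("demo-seed", date(2012, 3, 1), days=2)):
        print(name)
```

Because the output depends only on the seed and the date, the attacker and the defender compute the same list, which is what lets the defender register or block the names first.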

Another example of management security hubris can be found with the Ashley Madison breach, where its managers touted how secure its data was and how your profiles could be deleted with confidence — both promises were far from the truth as we all later found out.

The significance of some of these attacks wasn’t appreciated until much later. For example, the attack on the Dutch certificate authority DigiNotar eventually led to its bankruptcy. But more importantly, it demonstrated that a small security flaw could have global implications, undermining overall trust in the Internet and compromising hundreds of thousands of Iranian email accounts. To this day, most Internet users still don’t understand the significance of how these certificates are created and vetted.

Wolff mentions that “finding a way into a computer system to steal data is comparatively easy. Finding a way to monetize that data can be much harder.” Yes, mistakes were made by the breached parties she covers in this book. “But there were also potential defenders who could have stepped in to detect or stop certain stages of these breaches.” This makes the blame game more complex, and shows that we must consider the entire ecosystem and understand where the weak points lie.

Yes, TJ Maxx could have provided stronger encryption for its wireless networks; South Carolina DoR could have used MFA; DigiNotar could have segmented its network more effectively and set up better intrusion prevention policies; Sony could have been tracking exported data from its network; OPM could have encrypted its personnel files; Ashley Madison could have done a better job of protecting its database and login credentials. But nonetheless, it is still difficult to define who was really responsible for these various breaches.

For corporate security and IT managers, this book should be required reading.

Book review: The Selfie Generation

Alicia Eler once worked for me as a reporter, so count me as a big fan of her writing. Her first book, called The Selfie Generation, shows why she is great at defining the cultural phenomenon of the selfie. As someone who has taken thousands of selfies, she is an expert on the genre. Early on in the book she says that anyone can create their own brand just by posting selfies, and the selfie has brought together both the consumer and his or her social identity. The idea is that we can shape our own narratives based on how we want to be seen by others.

Do selfies encourage antisocial behavior? Perhaps, but the best photographers aren’t necessarily social beings. She captures the ethos from selfie photographers she has known around the world, such as @Wrongeye, Mark Tilsen and Desiree Salomone, who asks, “Is it an act of self-compassion to censor your expression in the present in favor of preserving your emotional stability in the future?”

Are teens taking selfies an example of the downfall of society? No, as Eler says, “teens were doing a lot of the same things back then, but without the help of social media to document it all.”

She contrasts selfies with the Facebook Memories feature, which automatically documents your past, whether you want to remember those moments or not. She recommends that Facebook make this feature optional, for the sake of those memories that we would rather forget.

Eler says, “Nowadays, to not tell one’s own life story through pictures on social media seems not only old-fashioned, but almost questionable—as if to say ‘yes, I do have something to hide,’ or that one is paranoid about being seen or discoverable online.”

Eler mentions several forms of selfies-as-art. For example, there is the Yolocaust project, which shames visitors who take selfies at the various Holocaust memorials around the world and tries to make them understand the larger context. And there is the “killfie,” where someone dies, inadvertently or otherwise, while taking a picture.

This is an important book, and I am glad I had an opportunity to work with her early in her career.