I gave this keynote at the StampedeCon conference in St. Louis in August, and it was well received. If you are interested in having me talk about the many use cases for Big Data at your next event, let me know.

SlashBI: B.I. and Big Data Can Play Together Nicely

Integrating a Big Data project with a traditional B.I. shop can take a lot of work, but a few tips can make the process easier. Here are some suggestions from last week's Hadoop Summit conference, many of them from Abe Taha, vice president of engineering at KarmaSphere.

A second article on Slashdot about best practices for Hadoop in enterprise deployments can be found here. There are lots of efforts underway to make Hadoop more suitable for large-scale business deployments, including the addition of integral elements such as high availability, referential integrity, failover, and the like. My story goes into some of the details, including the ability to deploy the MapR version on Amazon's Web Services.

Tell your children to learn Hadoop

I spent some time last week with several vendors and users of Hadoop, the formless data repository that is the current favorite of many dot coms and the darling of the data nerds. It was instructive. Moms and Dads, tell your kids to start learning this technology now. The younger the better.

I still know relatively little about the Hadoop ecosystem, but it is a big tent and getting bigger. To grok it, you have to cast aside several long-held tech assumptions. First, that you know what you are looking for when you build your databases: Hadoop encourages pack rats to store every log entry, every Tweet, every Web transaction, and other Internet flotsam and jetsam. The hope is that one day some user will come along with a question that can't be answered any other way than by combing through this morass. Who needs to spend months on requirements documents and data dictionaries when we can just shovel our data onto a hard drive somewhere? Turns out, a lot of folks.

Think of Hadoop as the ultimate in agile software development: we don’t even know what we are developing at the start of the project, just that we are going to find that proverbial needle in all those zettabytes.

Hadoop also casts aside the notion that we in IT have even the slightest smidgen of control over our "mission critical" infrastructure. It also casts aside the notions that we turn to open source code only once a product class has become a commodity that can support a rich collection of developers; that we need solid n.1 versions after the n.0 release has been debugged and straightened out; and that those versions are offered by largish vendors who have inked deals with thousands of customers.

No, no, no and no. The IT crowd isn't necessarily leading the Hadooping of our networks. Departmental analysts can get their own datasets up and running, although you really need skilled folks who have a handle on the dozen or so helper technologies to make Hadoop truly useful. And Hadoop is anything but a commodity: there are at least eight different distributions with varying degrees of support and add-ons, including ones from its originators at Yahoo. And the current version? Try something like 0.2. Maybe this is an artifact of the open source movement, which loves those decimal points in its release versions. Another company released its 1.0 version last week, and it has been at it for several years.

And customers? Some of the major Hadoop purveyors have dozens, in some cases close to triple digits. Not exactly impressive, until you run down the list. Yahoo (which began the whole shebang as a way to help its now forlorn search engine) has the largest Hadoop cluster around at more than 42,000 nodes. And I met someone else who has a mere 30-node cluster: he was confident by this time next year he would be storing a petabyte on several hundred nodes. That’s a thousand terabytes, for those that aren’t used to thinking of that part of the metric system. Netflix already has a petabyte of data on their Hadoop cluster, which they run on Amazon’s Web Services. And Twitter, Facebook, eBay and other titans and dot com darlings have similarly large Hadoop installations.
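To put those petabyte numbers in perspective, here is a quick back-of-the-envelope sketch in Python. It assumes a hypothetical 300-node cluster standing in for "several hundred nodes," decimal units (1 PB = 1,000 TB), and HDFS's default replication factor of 3, meaning every block is stored three times. Real clusters vary, so treat this as rough arithmetic rather than a sizing guide.

```python
# Back-of-the-envelope Hadoop sizing. The node count is a hypothetical stand-in
# for "several hundred nodes"; replication defaults to HDFS's standard factor of 3.
TB_PER_PB = 1000  # decimal (SI) units: 1 PB = 1,000 TB


def raw_tb_per_node(logical_pb: float, nodes: int, replication: int = 3) -> float:
    """Raw disk (TB) each node must hold to store `logical_pb` of data
    with `replication` copies spread evenly across `nodes` machines."""
    logical_tb = logical_pb * TB_PER_PB
    return logical_tb * replication / nodes


if __name__ == "__main__":
    nodes = 300  # hypothetical cluster size
    print(f"{raw_tb_per_node(1, nodes):.1f} TB of raw disk per node")
```

Under those assumptions, each of 300 nodes would need about 10 TB of raw disk, while a 30-node cluster would need about 100 TB per node to hold the same petabyte, which helps explain why growing to several hundred nodes makes sense.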

Three years ago I would have told you to teach your kids WordPress, but that seems passé, even quaint now. Now even grade schoolers can set up their own blogs and websites without knowing much code at all, and those who are sufficiently motivated can learn Perl and PHP online. But Hadoop clearly has captured the zeitgeist, or at least a lot of our data, and it is poised to gather more of it as time goes on. Lots of firms are hiring too, and the demand is only growing. (James Kobielus, now with IBM, goes into more detail here.)

Cloudera has some great resources to get you started even if you know nothing about Hadoop: they claim 12,000 people have watched or participated in their training sessions. You can start your engines here.

ITworld: NoSQL: Breaking free of structured data

As companies use the Web to build new applications, and as the amount of data generated by them increases, they are reaching the limits of traditional relational databases. A set of alternatives, grouped under the umbrella label NoSQL (for "not only SQL"), has become more popular, and a number of notable adopters, including social networking giants Facebook and Twitter, are leading the way in this arena.

You can read my article over at ITworld here.

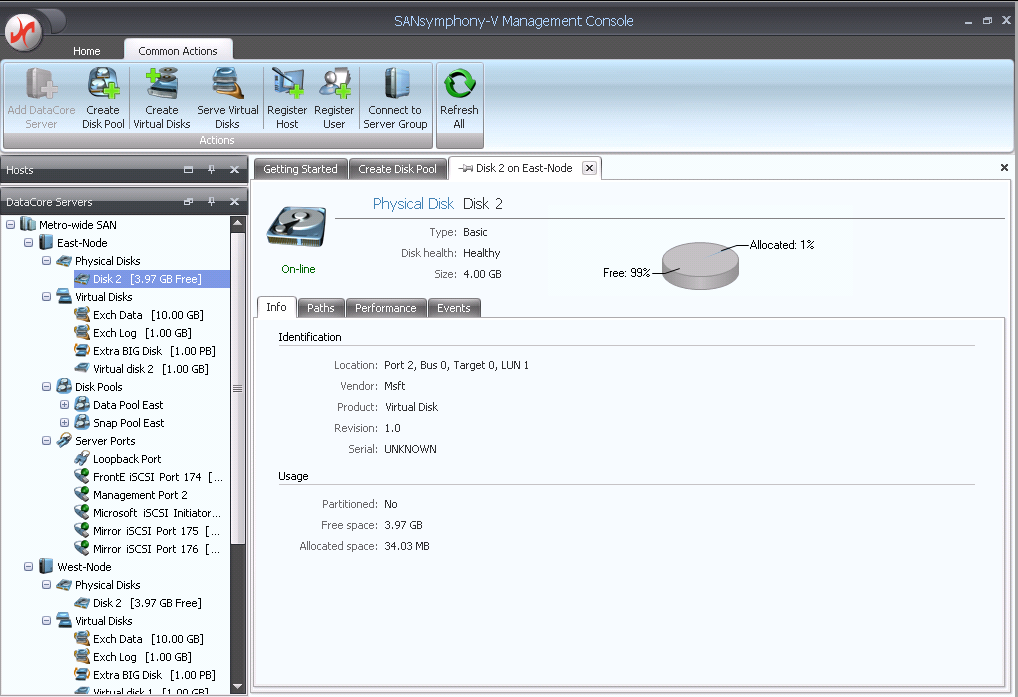

Using DataCore SANsymphony-V to manage your virtual storage

DataCore's latest version of its storage networking management tool solves the biggest problem stalling server and desktop virtualization projects: managing their storage. It provides a powerful graphical mechanism to set up storage pools and provide multipath and continuous data protection for a wide variety of SANs.

Pricing: DataCore-authorized solution providers offer packages starting under $10K for a two-node, high-availability environment.

Requirements: Windows Server 2008 R2

Management console runs on Windows desktop versions from XP SP3 to Windows 7.

You can watch my three-minute screencast video review here.

Strange Loop Conference, St. Louis

I attended a few sessions of this conference here in St. Louis, organized by Alex Miller. Miller has the uncanny ability to find the geekiest person at major organizations and convince them to come to town and talk about some of the really big issues they are dealing with in their code. The conference started off with Hilary Mason, a computer scientist and mathematician working for bit.ly. Did you know that you can get all sorts of analytics for any shortened bit.ly URL just by appending a plus sign to the end of it? Yup. She spoke about machine learning, and about understanding and predicting behavior from large data set collections. For example, during the World Cup, they observed all sorts of traffic coming from the countries that were competing while their games were on. As soon as a game was over, the losing country's traffic dropped to nothing. Obvious, but interesting. She also gave one of the best illustrations of Bayesian probability analysis that I have seen this side of grad school (and that has been a very long time for me).

I got to hear from Eben Hewitt, who wrote the O'Reilly book on Cassandra, an open source database project that is part of Apache and the current favorite of the large data set folks. He spoke about the really big data guys and how we now have to talk in petabytes: Walmart's customer database is half a PB, and Google processes 24 PB each day. The data that was assembled to make the movie Avatar was around a PB.

Finally, there was Brian Sletten, an independent consultant based in LA, talking about new Web technologies. He mentioned the Powerhouse Museum in Sydney, which is doing some interesting things with Web services. Now how cool is that? I can feed my museum addiction by going to a geeky conference.

You should put this on your radar for next year. This is very high signal, almost no noise. Some of the speakers could use some polishing, but the raw data is excellent.

Baseline Strominator column: Data center consolidation

I have to come clean: I am a data center geek.

I love visiting them, and talking to the people who design and run them. Maybe it was because I worked around mainframes at the beginning of my IT career. Maybe it is all that power coursing through all those wires, and those big, greasy generators. Or the thrill of getting access to the inner sanctum of IT after passing through various security checkpoints and ‘man traps.’

There is just something about a hyper-cooled raised floor that gets me excited. Okay, enough of that. But there are some interesting things happening in data centers, including companies that are trying to downsize them to save power and money.

My next column for Baseline is posted today, and talks about what Sun did to consolidate its data centers.