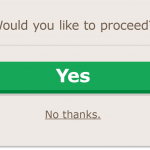

Are you familiar with the term dark patterns? You probably are if you do any online shopping. The term has been in use in the UX world for a decade and refers to a design choice that makes a user decide on something that they might not have otherwise chosen, such as adding a product to a shopping cart that wasn’t selected, running a deal countdown clock, or warning that a product you are thinking about buying is running low in inventory. These practices are also called nudging, where the website designer presents the preferred answer in a larger font or with bigger icons, as in the image below.

Last fall a group of academic researchers found more than 1,800 instances of dark pattern usage on 1,254 websites, which likely represents a low estimate. Many of these websites had pretty deceptive practices.

Dark patterns are just the latest salvo in the fight to keep our privacy private. An article posted over the weekend in the New York Times documents the decline of this notion. “We have imagined that we can choose our degree of privacy with an individual calculation in which a bit of personal information is traded for valued services — a reasonable quid pro quo,” writes the author, Shoshana Zuboff. “We thought that we search Google, but now we understand that Google searches us. We assumed that we use social media to connect, but we learned that connection is how social media uses us.” Our digital privacy is now very much a publicly traded service. Zuboff’s study of this erosion of privacy is just in time to honor this year’s Data Privacy Day. She mentions a series of examples, such as Delta Air Lines’ use of facial recognition software at several airports to shave seconds off passenger boarding times, with almost everyone opting in with nary a complaint.

The other news item in time for DPD is the UK’s Information Commissioner’s Office (ICO) recent publication of a series of design guidelines called Age Appropriate Design Code to help children’s privacy and online safety. Let’s discuss what they are trying to do, what some of the issues are with enforcing their guidelines, and what this all has to do with dark patterns.

The ICO rules haven’t yet been adopted by Parliament – that is several months away if all goes well, and longer if it turns into another Brexit debacle. And that is the crux of the problem: “Companies are notoriously bad at self-regulating these things. Guidance is great, but if it’s not mandatory, it doesn’t mean much,” says my go-to UX expert, Danielle Cooley. And TechCrunch likens this to the ICO saying, “Are you feeling lucky data punk? How comprehensive the touted ‘child protections’ will end up being remains to be seen.”

Cooley gives the ICO props for moving things along – which is more than we can say for any US-based organization. “It is a step in the right direction and a good starting point for other government entities, much like GDPR was for motivating California to pass their own privacy legislation. However, there is really no way to enforce much of this and there are multiple ways around it too.” She gives the example of American alcohol manufacturers that prohibit people under 21 from entering their websites. Can they really stop a minor from clicking through the age screen? Not really. “At least, the ICO addresses dark patterns and nudging.” One point Cooley makes is that proving the opposite of dark patterns is a lot harder to do, and few analysts have done any research.

The ICO has specific examples of nudging, which “could encourage children to provide more personal data than they would otherwise volunteer.” There are 15 categories of guidelines in total, covering topics such as transparency, default settings, data sharing, and parental controls. The rules are for all children under the age of 18, which is a wider scope than existing UK and US data protection laws that generally stop at age 13. The code is extensive and mostly well thought out, and it applies to a wide collection of online services, including gaming, social media platforms and streaming services as well as ecommerce sites.

Another plus for the ICO rules is that they adopt a risk-based approach to ensure that they are effectively applied. However, while that sounds good in theory, it might prove difficult in practice. For example, let’s say you want to verify the age of a website visitor. Do you have one version of your site for really young kids and another for teens? Exactly how can you implement this? I don’t know either.

Naturally, the tech industry is not happy with this effort, saying the rules are too onerous, vague and broad. Like GDPR, they apply to every online business, regardless of whether it is based in the UK or elsewhere. The industry reps do have something of a point. What is interesting about the ICO rules is how they place the best interests of children above the bottom lines of the tech vendors and site operators. That is going to be hard to pull off, even if the rules are passed into law and backed by the threat of fines (four percent of total annual worldwide revenue).

The UK tech policy expert Heather Burns wrote an extensive critique of the ICO draft rules last summer (while there have been some changes with the final draft, most of her issues remain relevant), calling it “one of the worst proposals on internet legislation I’ve ever seen.” The draft proposed a catch-22 situation: to find out if kids are accessing a service, administrators would be required to collect personally identifiable data about all users and site usage, precisely the sort of thing that the ICO, as a privacy regulator, should be dissuading companies from doing. Another issue is that the ICO rules, which were written to target U.S. social media giants, could be onerous for UK domestic startups and SMEs, at a time when many are considering their post-Brexit options. “If the goal of the draft code is to trigger an exodus of tech businesses and investment, it will succeed,” she writes. Additionally, no economic impact assessment of the proposals, as is required for UK legislation, was conducted.

Her section-by-section analysis is well worth studying. For example, she wrote that the draft proposal associated “the use of location data with abduction, physical and mental abuse, sexual abuse and trafficking. This hysteria could lead to young adults being infantilised under rules prepared for toddlers; rules which could, for example, ban them from being able to use a car share app to get home because it uses geolocation data.”

Only time will tell whether the ICO rules help or hurt. And in the meantime, think about how you can do something today that will help your overall data privacy for the rest of the year. Ideally, you should celebrate your data privacy 24/7. Instead, we seem to note its diminishment, year after year.

And you read this in the Gray Lady yesterday?

https://www.nytimes.com/interactive/2019/12/19/opinion/location-tracking-cell-phone.html