Last week Microsoft announced its cloud computing effort, called Azure. Fitting in between the current offerings from Google and Amazon, it represents a very big step towards moving applications off the desktop and out of a corporation’s own datacenters. Whether it will gain any traction with corporate IT developers remains to be seen. Think of Microsoft as bringing more of a Wild West feel to the whole arena of cloud computing.

How to distinguish the players? If we think back to the late 1880s, Amazon provides the land grants and raw Linux and Windows acreage to build your applications upon. Google’s General Goods Store will stock and give away all the APIs that a programmer could ever use. And some of the scrappy prospectors that come to build the new towns are from Microsoft. Ballmer as Billy the Kid, anyone?

Enough of the metaphors. Mary Jo Foley’s excellent explanation of the different bits and pieces of Azure is worth reading. But the first instance of Azure is long on vision and short on actual implementation: Microsoft calls it a “community technology preview”, which the rest of us would call an “alpha” version, given how long it takes them to actually get things nailed down and working properly (version 3 is usually where most of us start to think of their code as solid). Granted, Google labels many of its applications as beta when they are in much better shape – I mean, Gmail has been in beta about 17 years now.

What are some of the issues to consider before jumping on the Microsoft train? First, consider what your .Net skill set is and whether your programmers are going to be using JScript or something else for the majority of their coding work. The good news is that Azure will work with .Net apps right from the start. (Support for SOAP, REST, and AJAX will be coming, they promise.)

Microsoft spoke about testing and debugging these apps on your local desktop, just as you do now, and then deploying them in their cloud. The bad news is that you probably have to re-write a good portion of these apps so that the user interface and data extraction logic can work across low-bandwidth connections.

Chief Software Architect Ray Ozzie, in a CNET interview, says that “fundamentally the application pattern does have to change. Most applications will not run that way out of the box [on Azure].” While good programming practice today is to separate Web page content from formatting instructions, most programmers assume they are running everything on the same box. Remember how miserable LAN apps were back in the days of Token Ring? We have 10 gig Ethernet now, and people have gotten sloppy.

This is no small issue, and my prediction is that most apps will need some major surgery before they can be cloud-worthy. One wag has already placed his bets: Stephen Arnold writes in his blog Beyond Search, “I remain baffled about SharePoint search running from Azure. In my experience, performance is a challenge when SharePoint runs locally, has resources, and has been tuned to the content. A generic SharePoint running from the cloud seems like an invitation to speeds similar to my Hayes 9600 baud dial up modem.” For those of you who are too young to remember, that means verrrrry slow.

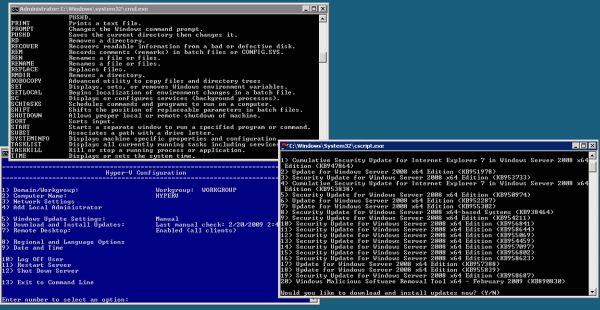

While you are boning up on .Net, you might also want to get more familiar with Windows Server and SQL Server 2008, because many of the same technologies will be used for Azure. One thing that apparently won’t be in Azure is Hyper-V: a different hypervisor will run the Azure VMs. Too bad, I was just getting comfortable with Hyper-V myself. Nobody said this was gonna be easy.

Speaking of servers, Microsoft is in the midst of a major land grab of its own, building out data centers in multiple cities and beefing up the ones it already has.

More good news is that they plan on using Azure to run their own hosted applications, and are in the middle of moving them over to the platform (so far, only Live Mesh is there). Right now, all Azure apps will run in Microsoft’s Quincy, Wash. data center, 150 miles east of Redmond, but you can bet this will change as more people use the service. At least Microsoft tells you this. Amazon treats the number and location of its S3 and EC2 data centers as a state secret.

Of course, the big attraction of cloud computing is scalability, and Ozzie, in the same CNET interview, had this to say about it: “Every company right now runs their own Web site and they’re always afraid of what might happen if it becomes too popular. This gives kind of an overdraft protection for the people who run their Web sites.” I like that. But given the number of outages experienced by Amazon and Google over the past year, what happens when we have bank failures in Dodge?

Second, consider where you want to homestead your apps. Just because you want to make use of the Microsoft services doesn’t mean your apps have to reside on their servers. If you are happy with all the Google Goods, stay with that. If you like someone else’s scripting language or tool set, likewise.

What Microsoft is trying to do is manage the entire cloud development lifecycle, similar to how they already manage local app development with Visual Studio and the .Net tools. Yes, Amazon will let you build whatever virtual machine you wish in your little piece of cloud real estate, but Microsoft will try to work out the provisioning and set up the various services ahead of time.

Next, the real trouble with all of this cloud computing is how any cloud-based app is going to play with your existing enterprise data structures that aren’t in nice SQL databases, and may even be scattered around the landscape in a bunch of spreadsheets or text files. SnapLogic (and Microsoft’s own Popfly) has tools to mix and mash up the data, but figuring out the provenance of your data is not taught in traditional CompSci classes and is largely unheard of around most IT shops, too. Do you need a DBA for your cloud assets? It is 10 pm: do you know what your Widgets are doing with your data?

Next, pricing isn’t set, although for the time being, Azure is free for all. If we look at what Amazon charges for the kind of real estate that Microsoft is offering (2,000 VM hours, 50 GB of storage, and 600 GB/month of bandwidth), that works out to about $400 a month. Think about that for a moment: $400 a month can buy you a pretty high end dedicated Linux or Windows server from any of a number of hosting providers, and then you don’t have to worry about bandwidth and other charges. And there are many others, like Slicehost here in St. Louis, who can sell you a VM in their data center for a lot less, too.
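
If you want to run this kind of back-of-the-envelope math against your own workload, here is a minimal Python sketch. The usage numbers are the ones quoted above. The per-unit rates, and the names `ASSUMED_RATES` and `estimate_monthly_cost`, are my own illustrative assumptions and not figures taken from Amazon’s price list, so plug in whatever your provider actually charges.

```python
from decimal import Decimal

# Hypothetical per-unit rates in US dollars. These are illustrative
# assumptions, NOT figures quoted from Amazon's (or anyone's) price list.
ASSUMED_RATES = {
    "vm_hour": Decimal("0.125"),          # per VM hour
    "storage_gb_month": Decimal("0.15"),  # per GB stored per month
    "transfer_gb": Decimal("0.17"),       # per GB of bandwidth
}


def estimate_monthly_cost(vm_hours, storage_gb, transfer_gb, rates=ASSUMED_RATES):
    """Return a per-item breakdown and the total monthly cost in dollars."""
    breakdown = {
        "compute": Decimal(vm_hours) * rates["vm_hour"],
        "storage": Decimal(storage_gb) * rates["storage_gb_month"],
        "bandwidth": Decimal(transfer_gb) * rates["transfer_gb"],
    }
    # The right-hand side is evaluated before "total" is added to the dict.
    breakdown["total"] = sum(breakdown.values(), Decimal("0"))
    return breakdown


if __name__ == "__main__":
    # Usage figures from the Azure preview allotment described above.
    bill = estimate_monthly_cost(vm_hours=2000, storage_gb=50, transfer_gb=600)
    for item, dollars in bill.items():
        print(f"{item:>10}: ${dollars:,.2f}")
```

With these assumed rates the total lands at $359.50, in the same neighborhood as the $400 estimate, and the VM hours account for most of it. Storage is close to a rounding error.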

However, Amazon’s S3 storage repository is amazingly cheap, and getting cheaper as of this week: in fact, they are now charging less per GB the more you store with them. Microsoft should set tiered, fixed monthly pricing and make the storage component free. I am thinking a basic account is free, and then $100 a month would be just about right for the next level. Look at how Office Live Small Business sells its hosting services for an indication of what to expect.

Finally, take a moment to do a browser inventory as best you can. You’ll find that you are supporting way too many different versions from different vendors, and getting people to switch just because the IT sheriff says so is probably impossible. If you are going to enter the brave new world of cloud computing, this is yet another indication of where the Wild West will begin for you.