A new malware method has been identified by cybersecurity researchers. While it hasn’t yet been widely used, it is causing some concern. Ironically, it has been named Havoc.

A new malware method has been identified by cybersecurity researchers. While it hasn’t yet been widely used, it is causing some concern. Ironically, it has been named Havoc.

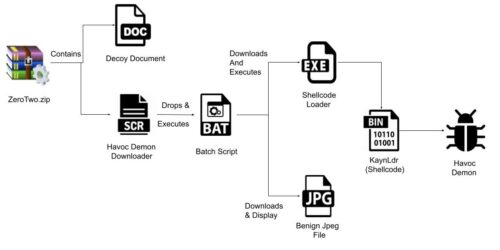

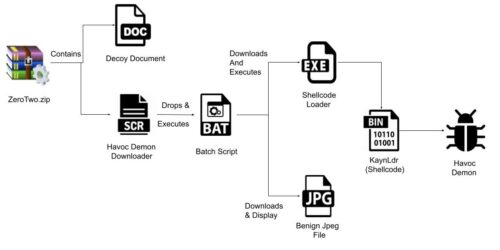

Why worry about it if it is a niche case? Because of its sophistication of methods and the collection of tools and techniques (shown in the diagram above from ZScaler) that it used. It doesn’t bode well for the digital world. Right now it has been observed targeting government networks.

Havoc is a command and control (C2) framework, meaning that it is used to control the progress of an attack. There are several C2 frameworks that are used by bad actors, including Manjusaka, Covenant, Merlin, Empire and the commercial Cobalt Strike (this last one is used by both attackers and red team researchers). Havoc is able to bypass the most current version of Windows 11 Defender (at least until Microsoft figures out the problem, then releases a patch, then gets us to install it). It is also able to employ various evasion and obfuscation techniques.

One reason for concern is how it works. Researchers at Reversing Labs “do not believe it poses any risk to development organizations at this point. However, its discovery underscores the growing risk of malicious packages lurking in open source repositories like npm, PyPi and GitHub.” Translated into English, this means that Havoc could become the basis of future software supply chain attacks.

In addition, the malware disables the Event Tracing for Windows (ETW) process. This is used to log various events, so is another way for the malware to hide its presence. This process can be turned on or off as needed for debugging operations, so this action by itself isn’t suspicious.

One of the common techniques is for the malware to go to sleep once it reaches a potential target PC. This makes it harder to detect, because defender teams can perhaps track when some malware entered their system but don’t necessarily find when it wakes up with further work. Another obfuscation technique is to hide or otherwise encrypt its source code. For proprietary applications, this is to be expected, but for open-source apps the underlying code should be easily viewable. However, this last technique is bare bones, according to the researchers, and easily found. The open source packages that were initially infected with Havoc have been subsequently cleansed (at least for now). Still, it is an appropriate warning for software devops groups to remain vigilant and to be on the lookout for supply chain irregularities.

One way this is being done is called static code analysis, where your code in question is run through various parsing algorithms to check for errors. What is new is using ChatGPT-like products to do the analysis for you and here is one paper that shows how it was used to find code defects. While the AI caught 85 vulnerabilities in 129 sample files (what the author said was “shockingly good”), it isn’t perfect and is more a complement to human code review and traditional code analysis tools.

A new malware method has been identified by cybersecurity researchers. While it hasn’t yet been widely used, it is causing some concern. Ironically, it has been named Havoc.

A new malware method has been identified by cybersecurity researchers. While it hasn’t yet been widely used, it is causing some concern. Ironically, it has been named Havoc. When we first thought about the plausible future of a real Skynet, many of us assumed it would take the form of a mainframe or room-sized computer that would be firing death rays to eliminate us puny humans. But now the concept has taken a much more insidious form as — a chatbot?

When we first thought about the plausible future of a real Skynet, many of us assumed it would take the form of a mainframe or room-sized computer that would be firing death rays to eliminate us puny humans. But now the concept has taken a much more insidious form as — a chatbot? The

The