The virtualization market is moving fast right now. New players are coming to market, acquisitions are plentiful, and boatloads of announcements are expected next week at VMware’s annual VMworld show in San Francisco. Recent examples include new partnerships between Infineta and SunGard and between Savvis and Trend Micro. Over the past year, lots of new products and services have appeared that make virtualization easier to manage in both the largest and the smallest deployments. And as virtualization becomes ubiquitous, even IT managers who haven’t yet implemented it in their data centers should be paying attention.

Because the market is evolving so rapidly, this piece looks at some of the key issues, the leading vendors, and the obstacles to deployment that remain.

Expect better desktop virtual machine (VM) integration from both Microsoft and VMware. Setting up a VM on a new desktop used to be a chore, but that is quickly becoming a thing of the past. Microsoft’s Windows 8, scheduled to arrive this fall, comes with a complete hypervisor built in to run VMs (Windows Server has long had one). VMware is working on its WSX browser-based VM player, and a beta became available earlier this summer. The two companies take different approaches, to be sure, but both are making it easier to run VMs anywhere, which benefits more casual users and also lets test and development teams bring up VMs quickly.

It is time to seriously consider Hyper-V. Windows 8 is one more reason to take another look at it. Microsoft has beefed up the Cloud and Datacenter Management features in System Center 2012 to make Hyper-V more manageable and scalable, and it claims its tests show Hyper-V can support a greater density of VMs than VMware. That may or may not hold for your particular installation, but Microsoft’s hypervisor is clearly maturing.

If these claims have you thinking about cutting your virtualization costs with Hyper-V, consider that another horse in this race has come from behind in the past year: KVM, the Linux-based open-source hypervisor. The project has picked up steam and is now offered by hosting providers including CloudSigma and Contegix as a lower-cost way to run your VMs in the cloud. KVM’s backers include Red Hat, IBM, and Canonical’s Ubuntu. Expect its popularity to keep growing, perhaps at the expense of VMware’s market share.

The year 2012 probably won’t see many more deployments of virtual desktop infrastructure (VDI). VDI isn’t cheap or easy, and planning your bandwidth and storage needs can be vexing, as we noted earlier this spring. Most of these issues remain, but there are signs of progress, particularly for the largest installations. Earlier this summer Atlantis Computing announced its ILIO FlexCloud storage product, which optimizes how virtual desktops use storage to significantly boost their performance. Virtual desktops tend to hold many duplicate copies of the same operating-system and application files, and Atlantis has a nice workaround for this. Will 2013 be the year of VDI? Probably not.
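The article does not describe how ILIO works internally, so the following is only a generic illustration of the underlying idea: content-addressed deduplication, where identical file contents are hashed and stored once no matter how many desktops reference them. The `DedupStore` class, its methods, and the file paths are hypothetical, and a real product would work at the block level with far more care around caching and garbage collection.

```python
import hashlib
from typing import Dict, Tuple


class DedupStore:
    """Hypothetical content-addressed store: identical file contents are kept once."""

    def __init__(self) -> None:
        self._blobs: Dict[str, bytes] = {}            # SHA-256 digest -> contents
        self._index: Dict[Tuple[str, str], str] = {}  # (vm, path) -> digest

    def write(self, vm: str, path: str, data: bytes) -> None:
        digest = hashlib.sha256(data).hexdigest()
        self._blobs.setdefault(digest, data)  # store the bytes only the first time
        self._index[(vm, path)] = digest

    def read(self, vm: str, path: str) -> bytes:
        return self._blobs[self._index[(vm, path)]]

    def logical_bytes(self) -> int:
        """Bytes the desktops believe they are using."""
        return sum(len(self._blobs[d]) for d in self._index.values())

    def physical_bytes(self) -> int:
        """Bytes actually stored (this sketch never frees overwritten blobs)."""
        return sum(len(b) for b in self._blobs.values())


store = DedupStore()
shared_dll = b"\x00" * 1_000_000  # stand-in for an OS file every desktop carries
for vm in ("vdi-01", "vdi-02", "vdi-03"):
    store.write(vm, "/windows/system32/shared.dll", shared_dll)
store.write("vdi-01", "/users/alice/notes.txt", b"hello")
print(store.logical_bytes(), store.physical_bytes())  # 3000005 1000005
```

Three desktops each "see" their own 1 MB copy of the shared file, but only one copy occupies storage.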

The hybrid cloud is blurring the line between platform and infrastructure players as virtualized apps become more complex. Pure IaaS players are acquiring management vendors or introducing platform offerings (see Amazon’s improved support for Hadoop this year), while pure PaaS players are adding infrastructure-level features (Joyent is one example).

Indeed, as Om Malik and Stacey Higginbotham noted last month, even VMware might be getting into the PaaS market with its intention to spin off its Cloud Foundry and associated big-data platforms and tools into a separate business unit.

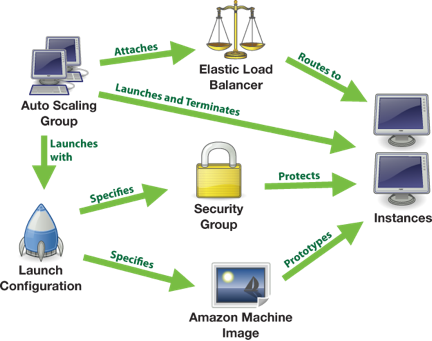

Meanwhile PaaS and IaaS players, along with the major virtualization vendors, are adding orchestration and provisioning tools that let their environments scale up or down as demand changes, without much manual intervention. Amazon, for example, offers its Auto Scaling feature and CloudFormation service, both of which can be used for this purpose. IBM’s SmartCloud has a wealth of offerings here as well.
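To make the idea of scaling without manual intervention concrete, here is a minimal threshold-based policy in Python. It is a hypothetical sketch, not the AWS Auto Scaling or CloudFormation API: `ScalingPolicy`, the CPU thresholds, and the instance bounds are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class ScalingPolicy:
    """Hypothetical threshold policy (not any vendor's API)."""
    min_instances: int = 2
    max_instances: int = 10
    scale_out_cpu: float = 70.0  # add capacity above this average CPU %
    scale_in_cpu: float = 30.0   # remove capacity below this average CPU %
    step: int = 1                # instances added or removed per decision

    def desired_capacity(self, current: int, avg_cpu: float) -> int:
        if avg_cpu > self.scale_out_cpu:
            target = current + self.step
        elif avg_cpu < self.scale_in_cpu:
            target = current - self.step
        else:
            target = current
        # Never go outside the configured bounds.
        return max(self.min_instances, min(self.max_instances, target))


policy = ScalingPolicy()
fleet = 2
for cpu in [85.0, 90.0, 75.0, 50.0, 20.0, 10.0]:
    fleet = policy.desired_capacity(fleet, cpu)
    print(f"avg CPU {cpu:5.1f}% -> {fleet} instances")
```

Real services typically add a cooldown period between decisions so that a brief spike doesn't make the fleet thrash; this sketch omits that for brevity.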

In July VMware bought DynamicOps, a cloud-provisioning-and-management vendor, and Nicira, a software-defined-networking vendor; both purchases were aimed at strengthening provisioning and management. Smaller vendors such as Intigua are entering this space, too. And existing vendors such as AppFog now let you migrate apps across a wide variety of cloud providers, including Amazon Web Services, HP’s Cloud, Microsoft Azure, and Rackspace.

Provisioning isn’t effortless yet, but these developments will make it less painful, and there is hope that it will eventually become part of the built-in hypervisor-management toolset. Expect better provisioning tools and tighter integration between PaaS and IaaS products as more enterprises move toward hybrid-cloud deployments.

Virtualized apps are becoming more of a necessity. More enterprises are looking for ways to quickly deploy multiple versions of apps concurrently across their infrastructure. Five major vendors have products in this arena: Microsoft (App-V), Citrix (XenApp), InstallFree (7bridge), Symantec (Workspace Virtualization), and VMware (ThinApp). All have added significant features in the past year and are getting less painful to install and manage, yet deploying them is still far too complex. Microsoft’s App-V, for example, will offer virtualized copies of Office, but it also comes with Package Accelerators, Sequencer Templates, Packaging Diagnostics, and built-in best practices. That is a lot to learn before virtual apps can be deployed successfully. All of these products need to become easier to use, and we should expect all five vendors to move in that direction in the coming years.

Storage is still the biggest obstacle. Managing storage needs, and making sure storage delivers sufficient performance and reliability, will remain challenges for larger VM deployments. The product category emerging to address this is the storage hypervisor, and it is coming of age, albeit slowly. Besides the Atlantis Computing product mentioned above, both DataCore Software and Virsto are beefing up their offerings to work across multiple hypervisors and to better manage mixed-vendor environments, including support for thin provisioning and deduplication. Expect more vendors to enter this market, and perhaps even traditional hypervisor vendors such as VMware and Citrix (with Xen) to boost their storage offerings.
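Thin provisioning, one of the features mentioned above, means a VM sees a large virtual disk while physical storage is allocated only as blocks are actually written. The Python sketch below shows that idea under stated assumptions: the `ThinVolume` class, the 4 KiB block size, and the in-memory dictionary standing in for physical storage are all hypothetical and do not reflect how DataCore, Virsto, or any other vendor implements it.

```python
from typing import Dict

BLOCK_SIZE = 4096  # bytes per block (an assumption for this sketch)


class ThinVolume:
    """Hypothetical thin disk: advertises a large size, allocates blocks on first write."""

    def __init__(self, virtual_size_bytes: int) -> None:
        self.virtual_size = virtual_size_bytes
        self._blocks: Dict[int, bytearray] = {}  # block number -> data, written blocks only

    def write_block(self, block_no: int, data: bytes) -> None:
        if not 0 <= block_no < self.virtual_size // BLOCK_SIZE:
            raise IndexError("block outside virtual disk")
        if len(data) > BLOCK_SIZE:
            raise ValueError("data larger than one block")
        buf = self._blocks.setdefault(block_no, bytearray(BLOCK_SIZE))  # allocate lazily
        buf[: len(data)] = data

    def read_block(self, block_no: int) -> bytes:
        # Unwritten blocks read back as zeros without consuming any storage.
        return bytes(self._blocks.get(block_no, bytearray(BLOCK_SIZE)))

    def allocated_bytes(self) -> int:
        return len(self._blocks) * BLOCK_SIZE


vol = ThinVolume(100 * 1024**3)  # the VM sees a 100 GiB disk
vol.write_block(0, b"boot sector")
vol.write_block(12345, b"log entry")
print(vol.virtual_size, vol.allocated_bytes())  # 107374182400 8192
```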

Recovering from VM failures is another hurdle. As you virtualize more of your data-center infrastructure, understanding where the failure points are and how to recover from them becomes critical. Knowing which VMs depend on others, and restarting services in the right order, will take careful planning.
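One way to plan restart order is to write down each VM’s dependencies and compute a topological order. The sketch below uses Python’s standard `graphlib` module (Python 3.9 or later) to group VMs into "waves" that can be restarted in parallel once everything they depend on is up. The VM names and the dependency map are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter
from typing import Dict, List, Set

# Hypothetical map: each VM lists the VMs that must be running before it starts.
depends_on: Dict[str, Set[str]] = {
    "web-frontend": {"app-server", "auth"},
    "app-server": {"database", "message-queue"},
    "auth": {"directory"},
    "database": {"storage-gateway"},
    "message-queue": set(),
    "directory": set(),
    "storage-gateway": set(),
}


def restart_waves(deps: Dict[str, Set[str]]) -> List[List[str]]:
    """Group VMs into waves; every VM in a wave depends only on earlier waves."""
    sorter = TopologicalSorter(deps)
    try:
        sorter.prepare()  # detects cycles before anything is restarted
    except CycleError as err:
        raise ValueError(f"circular dependency: {err.args[1]}") from err
    waves: List[List[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # all VMs whose dependencies are up
        waves.append(ready)
        sorter.done(*ready)                 # mark them as started
    return waves


for i, wave in enumerate(restart_waves(depends_on), start=1):
    print(f"wave {i}: {', '.join(wave)}")
```

Failing fast on a circular dependency is deliberate: a cycle means no valid restart order exists, which is better discovered during planning than during an outage.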

One suggestion is to examine what Netflix has done to continually test its large Amazon Web Services infrastructure with what it calls Chaos Monkey. As the company’s Cory Bennett and Ariel Tseitlin write on the Netflix Tech Blog:

“We have found that the best defense against major unexpected failures is to fail often. By frequently causing failures, we force our services to be built in a way that is more resilient.”
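The core of the Chaos Monkey idea is simple: periodically pick a random instance from a redundant group and kill it, so the surrounding services must prove they can cope. The toy Python sketch below only selects victims; it does not call any cloud API, and it is not Netflix’s code. The function name, the weekday-business-hours window, and the rule of skipping groups with a single instance are assumptions made for illustration.

```python
import random
from datetime import datetime
from typing import Dict, List


def pick_victims(groups: Dict[str, List[str]], now: datetime,
                 rng: random.Random, probability: float = 1.0) -> Dict[str, str]:
    """Pick at most one instance per group to terminate (hypothetical sketch).

    Assumptions: failures are injected only on weekdays between 09:00 and 17:00,
    and groups with a single instance are skipped because they have no redundancy.
    """
    if now.weekday() >= 5 or not 9 <= now.hour < 17:
        return {}
    victims: Dict[str, str] = {}
    for group, instances in groups.items():
        if len(instances) > 1 and rng.random() < probability:
            victims[group] = rng.choice(instances)
    return victims


fleet = {"api": ["i-01", "i-02", "i-03"], "cache": ["i-10", "i-11"], "batch": ["i-20"]}
print(pick_victims(fleet, datetime(2012, 8, 22, 10, 30), random.Random(42)))
```

Passing in a seeded `random.Random` makes a run reproducible, which helps when you want to replay a failure drill.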

Expect more of these efforts in the future as more enterprises gain knowledge about managing large-scale VM installations.

Custom corporate data APIs are taking off. It is fascinating to see end-user organizations treat their own data with enough rigor to build custom data-access APIs, particularly since data access has long been the province of programming and platform vendors. Christian Reilly at Bechtel calls this data virtualization. The idea is to first consolidate and then virtualize the data-access APIs, so that your company’s data consumers can get what they need without rebuilding the entire desktop software stack for browser or smartphone access. A well-defined corporate API makes your data more portable (think iPad dashboards) and more actionable.
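A minimal sketch of the consolidate-then-virtualize idea: one entry point hides where each dataset actually lives and hands every client (browser, smartphone, dashboard) the same JSON. The `DataAPI` class, dataset names, and fields are hypothetical; a real corporate API would sit behind an HTTP service with authentication, which is left out here.

```python
import json
from typing import Callable, Dict, List

Record = Dict[str, object]


class DataAPI:
    """Hypothetical single facade in front of several back-end data sources."""

    def __init__(self) -> None:
        self._sources: Dict[str, Callable[[], List[Record]]] = {}

    def register(self, dataset: str, fetch: Callable[[], List[Record]]) -> None:
        # Each back end (ERP, HR system, spreadsheet export, ...) supplies a fetch function.
        self._sources[dataset] = fetch

    def query(self, dataset: str, **filters: object) -> str:
        """Return records matching all filters as a JSON string."""
        if dataset not in self._sources:
            raise KeyError(f"unknown dataset: {dataset}")
        rows = [r for r in self._sources[dataset]()
                if all(r.get(k) == v for k, v in filters.items())]
        return json.dumps(rows, sort_keys=True)


# Usage: two unrelated "legacy systems" exposed through one API.
legacy_projects = [
    {"id": 1, "name": "Pipeline A", "status": "active"},
    {"id": 2, "name": "Refinery B", "status": "closed"},
]
api = DataAPI()
api.register("projects", lambda: legacy_projects)
api.register("sites", lambda: [{"site": "Houston", "staff": 120}])
print(api.query("projects", status="active"))
```

Because clients only ever see `query` and JSON, a back end can be swapped or consolidated without touching the dashboards that consume it.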

Virtual firewalls still lag behind physical ones. The network-protection technologies that are plentiful and commonplace in the physical world are far less mature in the world of VMs. Few attacks that specifically target VMs have been observed in the wild, but that doesn’t mean you shouldn’t protect them. A number of vendors offer ways to secure VMs, and Catbird Networks, Vyatta, and Reflex Technologies are still the market leaders. VMware, for its part, bought Blue Lane Technologies a few years ago and has slowly incorporated that software into its vShield product line. In the coming year, expect more newcomers, such as the still-unproven Bromium, and more acquisitions that mix and match the physical and virtual worlds, like the deal between BeyondTrust and eEye.

The true virtual network is coming. The companion effort to better network protection is software-defined networking (SDN): virtualizing the entire network stack, from switches to routers, and managing it with the same finesse you use to start and stop your VMs.

Until relatively recently, virtual-network infrastructure meant virtualizing one or more network-adapter cards inside your VM. SDN greatly expands this arena, extending automatic provisioning and scaling to your network connections and coordinating them with VM provisioning. The Nicira acquisition sits firmly in this space and makes VMware more open and agile. Oracle has shown interest too, bolstering its network-virtualization portfolio by buying Xsigo last month. The traditional networking heavyweights, Juniper and Cisco, aren’t sitting on their hands, either: Juniper is embracing the open-systems approach with OpenFlow, while Cisco has funded the startup Insieme to build an SDN product of its own. We have also reported on newcomer Contrail Systems in this space.

Expect many more developments, acquisitions, and new product offerings to make the virtual network more usable and integrated into enterprise VM operations.

Pricing will be closely watched. Last summer’s introduction of vSphere 5 gave VMware a huge black eye because it amounted to a major price increase for the largest installations, as we wrote at the time. Microsoft and others will continue to chip away at VMware on price in the near term.

As you can see, there is a lot of turmoil in the virtualization space. VMware will certainly continue to dominate the market, but Microsoft and others are gaining virtual-server share, and startups with new ideas seem to enter the field almost daily. Just as in the physical world, storage, security, and networking will need better solutions to improve VM deployments in the data center overall.

When building Big Data apps, you need to conduct a test run with someone else’s data before you put the software into production. Why? Because using an unfamiliar dataset can help illuminate any flaws in your code, perhaps making it easier to test and perfect your underlying algorithms. To that end, there are a number of public data sources freely available for use. Some of them are infamous, such as the
