A consortium of A-list reporters from 17 major American and European news outlets has begun publishing what it has learned from the documents unearthed by whistleblower Frances Haugen. The trove is a redacted copy of what was given to various legislative and watchdog agencies in the US and UK. The story collection is being cataloged over at Protocol here. I haven’t read everything yet, but here are some salient things that I have learned. Most of this isn’t surprising, given the venality that Zuck & co. have shown over the years.

- Facebook indeed favors profits over human safety and continues to do so. This piece for the AP documents how foreign “maids” are recruited on Instagram to come work in Saudi Arabia, and then traded using various Facebook posts once they are in the country. The article notes that current searches for the Arabic word for maids return numerous hits with pictures, ages, and prices of candidates. For all its bluster about the billions of dollars spent on tracking down these abuses of its terms of service, this shouldn’t be so easy to find if Facebook were really doing a credible job of stamping it out.

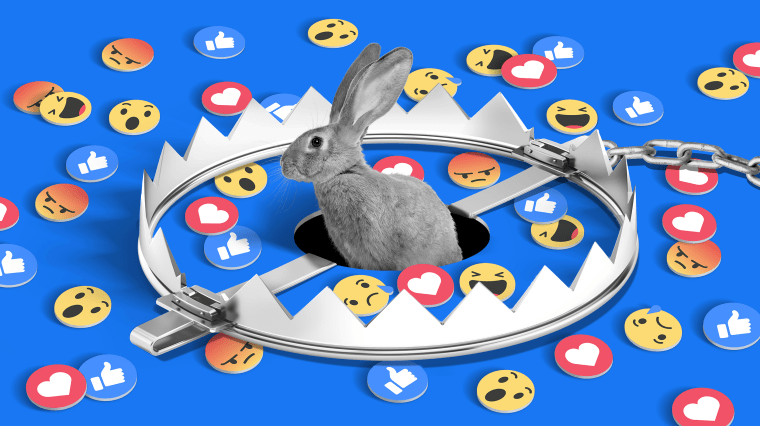

- Facebook has played a key role in radicalizing its users. NBC News writes about how internal research identified thousands of QAnon groups covering 2.2M members and nearly a thousand anti-vax groups with 1.7M members. The research attributes this population to what it calls “gateway groups” that recruited more than half of these members. Again, the fact that the company’s own researchers could track this, and yet the company did little to stop the growth of these efforts, is troubling.

- The same NBC piece talks about a research project using a strawman “Carol Smith” user. Within days of her creation as a conservative-leaning user by Facebook staffers, she was receiving all sorts of pernicious content, including invites to join various QAnon groups and others that clearly violated Facebook’s own disinformation rules. Did they act on this research to prevent this? Not that I could see.

- The “Stop the Steal” movement that led to the January 6 Capitol riot was organized through many of Facebook’s properties, pages and groups. CNN reports that one internal memo stated that the company wasn’t able to recognize the people contributing to these efforts in time to stop them, although subsequent algorithmic changes have been put in place to do so. Some content moderation efforts that were put in place prior to the November 2020 election were quickly reversed afterwards. Had they stayed in place, they could have helped mute some of the organizers of the January 6 riot.

- We might think that Facebook has done a sub-par job vetting American content. But it is far worse elsewhere in the world, as this piece in The Atlantic shows. The data shows that only 13 percent of Facebook’s misinformation-moderation staff hours were devoted to the non-U.S. countries in which it operates, whose populations comprise more than 90 percent of Facebook’s active users. The moderators hired by Facebook aren’t familiar with the local customs, don’t speak the languages, and don’t understand the fragility of the local governments or the stability of their internet connections, all of which means proportionally more resources will be needed to do a credible job.

So how can we fix this? I don’t think government regulation is the answer. Instead, it is time for new leadership and better-designed algorithms that don’t amplify violence and misinformation. Kara Swisher writes in her current NYT column that “Facebook has been tone-deaf and uncaring about the harm that its own research showed its products were doing, despite ensuing pleas from concerned employees.” She is also lobbying for Zuck’s replacement with a leader who can finally listen to, and act on, these issues.

Another path can be found with the parallel universe being set up by former Facebook data scientists and frustrated middle managers called the Integrity Institute. Whether this will work is an open question, but it could be a useful start.

In an era of extreme political polarization, I found the Congressional testimony of Frances Haugen to be refreshing, not only for exposing aberrant behavior at Facebook but also because this issue has the potential for a bipartisan response. Information policy should not be a partisan issue; perhaps it provides an avenue for political cooperation. When Senators Mike Lee and Ed Markey agree on a topic, that’s big news in my book.

I am surprised by your conclusion that government regulation is not the answer. While I can appreciate both the need for responsible corporate leadership and the challenges associated with crafting sensible legislation and regulatory frameworks, I don’t think any of these problems can be resolved by installing more responsible corporate leadership. Government needs to act: Section 230 regulations need to be revised, and the FTC needs to reexamine monopoly in the digital age.

I was intrigued by Haugen’s call for “human scale social media.” It looks like Snap is trying to do this; I’m not sure if there are others.