The hardest part of doing an online poll isn’t the poll itself, but figuring out what you really want to find out. These days there are more low-cost survey services to choose from than ever, and they make the mechanics of polling pretty easy.

Since taking a class in user experience design last year, I have been experimenting with a few different kinds of polls to collect information for my articles. It has been a mixed bag. I tried out LinkedIn Polls and used Google Forms, and both were less than satisfying for different reasons.

And I am not even concerned about valid or representative sampling techniques, even though I do have a dim memory of my statistics classes in graduate school, when we had to do things the old-fashioned, pencil-and-paper, chi-squared way.

A good place to start with surveys is to review the suggestions for survey design from Caroline Jarrett. She has written books on the topic and speaks extensively at user design conferences. She has some great examples of the more common errors in creating surveys, along with tips on what you should do instead. I linked to her in a story that I wrote for Dr. Dobb’s last year.

In the piece I also mention some other tips for doing online surveys, and include links to five survey service providers. They vary in cost from free to $200 a month, depending on which service provider you choose and how many responses you plan on receiving.

In addition to the providers I mention, several years ago LinkedIn added the ability to create polls (polls.linkedin.com). You can send out free polls to your entire network, but if you want to target them to a specific group it will cost you at least $50 per poll. You can use the service if you have a free LinkedIn account too. They haven’t done a very good job of publicizing the polls feature, which is too bad because it is dirt simple to use.

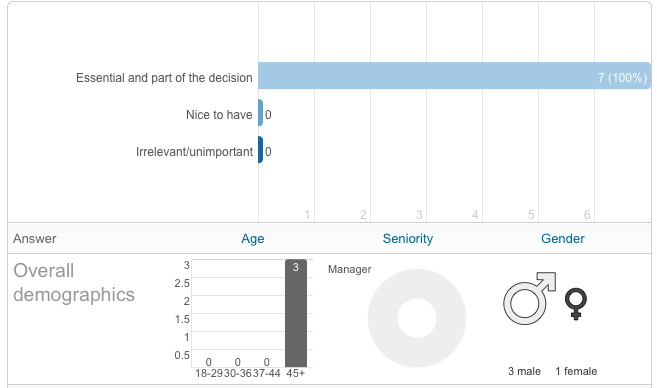

I tried it out and didn’t exactly get much in the way of responses, but the reporting is nice: you get age, gender and job title breakdown, should your respondents choose to allow this information to be reported to you. (You can see the results above.)

LinkedIn Polls isn’t as sophisticated as some of the services mentioned in my article, but it is a great place to start if you are trying to get some quick research done. Another free service that has more flexibility is Google Forms. You assemble your poll questions into a template document and send the link to your peeps. When they reply, their answers are recorded in a Google Spreadsheet, at least in theory. Mine didn’t quite work, and I am not sure why. You get a link to the results, and some nice graphs are produced automatically. It is a bit more complex to use than LinkedIn, but you still don’t need a course in statistics to figure it out (and maybe that is what tripped me up).
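If the responses do land in the spreadsheet, tallying them yourself takes only a few lines. Here is a minimal Python sketch, assuming you have downloaded the sheet as a CSV file. The question text and the sample rows are made up for illustration, and your own column headers will depend on how you worded the form.

```python
import csv
import io
from collections import Counter

# Hypothetical export: made-up rows standing in for a downloaded CSV.
# Real column headers will match the question text on your own form.
SAMPLE_CSV = """Timestamp,Which survey tool do you use?
2013-01-07 09:15:00,SurveyMonkey
2013-01-07 09:42:00,Google Forms
2013-01-07 10:03:00,SurveyMonkey
2013-01-07 11:20:00,
2013-01-07 12:01:00,LinkedIn Polls
"""


def tally(csv_file, question):
    """Count the non-blank answers found in one question column."""
    reader = csv.DictReader(csv_file)
    # Skipped questions come through as empty strings (or None for short rows).
    answers = ((row.get(question) or "").strip() for row in reader)
    return Counter(answer for answer in answers if answer)


counts = tally(io.StringIO(SAMPLE_CSV), "Which survey tool do you use?")
for answer, n in counts.most_common():
    print(f"{answer}: {n}")
```

To run it against a real export, you would pass an opened file instead of the `io.StringIO` stand-in, for example `open("responses.csv", newline="")`, where the filename is whatever you saved the download as.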

For any poll that you create, you’ll probably want to use a scheduling program such as Hootsuite or something similar to periodically remind your peeps about it, or otherwise advertise its existence among your social networks. LinkedIn makes this easy by having its own URL shortener, along with handy buttons to repost the poll to your Facebook and Twitter streams. Google creates a special link to the poll that you can send out via email.

This is a good post. My students at Northwestern also like Qualtrics for surveys. It provides slightly better analysis tools than SurveyMonkey. There are also some interesting techniques, like using Amazon’s Mechanical Turk to recruit a random sample; this can be done quite inexpensively, though there are some tricks to making sure it isn’t garbage in, garbage out. Doing an internet search for “mechanical turk market research” pulls up a number of resources, including research on Turk’s biases. For analytics, I’d like to give kudos to a startup I’ve gotten to know called Statwing (www.statwing.com). Statwing can take your SurveyMonkey results and automagically do some of the things you would do in a conventional program like SPSS or SAS, including generating some nice charts that match standard statistical tests.

Finally, quantitative research for a product should always be coupled (both before and after) with “getting out of the building” (to quote Steve Blank) to get qualitative info on what the target market is doing. I just wrote a post on Forbes (http://blogs.forbes.com/startupviews ) that covers what we use in the NUvention web class at Northwestern and provides a decent roadmap.

Thanks Todd. Will check these out. Some other thoughts from my readers:

1. My favorite tool is Qualaroo (formerly known as KISSinsights).

We used it a lot to gather context-sensitive information on specific pages, and then within a few hours we would take direct action based on user responses (most of the time yielding great thanks and recognition from our user base for being so proactive). Combine this data with a powerful analytics tool like woopra.com and you suddenly have true intelligence to work with data-wise.

2. Dave Crocker writes: Jarrett’s guidance oddly misses some basics:

— Self-report about behavior tends to be inaccurate; surveys are better about attitudes and opinions than reporting previous behavior or actual future behavior.

— Wording matters and can bias the answer; choose neutral language

— Scales can be biased; keep the two ends comparable, with the mid-point being a neutral value. (I think 3 or 5 points is plenty.)
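The point about scales lends itself to a quick check. As a rough sketch, and assuming each answer choice is coded with a number where 0 means neutral (that coding and the sample labels are my own illustration, not something Dave specified), you can test whether a scale is balanced like this:

```python
def is_balanced(scale):
    """Return True if the scale has a neutral midpoint and mirrored ends.

    `scale` is a list of (label, score) pairs ordered from most negative
    to most positive, with 0 standing for the neutral choice.
    """
    scores = [score for _, score in scale]
    if len(scores) % 2 == 0:
        return False  # an even number of points has no middle choice
    mid = len(scores) // 2
    if scores[mid] != 0:
        return False  # the middle choice is not neutral
    # Each negative choice needs an equally strong positive counterpart.
    return all(scores[i] == -scores[-1 - i] for i in range(mid))


# Hypothetical scales for illustration.
five_point = [
    ("Strongly disagree", -2),
    ("Disagree", -1),
    ("Neither agree nor disagree", 0),
    ("Agree", 1),
    ("Strongly agree", 2),
]
lopsided = [
    ("Poor", -1),
    ("Fair", 0),
    ("Good", 1),
    ("Very good", 2),
    ("Excellent", 3),
]

print(is_balanced(five_point))
print(is_balanced(lopsided))
```

The second scale fails because it offers three ways to say something positive and only one way to say something negative, so its middle choice is not neutral.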

Dave has some really good points, but I’ll add to them.

Information gathered may have a very low reliability and/or answer rate if:

1. The people get confused by the questions.

2. A lie makes them look good or feel good about themselves.

3. They give up while taking the poll.

4. Their answers don’t mean a lot to them.

The last one is the most important. I run a soccer team. These guys pay dues to play each season. I send them eVites as to where and when the games are. My response rate from paying/active members is generally 50% per game, if that. I’ll often get the responses so late that I’m unsure whether I can field a team of more than 7 (11 is a full squad with no substitutes). I’ll have 18 say yes, and 11 will show. I’ll have 11 say yes and 16 will show. If the people don’t get something valuable out of participating, your response rate may suck. If they get something valuable whether they respond at all or whether they give invalid answers, your data will suck.

Oh, yeah. Bribery can work, but you might get people who care nothing about the poll itself. Or you can get an outsider who wants to look good skewing your results. I’m often called upon to “vote for” a particular product or vendor as a Value Added Reseller. In some of these cases, you can’t vote against. The most plus votes wins, so it pays to solicit responses.

So, when you poll, consider the value and consider whether anyone might want to “game” the poll.

The best polls? Ones given at the end of a service engagement, with a one-on-one personal Q&A that can go anywhere, with the manager evaluating the service given. OK, I also like entering polls where I can win free stuff and/or get a coupon for a burger sometimes, but I might game them or consider them to be BS.

There are lies, damn lies, statistics, and models.

All great suggestions Tony, thanks!

David