If you want to see the future of BI, look no further than the nearest game development studio. It isn’t all fun and first-person shooters. Game developers are in the vanguard of a variety of advanced IT techniques and are usually ahead of the general IT population in their use of big data, real-time analytics, and cloud computing, among other areas.

We all know that computer games are big business. Last year’s worldwide sales were north of $20 billion, and even the subcategory of social games topped $2 billion. Compare this to around $8 billion for the average annual US movie box office.

In December we looked at how Riot Games is using Hadoop and other NoSQL tools to track its players’ statistics and improve game play, but Riot is just one of many game studios that are taking technology to new heights.

Gamers have been ahead of the curve in three key areas: rapid changes in computing infrastructure, persistent and more personalized data connections from the cloud, and a long history of using graphics processors (GPUs) to support high performance computing. Let’s look at each of these areas in more detail, and at why gamers get them.

Rapid on-demand computing changes

“From an infrastructure perspective, games have a high volume of data points due to user interactions and typically have a unique need for fast response. This makes them very tricky cloud data users,” says Robert Nelson, the CEO of Broken Bulb Studios, a developer of Facebook and mobile games. The company uses SoftLayer for its cloud hosting and has several terabytes of data, with peak transfer rates above 200 Mbps.

“SoftLayer’s platform has a unique combination of scalability and customizability, which supports the dynamic infrastructure of gaming companies. SoftLayer can provision cloud computing instances in minutes, allowing us to rapidly scale up or down as our needs change,” he said. For example, when the company launched a new game last year, 1.4 million players came to its website in a week, up from a few thousand beta users before the launch. Some of its games have required SoftLayer to double their infrastructure overnight because of heavy demand. This kind of variation in demand is the wheelhouse of cloud computing, but games do seem to fluctuate more than traditional IT applications.

Another gaming studio, Hothead Games, launched its Big Win series of sports games last year and saw its server count on the SoftLayer hosting network rise from six to 60. It was all handled with ease. “Our code makes hundreds of millions of database transactions a day. It’s critical to our business that every single one of those works reliably and is super fast,” said Joel DeYoung, director of technology at Hothead Games.

SoftLayer isn’t alone in recognizing this market. Peer 1 Hosting has also worked with some of the world’s largest game developers to deliver their games under wide fluctuations in demand. One launch saw traffic spike so sharply that it required more than a thousand servers, which were automatically provisioned by Peer 1’s managed hosting service. And Joyent has supported Quizlet, one of the largest e-learning games, with its hosting services. Through careful analysis, Quizlet found that it was making too many PHP calls and was able to rewrite its code to speed up its operations. It has scaled up from a few hundred beta users several years ago to more than 60 million page views a month today.

Persistence and personalization

“Gaming is a more interesting target market than traditional B2C spaces,” says Brian Stone of Causata, a customer experience management company. “And online gaming is even more so, since it offers unparalleled opportunities for cross-selling and upselling. You are competing with your friends, constantly checking your play statistics, and very involved with your social network. Compare that to an online banking app: the game is a lot more engaging and personal.”

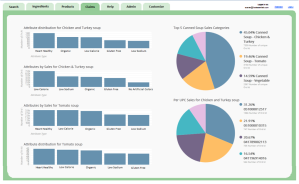

Providing the underlying analysis for this personalization requires solid BI support, and game studios use a variety of tools. IsCool Entertainment can analyze the activity and social behavior of more than a million online gamers with Actian’s Vectorwise, a Hadoop analytics engine. This provides data for calculating rewards, generating leaderboards and delivering virtual prizes, all to enhance customer engagement and retention.
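To make the leaderboard-and-prizes step concrete, here is a minimal sketch of the aggregation involved, written in plain Python rather than anything IsCool or Actian actually runs. It assumes a hypothetical event stream of `(player, points)` pairs; the function names `build_leaderboard` and `award_prizes` and the prize labels are illustrative only. At the scale the article describes, the same grouping and sorting would be pushed down into an analytics engine instead of running in memory.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Iterable


def build_leaderboard(events: Iterable[tuple[str, int]], top_n: int = 3) -> list[tuple[str, int]]:
    """Sum each player's points (hypothetical event format) and return the top_n, highest first."""
    totals: dict[str, int] = defaultdict(int)
    for player, points in events:
        totals[player] += points
    # Sort by descending score; break ties alphabetically so the ranking is deterministic.
    ranked = sorted(totals.items(), key=lambda item: (-item[1], item[0]))
    return ranked[:top_n]


def award_prizes(leaderboard: list[tuple[str, int]], prizes: list[str]) -> dict[str, str]:
    """Give the i-th prize to the i-th ranked player; extra players or prizes are ignored."""
    return {player: prize for (player, _), prize in zip(leaderboard, prizes)}


if __name__ == "__main__":
    events = [("ana", 120), ("ben", 80), ("ana", 30), ("cy", 150), ("ben", 90)]
    board = build_leaderboard(events)
    print(board)  # [('ben', 170), ('ana', 150), ('cy', 150)]
    print(award_prizes(board, ["gold badge", "silver badge"]))
```

Breaking ties by name is just one choice; a real game might instead favor whoever reached the score first.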

“Games have a persistent connection with the user and as a result, we get so much more data,” said Reid Tatoris, the CEO of PlayThru.com. The company produces games that are used instead of the annoying Captcha Turing tests to verify that a human is signing up for a website. “Interacting with an app doesn’t give you the how. Is this person’s interaction human? How did you go about completing the task? That is where we try to help.” An example of their game is shown below.

PlayThru has gained many insights into personal preferences from deploying its app across 20 million page views. “When you are playing a mobile game, you can get all sorts of information about what the user is doing, where they are located, and how they are interacting with the game in near real-time.” Try getting that kind of insight from a user creating a Word document. As a result, PlayThru’s games are seeing submission rates 40 percent higher than traditional text-based Captcha applications.

One game that is a champion of personalization is the site Fanhood.com, which connects sports fans with their favorite teams through Facebook. “There is so much content to navigate, we try to focus on what is relevant for a particular fan,” says the company’s CEO Brandon Ramsey. “What’s more, we try to structure it within your Facebook social graph so you can immediately tell which of your friends are fans of teams that your local team is playing this week.” Fanhood uses MongoDB and Cassandra to manage millions of rows of data for each team and fan to create its personal team updates.

Having all this data is a tremendous opportunity if it is managed properly. Causata works with an online sports betting site and can provide all sorts of specifics, such as who is opening which emails and the path that a customer takes within the site. “We can then predict the number of bets made and their value, the average duration between bets, and the sports that each visitor is most interested in,” says Stone. Causata builds these models using R and Hadoop.
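Before any prediction can happen, each visitor’s raw betting history has to be boiled down into features like the ones Stone lists. The sketch below shows only that feature-building step, for a single visitor. It is not Causata’s pipeline, which the article says uses R and Hadoop; it swaps in plain Python and assumes a hypothetical record layout of `(timestamp, sport, stake)` per bet. The function name `visitor_features` and the output keys are illustrative.

```python
from __future__ import annotations

from collections import Counter
from datetime import datetime
from statistics import mean


def visitor_features(bets: list[tuple[datetime, str, float]]) -> dict:
    """Summarize one visitor's bets, given as hypothetical (timestamp, sport, stake) records."""
    if not bets:
        return {"bet_count": 0, "total_stake": 0.0, "avg_gap_hours": None, "top_sport": None}
    ordered = sorted(bets, key=lambda bet: bet[0])
    # Hours elapsed between each pair of consecutive bets.
    gaps = [
        (later[0] - earlier[0]).total_seconds() / 3600
        for earlier, later in zip(ordered, ordered[1:])
    ]
    sports = Counter(sport for _, sport, _ in ordered)
    return {
        "bet_count": len(ordered),
        "total_stake": sum(stake for _, _, stake in ordered),
        "avg_gap_hours": mean(gaps) if gaps else None,  # None when there is only one bet
        "top_sport": sports.most_common(1)[0][0],
    }


if __name__ == "__main__":
    history = [
        (datetime(2013, 3, 1, 12), "football", 10.0),
        (datetime(2013, 3, 1, 18), "tennis", 5.0),
        (datetime(2013, 3, 2, 0), "football", 20.0),
    ]
    print(visitor_features(history))
```

In practice, features like these would be computed for every visitor and then fed to whatever predictive model the team prefers. The sketch stops short of the modeling itself.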

GPU computing

Finally, there is the practice of using graphics processors to boost general computing tasks. While this concept isn’t new, even here the gaming industry has been ahead of the curve. Several years ago, a group of Swiss researchers put together a cluster of 200 PS3s to form a primitive supercomputer. Although Sony disabled this capability soon afterwards, a number of hosting providers now offer on-demand GPU computing in the cloud, using Nvidia graphics processors and specialized Linux operating systems that can take advantage of this extra horsepower. The providers include Amazon Web Services, Peer 1 and SoftLayer, among others, and they deliver more computing power at lower cost. One of the Amazon configurations placed on the TOP500 list of the world’s most powerful supercomputers for several years running.

All of these BI tools and advanced computing techniques have brought about what Kimberly Chulis, the CEO of Core Analytics, calls “a new focus on advanced analytics and micro-segmentation to drive player monetization. Game developers and brands have an opportunity to apply these big data analytics techniques to capture rich and varied behavioral and multi-structured game and player data.”

One of the biggest problems for ecommerce has always been what happens when customers want to mix your online and brick and mortar storefronts. What if a customer buys an item online but wants to return it to a physical store? Or wants an item that they see online but isn’t in stock in their nearest store?
